-

As an optimization reducing latency for both, read and write requests, Dynamo allows client applications to be coordinator of these operations. In this case, the state machine created for a request is held locally at a client. To gain information on the current state of storage host membership the client periodically contacts a random node and downloads its view on membership. This allows clients to determine which nodes are in charge of any given key so that it can coordinate read requests. A client can forward write requests to nodes in the preference list of the key to become written. Alternatively, clients can coordinate write requests locally if the versioning of the Dynamo instance is based on physical timestamps (and not on vector clocks). Both, the 99.9th percentile as well as the average latency can be dropped significantly by using client-controlled request coordination. This “improvement is because the client.driven approach eliminates the overhead of the load balancer and the extra network hop that may be incurred when a request is assigned to a random node”, DeCandia et al. conclude (cf. [DHJ+07, p. 217f]).

-

As write requests are usually succeeding read requests, Dynamo is pursuing a further optimization: in a read response, the storage node replying the fastest to the read coordinator is transmitted to the client; in a subsequent write request, the client will contact this node. “This optimization enables us to pick the node that has the data that was read by the preceding read operation thereby increasing the chances of getting “read-your-writes” consistency”.

-

A further optimization to reach Amazon’s desired performance in the 99.9th percentile is the introduction of an object buffer in the main memory of storage nodes. In this buffer write requests are queued and persisted to disk periodically by a writer thread. In read operations both the object buffer and the persisted data of storage nodes have to be examined. Using an in-memory buffer that is being persisted asynchronously can result in data loss when a server crashes. To reduce this risk, the coordinator of the write request will choose one particular replica node to perform a durable write for the data item. This durable write will not impact the performance of the request as the coordinator only waits for W

1 nodes to answer before responding to the client (cf. [DHJ+07, p. 215]).

1 nodes to answer before responding to the client (cf. [DHJ+07, p. 215]).

-

Dynamo nodes do not only serve client requests but also perform a number of background tasks. For resource division between request processing and background tasks a component named admission controller is in charge that “constantly monitors the behavior of resource accesses while executing a “foreground” put/get operation”. Monitoring includes disk I/O latencies, lock-contention and transaction timeouts resulting in failed database access as well as waiting periods in the request queue. Via monitoring feedback, the admission controller will decide on the number of time-slices for resource access or consumption to be given to background tasks. It also coordinates the execution of background tasks which have to explicitly apply for resources with the admission controller (cf. [DHJ+07, p. 218]).

4.1.5. Evaluation

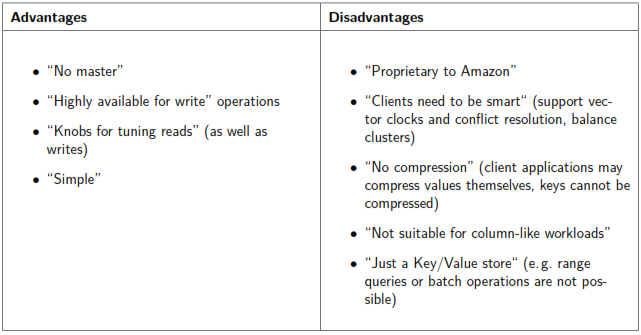

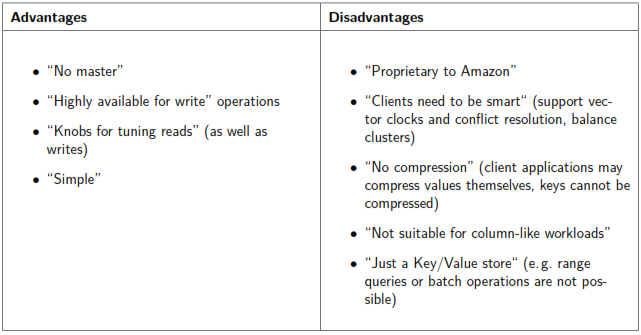

To conclude the discussions on Amazon’s Dynamo, a brief evaluation of Bob Ippolito’s talk “Drop ACID and think about Data” shall be presented in table 4.2.

Table 4.2.: Amazon’s Dynamo – Evaluation by Ippolito (cf. [Ipp09])

4.2. Project Voldemort

Project Voldemort is a key-/value-store initially developed for and still used at LinkedIn. It provides an API consisting of the following functions: (cf. [K+10b]4):

-

get(key), returning a value object

-

put(key, value)

-

delete(key)

Both, keys and values can be complex, compound objects as well consisting of lists and maps. In the Project Voldemort design documentation it is discussed that—compared to relational databases—the simple data structure and API of a key-value store does not provide complex querying capabilities: joins have to be implemented in client applications while constraints on foreign-keys are impossible; besides, no triggers and views may be set up. Nevertheless, a simple concept like the key-/value store offers a number of advantages (cf. [K+10b]):

-

Only efficient queries are allowed.

-

The performance of queries can be predicted quite well.

-

Data can be easily distributed to a cluster or a collection of nodes.

-

In service oriented architectures it is not uncommon to have no foreign key constraints and to do joins in the application code as data is