CHAPTER 8

Software Testing

8.1 Introduction

Testing is done to ensure that the software does what it is intended to do and to discover any defects the program has before it is put to use. When you test software, you execute a program using artificial data. You check the results of the test run for errors, anomalies and information about the program’s non-functional attributes.

The testing process has two distinct goals:

1) To demonstrate to the developer and customer that the software meets its requirements. For custom software, this means there will be at least one test for every requirement listed in the requirements document. For generic software products, there will be tests for all system features and for combinations of the features that will be incorporated in the product release.

2) To discover situations in which the behavior of the software is incorrect, undesirable or does not conform to its specification. These are the results of software defects. Defect testing is concerned with rooting out undesirable system behavior such as system crashes, unwanted interactions with other systems, incorrect computations and data corruption.

The first goal leads to validation testing, where you expect the system to perform correctly using a given set of test cases that reflect the system’s expected use. The second goal leads to defect testing, where the test cases are designed to expose defects. There is no sharp boundary between the two: during validation testing you will find defects in the system, and during defect testing some of the tests will show that the program meets its requirements.

Testing is part of the broader process of verification and validation (V & V). Verification and validation are concerned with checking that the software meets its specification and delivers the functionality required by the customers paying for the software. These checking processes start as soon as the requirements become available and continue through all stages of the software development process.

The aim of verification is to check that the software meets its stated functional and non-functional requirements. Validation, on the other hand, is a more general process. The aim of validation is to ensure that the software meets its customer’s expectations.

The ultimate goal of the verification and validation process is to establish confidence that the software is a good fit for the required purpose. The level of the required confidence depends on the system’s purpose, the expectations of the system users and the marketing environment for the system.

1) Software purpose: The more critical the software, the more important that it is reliable.

2) User expectations: As software matures, users expect it to become more reliable so that more thorough testing of later versions of the software may be required.

3) Marketing Environment: When a system is marketed, the sellers of the system must take into account competing products, the price that customers are willing to pay for a system and the required schedule for delivering the system.

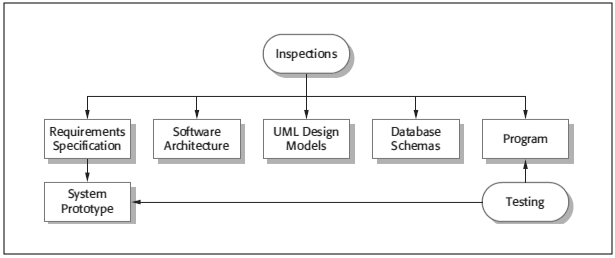

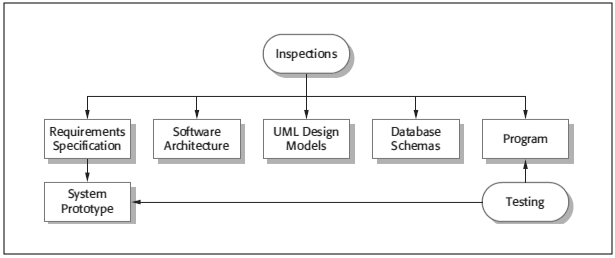

Besides software testing, the verification and validation process also involves inspections and reviews. Inspections and reviews analyze and check the system requirements, design models, program source code and even proposed system tests. The following figure shows inspections and testing. The arrows indicate the stages in the process where these techniques may be used.

There are advantages of inspections over testing. These are:

1) A single inspection session may uncover a lot of errors in the system because inspection is a static process and you don’t have to be concerned about the interactions between errors.

2) Incomplete versions of the system may be inspected without additional costs.

3) As well as searching for program defects, an inspection can also consider the broader quality attributes of a program such as compliance with standards, portability and maintainability.

Fig. 8.1: Inspections and Testing

However, inspections cannot replace software testing. Inspections are not good for discovering defects that arise because of unexpected interactions between different parts of a program, timing problems or problems with system performance. This is where testing and testing processes come into the picture.

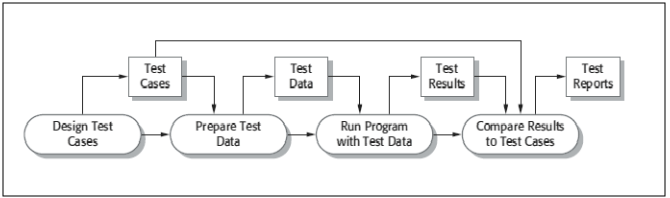

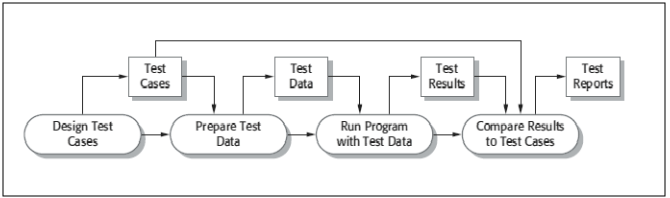

The following figure is an abstract model of the traditional testing process, suitable for plan-driven development. Test cases are specifications of the inputs to the test and the expected outputs from the system (the test results), together with a statement of what is being tested. Test data generation and test execution can be automated, but automatic test case generation is impossible because people who understand what the system is supposed to do must be involved to specify the expected test results.

Fig. 8.2: A model of the software testing process

Typically a commercial software system has to undergo three stages of testing:

1) Development testing where the system is tested during development to discover bugs and defects. System designers and programmers are involved in the testing process.

2) Release testing where a separate testing team tests a complete version of the system before it is released to the users. The objective of release testing is to check that the system meets the requirements of the clients.

3) User testing where potential users test the system in their own environment and decide whether the software can be released, marketed and sold.

8.2 Development Testing

Development testing includes all testing activities carried out by the team developing the system. For critical systems a formal process is used with a separate testing group within the development team. They are responsible for developing tests and maintaining detailed records of test results.

Development testing may be carried out at the following three levels:

1) Unit testing where individual program units or object classes are tested. Unit testing should concentrate on functionality of objects or methods.

2) Component testing where several individual units are integrated to create composite components. Component testing should focus on testing component interfaces.

3) System testing where some or all of the components are integrated and the system is tested as a whole. System testing should focus on testing component interactions.

Development testing is actually a defect testing process where the aim is to discover bugs in the software. It is, therefore, interleaved with debugging which is the process of locating problems with the code and changing the program to fix these problems.

8.2.1 Unit Testing

Unit testing is the process of testing program components such as methods or object classes. Individual methods and functions are the simplest type of component. Your tests should include calls to these routines with different input parameters.

When you are testing object classes, your tests should cover all features of the object. This means you should:

1) Test all operations associated with the object.

2) Set and check all the attribute values associated with the object.

3) Put the object into all the possible states. This means you should simulate all events that cause a state change.

Whenever possible you should automate unit testing. An automated test has three parts:

1) A setup part where you initialize the system with the test case, namely the inputs and expected outputs.

2) A call part where you call the object or method to be tested.

3) An assertion part where you compare the result of the call with the expected output. If the assertion evaluates to true, the test has succeeded; otherwise it has failed.
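The three parts of an automated test can be sketched with Python's standard `unittest` module. The function `word_count` and the test class are hypothetical, introduced only to give the test something to exercise.

```python
import unittest


def word_count(text: str) -> int:
    """Hypothetical unit under test: count whitespace-separated words."""
    return len(text.split())


class WordCountTest(unittest.TestCase):
    def test_counts_words_in_sentence(self):
        # Setup: define the input and the expected output of the test case.
        text = "testing finds defects"
        expected = 3
        # Call: invoke the unit being tested.
        actual = word_count(text)
        # Assertion: compare the actual result with the expected output.
        self.assertEqual(actual, expected)
```

Saved in a file, a test like this can be run with `python -m unittest`, which reports whether each test passed or failed.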

Sometimes the objects that the unit under test depends on, such as a database, can slow down the testing process. This is where mock objects come into the picture. Mock objects are objects with the same interface as the external objects being used, and they simulate the functionality of those objects. For example, a mock object simulating a database may have only a few data items organized in an array. These can be accessed quickly, without the overheads of calling the database and accessing disks, which speeds up the test process.
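A minimal sketch of this idea follows. The class `MockCustomerDatabase`, its `find_name` method and the function `greeting` are assumptions made for illustration; a real database client would offer the same method but read from disk or over a network.

```python
class MockCustomerDatabase:
    """Hypothetical mock exposing the same lookup method as a real database client."""

    def __init__(self):
        # A few records held in an in-memory list instead of on disk.
        self._rows = [(1, "Ada"), (2, "Grace")]

    def find_name(self, customer_id):
        """Return the customer's name, or None if the id is unknown."""
        for row_id, name in self._rows:
            if row_id == customer_id:
                return name
        return None


def greeting(database, customer_id):
    """Hypothetical unit under test: depends only on the find_name interface."""
    name = database.find_name(customer_id)
    return f"Hello, {name}!" if name is not None else "Hello, guest!"


# The unit is tested against the mock, so no real database is needed.
assert greeting(MockCustomerDatabase(), 1) == "Hello, Ada!"
assert greeting(MockCustomerDatabase(), 99) == "Hello, guest!"
```

Because `greeting` only relies on the interface, the same tests could later be pointed at the real database object without changing the unit itself.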

8.2.2 Choosing Unit Test Cases

As testing is expensive and time consuming, it is important that you choose effective unit test cases.

Two effective strategies that help you choose unit test cases are:

1) Partition testing where you identify groups of inputs that have common characteristics and that should be processed in the same way. You should choose tests from within each of these groups.

2) Guideline-based testing where you choose test cases based on testing guidelines. These guidelines reflect previous experience of the kinds of errors that programmers often make when developing components.
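As an illustration of partition testing, the sketch below uses a hypothetical function `ticket_price` whose inputs fall into three valid partitions (child, adult, senior) and one invalid partition (negative ages). The age bands and prices are invented for the example. Adding inputs at the edges of each partition is a common extension and is included here as an assumption rather than something the strategy above requires.

```python
def ticket_price(age: int) -> int:
    """Hypothetical unit: the price depends on the age band of the input."""
    if age < 0:
        raise ValueError("age cannot be negative")
    if age < 13:
        return 5   # child partition: 0..12
    if age < 65:
        return 10  # adult partition: 13..64
    return 7       # senior partition: 65 and over


# (input, expected output) pairs: one value from inside each partition,
# plus the values on either side of each partition boundary.
PARTITION_CASES = [
    (6, 5), (12, 5),              # child: typical value, upper boundary
    (13, 10), (40, 10), (64, 10), # adult: lower boundary, typical, upper boundary
    (65, 7), (80, 7),             # senior: lower boundary, typical value
]


def failing_cases():
    """Return the cases whose actual output differs from the expected output."""
    return [(age, expected) for age, expected in PARTITION_CASES
            if ticket_price(age) != expected]


def rejects_invalid_partition():
    """Check one representative of the invalid partition (negative ages)."""
    try:
        ticket_price(-1)
    except ValueError:
        return True
    return False
```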

8.2.3 Component Testing

Software components are often composite components made up of several interacting objects. Software component testing should, therefore, focus on showing that the component interface behaves in accordance with its specification. You can assume that the unit tests on the individual objects within the component have been completed.

There are different interfaces between program components and therefore, different types of interface errors can occur. These are:

1) Parameter interfaces: These are interfaces in which data and function references are passed from one component to another. Methods in an object have a parameter interface.

2) Shared Memory interfaces: These are interfaces in which a block of memory is shared between components. Data is placed in memory by one subsystem and retrieved from there by other subsystems.

3) Procedural interfaces: These are interfaces in which one component encapsulates a set of procedures that can be called by other components. Objects and reusable components have this type of interface.

4) Message Passing interfaces: These are interfaces in which one component requests a service from another component by passing a message to it. A return message includes the results of executing the service. Some object-oriented systems have this type of interface, as do client-server systems.

Interface errors are one of the most common sources of errors in complex systems. These errors fall into three classes:

1) Interface use: A calling component calls another component and makes an error in the use of its interface. This type of error is common with parameter interfaces where parameters are of the wrong type, or passed in the wrong order, or the wrong number of parameters may be passed.

2) Interface misunderstanding: A calling component misunderstands the specification of the interface of the called component and makes assumptions about its behavior. The called component may not behave as expected which then causes unexpected behavior in the calling component. For instance, the binary search method may be called with a parameter that is an unordered array. The search may then fail.

3) Timing errors: These errors occur in real-time systems that use a shared memory or message-passing interface. The producer and consumer of data may operate at different speeds. Under these circumstances, the consumer of data may access out-of-date data because the producer of data has not yet updated the data at the shared interface.
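The binary search example of interface misunderstanding can be reproduced in a few lines. The function below is a standard iterative binary search written for this illustration; its interface assumes that the caller passes a sorted sequence, and the caller in the example violates that assumption.

```python
def binary_search(items, target):
    """Return the index of target in items, or -1 if it is not found.

    Interface assumption: items is sorted in ascending order.
    """
    low, high = 0, len(items) - 1
    while low <= high:
        mid = (low + high) // 2
        if items[mid] == target:
            return mid
        if items[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return -1


unordered = [5, 1, 4, 2, 3]

# The caller misunderstands the interface and passes an unordered array:
# the value 1 is present, but the search reports that it is missing.
assert binary_search(unordered, 1) == -1

# Called as specified, with a sorted array, the search succeeds.
assert binary_search(sorted(unordered), 1) == 0
```

The called component is not faulty here; the defect lies in the caller's assumption about what the interface accepts, which is why such errors tend to surface only when components are tested together.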

8.2.4 System Testing

System testing involves integrating the components to create a version of the system and then testing the integrated system. System testing checks that components are compatible, interact correctly and transfer the right data at the right moment across their interfaces. It obviously overlaps with component testing but there are two major differences:

1) During system testing, reusable components that have been separately developed and off-the-shelf systems are integrated with newly developed components. The whole system is then tested.

2) Components developed by different team members or groups may be integrated at this stage. System testing is a collective rather than an individual process. In some companies, system testing may be carried out by a separate testing team without involvement from the designers and programmers.

System testing should focus on testing the interactions between the components and objects that make up the system. This interaction testing should reveal component bugs that only show up when a component is used by other components in the system. Because of its focus on interaction testing, use case-based testing is an effective approach to system testing. Typically, each use case is implemented by several components or objects in the system.

8.3 Test-Driven Development

Test-driven development (TDD) is an approach to program development in which you interleave testing and code development. You develop code incrementally, along with a test for each increment. You don’t move on to the next increment until the code you have developed passes its test. Test-driven development was introduced as part of agile methods such as Extreme Programming (XP). However, it can be used in plan-driven development processes as well.

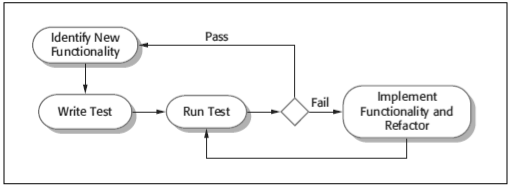

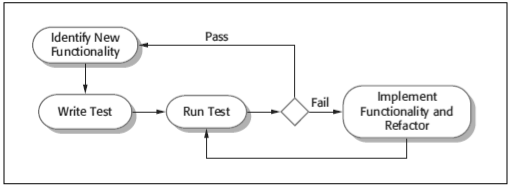

Fig. 8.3: Test-Driven Development Process

The fundamental TDD process is shown in the above figure. The steps in the process are as follows:

1) You start by identifying the increment of functionality that is required. This should normally be small and implementable in a few lines of code.

2) You write a test for this functionality and implement this as an automated test. This means the test can be executed and will report whether it has passed or failed.

3) You then run the test along with all other tests that have been implemented. Initially you have not implemented the increment and the new test will fail. This is deliberate as it shows the test adds something to the test set.

4) You then implement the functionality and re-run the test. This may involve refactoring the existing code to improve it and adding new code to what is already there.

5) Once all tests run successfully, you move on to implementing the next chunk of functionality.
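The cycle above can be sketched in a single file. The leap-year increment, the helper `run` and all function names are hypothetical choices made for this illustration; in practice a test framework would play the role of `run`.

```python
def check_leap_year(is_leap_year):
    """Step 2: an automated test for the increment, written before the code."""
    assert is_leap_year(2024) == True
    assert is_leap_year(2023) == False
    assert is_leap_year(1900) == False  # century year not divisible by 400
    assert is_leap_year(2000) == True   # century year divisible by 400


def run(test, implementation):
    """Execute a test and report whether it passed or failed."""
    try:
        test(implementation)
        return "passed"
    except (AssertionError, NotImplementedError):
        return "failed"


def not_yet_implemented(year):
    """Step 3: the increment does not exist yet, so the new test must fail."""
    raise NotImplementedError


def is_leap_year(year):
    """Step 4: the implementation written to make the test pass."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


assert run(check_leap_year, not_yet_implemented) == "failed"
assert run(check_leap_year, is_leap_year) == "passed"
```

Seeing the test fail before the code is written is what confirms that the test adds something to the test set.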

As well as better problem understanding, other benefits of test-driven development are:

1. Code coverage: In principle, every code segment that you write should have at least one associated test. Therefore, you can be confident that all of the code in the system has actually been executed. Code is tested as it is written, so defects are discovered early in the development process.

2. Regression testing: A test suite is developed incrementally as a program is developed. You can always run regression tests to check that changes to the program have not introduced new bugs.

3. Simplified debugging: When a test fails, it should be obvious where the problem lies. The newly written code needs to be checked and modified. You don’t need to use debugging tools to locate the problem.

4. System documentation: The tests themselves act as a form of documentation that describes what the code should be doing. Reading the tests can make it easier to understand the code.

8.4 Release Testing

Release testing is the process of testing a particular release of the system that is intended for use outside of the development team. Normally the system release is for customers and users. For software products, the release could be for product management who then prepares it for sale.

There are two important differences between release testing and system testing:

1) A separate team that has not been involved in the system development is responsible for release testing.

2) System testing by the development team should focus on discovering the bugs in the system (defect testing). The objective of release testing is to check that the system meets its requirements and is good enough for external use (validation testing).

The primary goal of the release testing process is to convince the supplier of the system that it is good enough for use. If so, it can be released as a product or delivered to the customer. Release testing therefore has to show that the system delivers its specified functionality, performance and reliability, and that it doesn’t fail during normal use. It should take into account all the system requirements, not just the requirements of the end users of the system.

8.4.1 Requirements-Based Testing

A general principle of good requirements engineering practice is that requirements should be testable. A requirement should be written so that a test can be designed for it and a tester can then check that the requirement has been satisfied. Requirements-based testing is validation rather than defect testing: you are trying to validate that the system has adequately implemented its requirements.

However, testing a requirement does not necessarily mean writing a single test. You normally have to write several tests to ensure that you have coverage of the requirement. In requirements-based testing you should also be able to link the tests to the specific requirements that are being tested.

8.4.2 Scenario Testing

Scenario testing is an approach to release testing where you devise typical scenarios of use and use these to develop test cases for the system. A scenario is a story, which describes one way in which the system might be used. Scenarios should be realistic and real system users should be able to relate to them. If you have used scenarios as part of the requirements engineering process, you may be able to use these as testing scenarios.

When you use a scenario-based approach, you are normally testing several requirements within the same scenario. Therefore, along with checking individual requirements, you are also checking that combinations of requirements do not cause problems.

8.4.3 Performance Testing

Once a system has been completely integrated, it is possible to test for emergent properties such as reliability and performance. Performance tests have to be carried out to ensure that the system can process its intended load. This usually involves running a series of tests where you increase the load until the system performance becomes unacceptable.

As with other types of testing, performance testing is concerned both with demonstrating that the system meets its requirements and with discovering problems and defects in the system. To test whether the performance requirements are being achieved, you may have to create an operational profile. An operational profile is a set of tests that reflects the actual mix of work handled by the system. Therefore, if 90% of the transactions are of type A, 5% of type B and the rest of types C, D and E, then you have to design the operational profile such that the vast majority of tests are of type A. Otherwise the tests will not accurately reflect the operational performance of the system.
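A test workload that follows this profile could be generated as sketched below. The text does not say how the remaining 5% is divided among types C, D and E, so the even split used here is an assumption, as are the function and variable names.

```python
import random
from collections import Counter

# Mix taken from the text: 90% type A, 5% type B, remainder shared by C, D, E.
# The even split of the last 5% across C, D and E is an assumption.
OPERATIONAL_PROFILE = {"A": 90.0, "B": 5.0, "C": 5.0 / 3, "D": 5.0 / 3, "E": 5.0 / 3}


def generate_workload(profile, count, seed=0):
    """Draw `count` transaction types at random, weighted by the profile."""
    rng = random.Random(seed)  # fixed seed so the test run is repeatable
    types = list(profile)
    weights = [profile[t] for t in types]
    return rng.choices(types, weights=weights, k=count)


workload = generate_workload(OPERATIONAL_PROFILE, 1000)
mix = Counter(workload)  # how many transactions of each type were drawn
```

Each entry in `workload` would then be turned into one transaction submitted to the system, so that the measured performance reflects the expected mix of work.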

This approach is not necessarily the best approach for defect testing. An effective way to discover defects is to design tests around the limits of the system. This is known as stress testing. For example, if the system is required to handle up to 300 transactions per second, you start by testing it with fewer than 300 transactions per second and gradually increase the load beyond 300 transactions per second until the system fails. This type of testing has two functions:

1) It tests the failure behavior of the system. Circumstances may arise, through an unexpected combination of events, where the load placed on the system exceeds the maximum anticipated load. In these circumstances, system failure should not cause corruption of data or unexpected loss of user services. Stress testing checks that overloading the system causes it to fail soft rather than collapse under its load.

2) It stresses the system and may bring to light defects that would not normally be discovered.

Stress testing is particularly relevant to distributed networked systems. These systems often show degradation when they are heavily loaded. Stress testing helps you discover when degradation begins in these systems so that you can add checks to the system to reject transactions beyond this limit.
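The ramp-up described in the 300 transactions per second example can be sketched as a simple loop. The function `simulated_system` is a hypothetical stand-in for a real load test against the system, and its capacity of 340 transactions per second is an invented figure.

```python
def simulated_system(load_tps, capacity_tps=340):
    """Hypothetical stand-in for the system under test.

    Returns True if the system handles the load, False if it fails.
    """
    return load_tps <= capacity_tps


def find_breaking_load(handles_load, start_tps, step_tps, limit_tps):
    """Increase the load step by step and return the first load that fails.

    Returns None if the system survives every load up to limit_tps.
    """
    load = start_tps
    while load <= limit_tps:
        if not handles_load(load):
            return load
        load += step_tps
    return None


# Start below the design load of 300 tps and push beyond it until failure.
breaking_load = find_breaking_load(simulated_system, start_tps=250,
                                   step_tps=25, limit_tps=1000)
assert breaking_load == 350
```

In a real stress test each step would also record what happens at the point of failure, for example whether data was corrupted, since checking the failure behavior is one of the two purposes of the test.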

8.5 User Testing

User testing is that stage in the testing process in which users or customers provide input and advice on system testing. User testing is essential even when comprehensive system and release testing have been carried out. This is because influences from the user’s working environment have a major effect on the reliability, performance, usability and robustness of a system.

In practice, there are three types of user testing:

1) Alpha Testing: where users of the software work with the development team at the developer’s site to test the software.

2) Beta Testing: where a release of the software is made available to users to allow them to experiment and to raise the problems that they discover with the system developers.

3) Acceptance Testing: where customers test a system in order to decide whether it is ready to be accepted from the system developers and deployed in the customer environment.

In alpha testing, users and developers work together to test a system as it is being developed. This means users can identify problems and issues that are not apparent to the development testing team. Developers can only work from the requirements, but these do not necessarily reflect other factors that affect the practical use of the software. Users can, therefore, provide information about practice that helps with the design of more realistic tests.

Beta testing takes place when an early, sometimes unfinished, version of the software system is made available to customers and users for evaluation. Beta testers could be a selected group of customers who are early adopters of the system. Alternatively, the software may be made available to the public so that anyone interested can use it for beta testing. Beta testing is, therefore, essential to discover interaction problems between the software and the features of the environment where it is used.

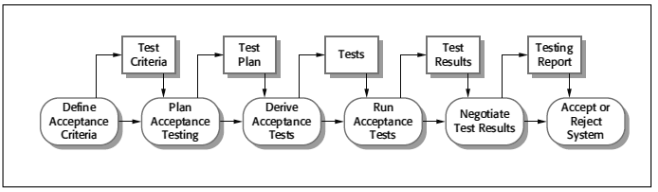

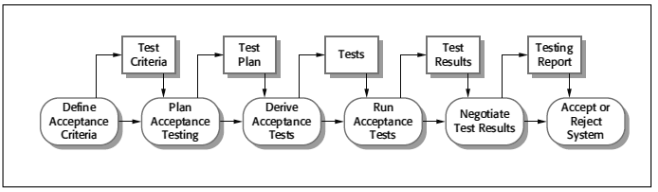

Acceptance testing is an inherent part of custom systems development. It takes place after release testing. A customer formally tests the system to decide whether it should be accepted from the system developer. Acceptance implies that payment should be made for the system. There are six stages in the acceptance testing process. These are:

1) Define acceptance criteria: This stage should, ideally, take place early in the process, before the contract for the system is signed. The acceptance criteria should be part of the contract and agreed upon between the customer and developer.

2) Plan acceptance testing: This involves deciding on the resources, time and budget for acceptance testing and establishing a testing schedule. The acceptance test plan should cover the requirements and define the order in which the system features are tested. It should define risks to the testing process, such as system crashes and inadequate performance, and how these risks can be mitigated.

3) Derive acceptance tests: Once acceptance criteria are defined, tests have to be designed to check whether the system is acceptable. Acceptance tests should test both functional and non-functional (e.g., performance) characteristics of the system.

4) Run acceptance tests: The agreed acceptance tests are executed on the system. Ideally, this should take place in the actual environment where the system will be used. Some training of the end users may be required.

5) Negotiate test results: It is unlikely that all the defined acceptance tests will pass with no problems detected. If they do, acceptance testing is complete and the system can be handed over. More often than not, some problems will be revealed. In that case, the customer and developer have to negotiate to decide whether the system is good enough to put into use.

6) Reject/accept system: At this stage the developers and the customer meet to decide whether the system should be accepted. If it is not, further development is necessary to fix the detected problems. Acceptance testing should then be repeated after the problems have been solved.

In agile methods such as XP, acceptance testing has a rather different meaning. The underlying notion is still that users decide whether the system is acceptable. However, in XP the user is part of the development team (that is, he or she is an alpha tester) and provides the user requirements as user stories. The user is also responsible for defining the tests that decide whether the developed system supports the user stories. The tests are automated and development does not proceed until the story acceptance tests have been passed. Therefore, in XP there is no separate acceptance testing activity.

Fig. 8.4: The Acceptance Testing Process