Chapter 1

Introduction

1.1 Where We've Been and Where We Are Going

Hopefully you've been reading along in this book series and are quickly progressing as a developer or at least gaining a significant appreciation for what developers do. While you do not need to have read the rest of the series, it might help. The source code for the PostgreSQL expense tracking example in this book has been uploaded to SourceForge.net and can be found in the xpnsqt project. All source files will be zipped up and placed on http://www.theminimumyouneedtoknow.com for download as well. Unlike most books in this series, there will not be an accompanying CDROM.

While I did not write the books in exactly this order, the correct order of reading would have been:

The Minimum You Need to Know About Logic to Work in IT

ISBN13 9780977086627

The Minimum You Need to Know to Be an OpenVMS Application Developer

ISBN13 9780977086634

The Minimum You Need to Know About Java on OpenVMS

ISBN13 9780977086610

The Minimum You Need to Know About Service Oriented Architecture

ISBN13 9780977086672

The Minimum You Need to Know About Java and xBaseJ

ISBN13 9780982358030

Infinite Exposure

ISBN13 9780977086696

Originally I wrote the OpenVMS Application Developer book to fill a need. Lots of places run OpenVMS and new installations are happening all of the time, yet there is no source for new OpenVMS developers. It wasn't until after that book was published that I started getting feedback about recent college graduates not understanding logic. At that point I decided to make this a series and began writing the logic book.

With the logic and traditional application development books out in the field there was a natural progression. First we covered Java on OpenVMS using the same application we had developed in COBOL, BASIC, FORTRAN, C and C++ in the application book. It was necessary to cover accessing of RMS files and RDB databases via Java along with using FMS as your screen management tool. Seasoned developers wanted to learn some of the new technology and recent college grads only understood new technology without even the faintest clue about how to access the data which actually ran the company.

Next we stepped forward with Java on the Ubuntu platform accessing services presented by OpenVMS on an internal company network. Some of you might be shocked to learn that “The Minimum You Need to Know About Service Oriented Architecture” won a 2008 Best Book Award in the category Business: Computers/Technology/Internet from USA Book News and was a 2009 Eric Hoffer Finalist. There are a lot of books about Service Oriented Architecture on the market, but damned few actually cover exposing the heritage data silos. They usually try to sell you a chunk of middleware and another never ending support contract along with shiny new security holes.

The next book in the series didn't follow a logical progression per se, it came about based upon need. I was in the process of designing and writing a fuel surcharge application which would eventually be released as a Open Source project and should be usable by someone who isn't any smarter than a Microsoft product. That last requirement meant the application had to use its own indexed file system rather than mandate a user install, configure, and become a DBA for some database product.

The XBASE family of file formats are the most widely used throughout the history of PC computing, but the Java language doesn't directly include anything to support it, nor does it include any other usable indexed file system. (Yes, there is now a massive development effort called Java DB, but not at the time I needed it.) That meant I had to learn a library. I decided that if I had to take the time to learn a new library with scarce documentation that I would write a tutorial and make life easier for the next guy.

Unlike the previous books in this series, the xBaseJ book is available in electronic form only. You can find the PDF with the files for the project on SourceForge. You can also find it listed here:

http://www.freeebooks.net/ebook/TheMinimumYouNeedtoKnowAboutJavaand xBaseJ

From an educational perspective, I need to provide you with a book which uses a popular cross platform C++ GUI tool to do some classic standalone development against a database server. Many in the world of IT will call this “ClientServer” development even though both the client and the server are on the same machine. Historically, this type of development occurred prior to anyone even thinking about inventing the Internet, but modern education wise, it is best to cover this topic after covering Service Oriented Architecture.

Why?

Kids today know very little about application development, but they know a lot about surfing the Web and buying things online. It is easier for them to grasp a process which involves some kind of Web page sending data to some back end system (Service Oriented Architecture.) Only a few of them will have had to work with Quicken or some other personal finance/accounting application hosted on their own computer. A student who has actually used an application on a desktop computer which stores data on a remote database without going through the Internet will be a rare find indeed.

“Infinite Exposure” is quite simply a novel which was written in response to an interview question I got for the OpenVMS Application book. While it has gotten some great reviews, it is not part of my technical book writing.

Some of the previous books in this series used the Mega Zillionare application developed over and over again so you could learn the differences and tradeoffs associated with each tool choice. I could bore the living Hell out of you doing that again, but we don't have different tools, only different databases. I'm going to have to deviate from the norm and develop a new application for multiple databases to show you the main issues. Yes, Qt does quite a good job of hiding the database if you happen to be using a relational database, but in most cases, you cannot simply change the driver namerecompileandgo. There are quite a few differences between supported databases.

Don't worry, I know that most of you will be GUI only developers at best and cannot begin to function without the continual hand holding of an IDE (Integrated Development Environment.) While I developed the PostgreSQL version of our application with nothing more than a text editor and the Qt Designer for form layout, our later examples will include using multiple IDEs and provide quite a few project creation screen shots. You will be exposed to QtCreator, QDevelop, Monkey Studio, and Eclipse with the Qt plugin

Our application will be an expense tracking system which initially uses the PostgreSQL database. We will then redevelop the application using Firebird (Borland Interbase) and SQLite. I will spend one chapter covering the use of stored procedures in PostgreSQL and Firebird. Our final technical chapter will cover problems with long queries and threading. It is too bad Oracle hasn't created a Qt plugin to access RDB or I could show you how to use a modern desktop with the world's highest availability database.

We won't cover MySQL. I know the database is popular. I know many of you will want to use it, but it's quite the quagmire right now. By default, it is not a relational database. MyISAM is the default storage engine and is now the only storage engine for the embedded version. InnoDB was purchased by Oracle some time ago and Oracle has started to squeeze that orange.

http://digitizor.com/2010/11/05/innodbdroppedfromoraclemysqlclassicedition/

While InnoDB is still available in the “community” edition, the fact it has been dropped from the Classic edition and InnoDB now has its own embedded/embeddable version seems to indicate the “community” version of MySQL is going to be trapped with a stagnant InnoDB release.

MyISAM creates a lot of data storage and application design problems. It doesn't currently have a boolean type completely implemented and there is no support for foreign key constraints. While the Boolean problem could be considered a nuisance, the lack of foreign key constraints really torpedoes this project. What good are reference tables containing categories and expenses if the database isn't going to enforce referential integrity? I went through this exact same problem eons ago when I used XBASE files for each table. Without an engine, I had to enforce the integrity from the application level. Not such a good thing.

1.2 Not a GUI Tutorial

This book will not be a GUI tutorial nor will it spend much time covering areas of the Qt library which do not pertain to our sample application's database portions. While it is true that many developers could use this as a Qt tutorial along with the manuals Trolltech provides online, that is not the goal of this book.

If you need additional information on the graphical portions of the library along with the philosophy behind it all I can recommend is a pair of excellent books.

“Foundations of Qt Development” ISBN13: 9781590598313

“C++ GUI Programming with Qt 4 Second Edition” ISBN13: 9780132354165

In truth, this book is meant to cover the one area where those books really fell down. It's sad because it is the one area most developers actually need to understand. Very few of us get paid to write painting and drawing applications. The bulk of business development revolves around getting data into a database and getting reports out of the database.

1.3 ClientServer Full Circle.

The Web doesn't live up to its hype for most applications. In many cases you want control, security, and limited deployment. In some cases you simply want a standalone application or an application that you control access via controlling where it gets installed. You don't want information about your new supersecret billion dollar R&D effort some place it could easily be poached. You want that application secured on its own internal network or machine without any connection to the Internet at all.

Standalone programs on standalone personal computers make sharing data a bit tough. Without a network and exposing some portion of your disk storage to outsiders, the options for sharing data are limited to physical media transfers along with dialand deliver options.

Most of your successful standalone development happened with midrange and mainframe computers where hundreds to thousands of terminals were scattered about a company, but they all connected into a single computer. This made exposing the data as simple as granting a user access to the files or databases.

During the late 1980s through the mid1990s ClientServer was both the rage, and the bane of computing. Nobody was ever able to get it right, but everybody kept trying. The real problem with clientserver was application deployment. We didn't have any automated methods of “pushing” updates out to users and most users didn't have the technical ability to perform an installation on their own.

When corporate desktops all ran some form of nonGUI DOS, file servers seemed to be the ultimate solution. Products like Netware provided security, the ability to address much more disk storage than most versions of DOS, and even provided an indexed file system which handled multiuser access. As long as the desktop computer trying to run the application didn't have too much of that precious 640K already consumed, they could run the executables a user could access.

Users wanted more and more sophisticated applications. Developers wanted to use different tools which did more and more of the programming work for them. These tools had various licensing systems which invariably involved installing some kind of encrypted license key file along with a runtime library (RTL) of some kind. While the file server could still be a great place to host the installation files and the actual data created by the application, the days of having a single executable file which everybody could run were pretty much over.

Another drastic thing happened near the end of the 1990s: corporate desktops started to diversify. While a great many people will be ignorant enough to say that Microsoft rules the corporate desktop, they would be wrong. Apple started making some serious inroads due to things the platform could do which Microsoft simply could not. After the disastrous release of Windows Vista, many corporations started loading various flavors of Linux on their company desktops as well as their servers.

Currently, the PC desktop industry is in a state of turmoil. This turmoil will exist for roughly another decade, but it will be good for the industry in general. All but the most hardened supporters of Microsoft have realized the company has a legacy platform which is in the mode of continually shrinking market share. Apple has a knack when it comes to getting people to pay a premium for their stuff, and recently, per the stock market, became bigger than Microsoft on a total_shares * share_price basis. Another player gaining a lot of momentum and respect are the various Linux distributions. After Windows Vista, anything looked stable, so companies gave it a try. Then the economy tanked worldwide. Suddenly company heads started looking at the annual licensing and support costs of Microsoft products and running the numbers against the Linux and Open Source alternatives. When the savings started being $20$120 per employee per year the 10,000+ employee companies started taking note and issuing marching orders.

The turmoil is what lead to the SOA (Service Oriented Architecture) stampede. Some companies needed to get custom applications working on two to three versions of Windows as well as the current Apple OS and three to four different Linux distributions. Rather than fight the cross platform battle they lunged forward at the idea of providing Webbased applications which used Java and other Open Source tools that ran in/on the major browsers for each operating system.

A great deal of effort was put forth by the W3C and many others to make SOA work. A method of deploying services for discovery via WSDL (Web Services Description Language) was created so any application anywhere could find what it needed and simply pluginto the service. The problem was much of this design centered around openness, not privacy and security.

It never ceases to amaze me when young MBAs refuse to write a new application on OpenVMS or even an IBM mainframe. PCs are all they have ever seen, therefore, that must be all there is to use. They are so dead set against creating a “green screen” application which would be able to restrict access to only those who should really see, that they bring us full circle, back to the mid1980s and cross platform development.

1.4 Today's Cross Platform Challenge.

In today's world, cross platform has a slightly different definition. It used to just mean different operating systems on the desktop, but now it extends to handheld devices like netbooks, PDAs (Personal Digital Assistant), and smart phones. In truth, the distinction between cellular phone, PDA, and netbook is becoming blurred by the continual addition of features to smart phones and the emergence of the iPad. Companies are making wide use of VPNs (Virtual Private Networks) on handheld devices so personnel in the field can run applications which access databases secured behind the corporate firewall. They have a need to maintain one application yet have it run on every desktop, netbook, and PDA deployed by the company. Let us not forget you now have to deal with virtual keyboards and mice on most handheld devices.

I'm no stranger to cross platform development. I was there when the first major plunge happened. Some of you might be old enough to remember the “Zinc It!” book series. It covered the Zinc Application Framework on DOS, OS/2, Windows, and to some extent MAC. The reason I know about that series is I was the author. Zinc Software was a poorly managed company. The product was purchased by Wind River Systems and rolled into various products they offer. Recently, perhaps because of this trend, I have found Zinc is once again being sold as a product by a company. I haven't dug too deeply into it, but they claim to support desktop and embedded platforms.

There is also talk of an Open Source version as well.

Let's be honest. While some commercial product vendors will use the cross platform capabilities of the library, many inhouse developers will simply use the library on a single platform with an eye toward being able to migrate quickly if whims change. A lot of companies which had been staunchly (and stupidly) in the Windows desktop category are now starting to use Qt for Windows development. They are in the process of migrating everything to Qt so they can deploy Ubuntu, OpenSuSE, or some other Linux distribution on the desktop as soon as the bean counters realize just how much it costs to keep Microsoft around. Microsoft has been a victim of its own marketing now. When you actually total up all of the costs, it ends up being the most expensive platform per user of any computing platform, including IBM mainframes and OpenVMS midrange systems. A lot of companies have learned that the OpenVMS box which is still housing their data and some core applications is/was the cheapest per user solution they had even if they didn't like green screen applications.

1.5 Why Ubuntu?

If you have already read “The Minimum You Need to Know About Service Oriented Architecture”, then you aren't asking the above question. For those of you who skipped that book in the series, Ubuntu is quickly becoming the desktop Linux distribution of choice for most IT professionals and even quite a few corporations. Dell has even started preloading Ubuntu on machines for corporate customers. Yes, Lenovo is preloading OpenSuSE, but most other vendors are embracing Ubuntu. OpenSuSE has been tainted by a business deal between Novell and Microsoft which infuriated most of the various Unix/Linux factions and spawned a rewrite of the GPL (GNU Public License) which put Novell in a world of hurt.

We won't get too deep into arguments about which is technically better. I have used both in the past. I used to actually pay for the professional edition of SuSE before it got the silent “Open” added to the front of it. All code in this book will be written and tested on version 10.10 AMD 64bit KUbuntu. You should be able to adapt things for your own environment. I can warn you that many versions of OpenSuSE made it nearly impossible to get PostgreSQL running. It would eventually run, but you had to scour

the Internet looking for hints, snippets, and advice. Ubuntu made it an easy install and nearly automatic configuration. If you are a developer that doesn't want to be a Sys Admin and you don't like hacking kernel startup scripts, Ubuntu is the simpler choice.

Developers who want lots of development tools included with the initial installation tend to prefer using OpenSuSE. Corporations and their support staff tend to prefer Ubuntu. In either case, you don't notice a lot of difference unless you are willing to open up a terminal window and start keying in commands, or you need to install something different. Ubuntu uses the Synaptic Package Manager for installations while OpenSuSE uses YAST (Yet Another Setup Tool) for everything, including adding users, changing the system time, etc. You can load KDE or Gnome front ends under either distribution.

Corporations are preferring Ubuntu desktop distributions because the default desktop interface is based on Gnome. This is a clean, minimalist interface. Users who don't understand the inner workings of Linux will find themselves somewhat limited to surfing the Web, creating documents, checking email, and whatever application links were preloaded by the corporation. To get both the KDE and Gnome session interfaces, you need to navigate your way to the package manager after installation and install the KDE interface (KUBUNTU). KDE is a much more functional interface than Gnome. I will admit there are certain specific things which are much easier in Gnome than KDE, but most common things are far easier in KDE than Gnome. If you have both interfaces installed, you can simply log out and log back in changing your session to which ever interface is easier for what you need to do.

If you install both interfaces on Ubuntu though be prepared for a lot of things to either not work or work spastically. The Ubuntu crowd tests only one installed interface with each release. I have tried many times to make both KUbuntu and Ubuntu play nice together, and finally gave up. Every time you try to log a support issue you get told to wipe everything and install just Ubuntu.

Corporations are more than happy to stick their users with the Gnome interface because they want to restrict what people do on their desktop. How many billions have been lost to people installing stuff on their Windows desktop only to find out it had a virus or some other nasty security hole? A restricted interface keeps users from poking around and installing stuff. The user account can also be set up to not allow installations.

The other major selling point for Ubuntu is stability. Yes, many flavors of Linux and other PCbased operating systems claim stability, but when you ask them how they achieve it, you get a lot of buzzwords along with a song and dance. Ubuntu is generally a year behind the bleeding edge found in the source trunks. While they will pull in some patches and fixes which are newer, they are generally at least six months behind the last stable release of any package which tends to be four to six months behind the bleeding edge of the development trunk. The core developers for Ubuntu will tell you they release every six months so they are only six months behind. This is true only if you are measuring from the last “stable” release each package developer turned out.

OpenSuSE releases once every eight months. They don't put out an LTS release, but they don't have a spastic numbering system like Ubuntu. OpenSuSE 11 has a roadmap spanning several years and multiple .x releases. Ubuntu releases during the fourth and tenth month of every year. Their versions are number YEAR.MONTH.

In short, we are using Ubuntu because it plays the game the best and doesn't have a taint from the MicrosoftNovell deal and doesn't have YAST. That said, Ubuntu has about a five year window to fix what is wrong with it before it disappears from the market place like Corel Linux. For more information on that read, “Ubuntu's Original Sin” in the Ruminations chapter.

1.6 Why Qt?

To start with, there is an Open Source version which is regularly updated. It is the GUI library used for all KDE project development. Linux is gaining a lot of popularity and KDE is the major desktop. While Gnome gained a lot of corporate support because it is a bare interface which doesn't let the experimental end user get into too much trouble, for most developers and seasoned computer users, Gnome is much like rubbing sticks together to make fire.

While it can be correctly stated that Open Source projects let in far too many junior programmers with buggy code, that statement would be incorrect when it comes to the Qt library. There are commercial developers sifting the wheat from the chaff. This is not to say the library doesn't have bugs. What I'm saying is that there are literally thousands of developers working on various portions of KDE (and now the new Ubuntu Gnomelike interface based on Qt instead of Gtk+.) These developers report bugs as they find them and in many cases send along source changes to be incorporated into the main product trunk. Few commercial products can afford thousands of professional developers. By going Open Source with much of the library, Trolltech (now part of Nokia) has affectively added thousands of developers to their staff at little to no cost. The cross platform libraries from days of old didn't go this route. They tried to get by with a limited number of inhouse developers funded by astronomical license fees.

Let us not forget that Nokia has chosen Qt as its standard (basically by purchasing the product) and there is a lot of development going on for handheld applications in that company. More and more embedded systems developers are choosing to use Qt on their embedded project as a result of this. Ubuntu even announced that the main development library for its Gnomelike front end will now be Qt as well. While there are many Gtk+ diehards out there, the writing is on the wall. Qt managed to achieve a consensus of what should be provided on all platforms and the “benefits” of proprietary toolkits no longer exceed the “costs” of using them.

There is a lot of online documentation on Qt. Trolltech and Nokia have gone to great lengths to make all of Qt's documentation available online. Many other sites also host copies of it in case the primary site goes down. In addition, the documentation is included in the free QtAssistant document browser which is installed with most development packages. Much of the documentation is “expert friendly.” It works well if you are already a seasoned veteran and just need to look something up. It doesn't work well from the learning aspect.

I needed to develop the xpnsqt application to replace something I had written in Lotus Approach many years ago. I kept waiting and waiting for Lotus/IBM to have a divine visitation and port the Lotus SmartSuite to Linux. I guess all of the major deities have been busy for the past decade. Now that OpenOffice has made great strides the word processor and spreadsheet market simply wouldn't be profitable. Approach was one of those tools nobody used much. It did things well so you simply didn't remember what tool you used for development. The xpnsqt application took me 45 minutes to develop with Lotus Approach well over a decade ago without ever having used Approach before. The replacement weighed in around four days. Two and a half of those days were spent trying to use a new Qt database class one of the books was gaga about. That class simply wasn't ready to run with the big dogs.

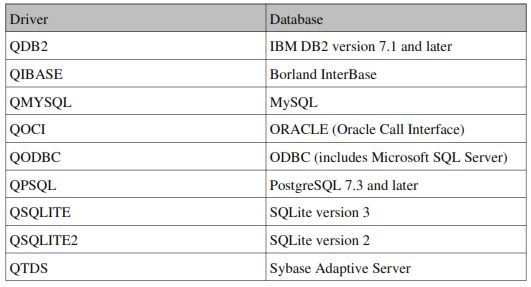

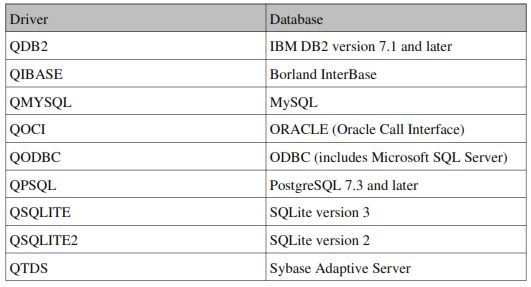

You have quite a few database choices when developing with Qt. The database classes are actually wrapper classes for various database plugins. Many of the plugins can be compiled into the library so they are statically linked with your application. Static linking provides an immense amount of data and application security over plugin use. For more on that topic see “plugin vs. Static Compile” in the Ruminations chapter. At the time of this writing Qt supports the following database plugins:

Table 1: Qt Database Drivers

Not all drivers are shipped with all versions of Qt. Some drivers only work on some platforms because the database only runs on some platforms. Other than an ODBC driver, I don't know how you would access Oracle RDB from within Qt. (Remember that Oracle and Oracle RDB are two completely different database products.) Interestingly enough, the OpenVMS community has been porting Qt to the OpenVMS platform for quite some time. Trolltech doesn't seem willing to adopt these ports, so the community has been working diligently at them. You can find some interesting information in this message thread:

http://groups.google.com/group/comp.os.vms/browse_thread/thread/4e1bacfc0f3358400c4ad8634cf9f0e?hl=en&lnk=gst&q=Qt#00c4ad8634cf9f0e

Qt comes (on most platforms) with its own version of SQLite. This is like the default MySQL for many Linux distributions, without the additional administration issues. It is an SQL interface wrapped around an indexed file system. (MySQL defaults to an indexed file system on most platforms. You have to actually force InnoDB at time of database creation to get a relational engine.) Since SQLite is much lighter than MySQL, it will let you create your indexed files pretty much where ever you happen to be. MySQL requires databases to be created where its startup script or system logical points (at least for the server version.) It is popular with PCminded developers because it allows them to gain the benefits of an SQL interface without having to learn how to actually design and develop around a database. (Those of you looking for more information about logicals should read ISBN13 9780977086634.)

In theory, the wrapper classes protect you from the quirks found in each database. I say in theory because the higher level wrappers don't work cleanly yet, so you end up writing quite a bit of SQL and using query functions. Each target database has some minor differences in their query languages and connection methods. Why hasn't this become a major issue? Quite simply, very few applications ever get ported between database products. The primary development effort with Qt has been to use it writing KDE (the K Desktop Environment for Unix/Linux), many text editors, and a few IDEs. A major secondary market has been to develop applications for Nokia phones and other handheld devices. Very few applications are ported between the two environments, but that is starting to change. Most of those which are ported can get by with the limited capabilities of SQLite.

These days, many companies will start development with a cross platform tool knowing they will have to port to another platform in the future, even if they don't want to port. How is that possible? Take a look at the DOS market. There were a rash of DOS specific GUI libraries during DOS's heyday. Then came Windows (GUI DOS), Windows for Work Groups (even more GUI DOS), Windows 3.x (still more GUI DOS), Windows 95 (GUIer DOS), Windows 98 (DOS locked in the dungeon still doing the bulk of the work for the GUI), Windows NT, Windows XP, and most recently Windows Vista. (Yes, I skipped OS/2, a better DOS than DOS and a better Windows than Windows.) Every three to ten years, the underlying environment changed. A cross platform library can minimize the impact to your application. In