Thirty years ago, the French philosopher and literary theorist Jean-François Lyotard published a prescient “report on knowledge” called The Postmodern Condition. Originally commissioned by the Conseil des Universités of the government of Quebec, the report was an investigation of “the status of knowledge” in “computerized societies” (3). Lyotard's working hypothesis was that the nature of knowledge—how we know, what we know, how knowledge is communicated, what knowledge is communicated, and, finally, who “we” as knowers are—had changed in light of the new technological, social, and economic transformations that have ushered in the post-industrial age, what he calls, in short, postmodernism. Much more than just a periodizing term, postmodernism, for Lyotard, bespeaks a new cultural-economic reality as well as a condition in which “grand narratives” or “meta-narratives” no longer hold sway: the progress of science, the liberation of humanity, the spread of Enlightenment and rationality, and so forth are meta-narratives that have lost their cogency. This itself is not an original observation; after all, Nietzsche, Benjamin, Adorno, Horkheimer, Foucault, and others have variously shown where the fully enlightened world ends up. What sets Lyotard apart is his focus on how knowledge has been transformed into many “small” (and even competing and contradictory) narratives and how scientific knowledge in particular has become transformed into “bits of information” with the rise of cybernetics, informatics, information storage and databanks, and telematics, rendering knowledge something to be produced in order to be sold, managed, controlled, and even fought over (3-5). In these computerized societies (remember this is 1979: the web didn't exist and the first desktop computers were just being introduced), the risk, he claims, is the dystopian prospect of a global monopoly of information maintained and secured by private companies or nation-states (4-5). 
Needless to say, Google was founded about twenty years later, although ostensibly with a somewhat different mission: to make the world's information universally accessible and useful.

Lyotard articulated one of the most significant contemporary struggles—namely, the proprietary control of information technologies, access and operating systems, search and retrieval technologies, and, of course, content, on the one hand, and the “open source” and “creative commons” movement on the other. Beyond that, he drew attention to several other changes that have affected what he considered to be the state of knowledge in postmodernism: first, the dissolution of the social bond and the disaggregation of the individual or the self (15); second, the interrogation of the university as the traditional legitimator of knowledge; and third, the idea that knowledge in this new era can only be legitimated by “little narratives” based on what he calls “paralogy” (a term that refers to paradox, tension, instability and the capacity to produce “new moves” in ever-shifting “language games”). While I will not evaluate Lyotard's argument extensively here, I do think it's worth underscoring these points because, perhaps surprisingly, they apply just as much to 2009 as they did to 1979. After all, the social bond today is fundamentally realized through interactions with distributed and equally abstracted networks such as email, IM, text messaging, and Facebook that are accessed through computers, mobile phones, and other devices connected to “the grid.” It has become impossible to truly “de-link” from these social networks and networking technologies, as the self exists “in a fabric of relations that is now more complex and mobile than ever before . . . located at 'nodal points' of specific communication circuits. . . . Or better [Lyotard says] one is always located at a post through which various kinds of messages pass” (15).

Lyotard’s discussion of the role of the university in postmodernism has become increasingly relevant over the last three decades. The university is no longer the sole, and perhaps not even the privileged, site of knowledge production, curation, stewardship, and storage. Traditionally an exclusive, walled-in institution, the university legitimates knowledge while reproducing rules of admission to and control over discourses. Not just anyone can speak (one must first be sanctioned through lengthy and decidedly hierarchical processes of authorization), and the knowledge that is transmitted is primarily circulated within and restricted to relatively closed communities of knowers (Foucault calls them “fellowships of discourse”). True statements are codified, repeated, and circulated through various kinds of disciplinary and institutional forms of control that legitimize what a “true statement” is within a given discipline: before a statement can even be admitted to debate, it must first be, as Foucault argued repeatedly, “within the true” (224). For an idea to fall “within the true,” it must not only cite the normative truths of a given discipline but—and this is the crux of this essay—it must look “within the true” in terms of its methodology, medium, and mode of dissemination. Research articles can't look like Wikipedia entries; monographs can't be exhibitions curated in Second Life. At least not yet . . .

Thankfully, universities are far from static or monolithic institutions and, as Lyotard and others point out, there is plenty of room for an imaginative reinvention of the university, of disciplinary structures, and research and pedagogical practices. This imaginative investment lies in the ability to “make a new move” or change the rules of the game by, perhaps, arranging or curating data in new ways, thereby developing new constellations of thought that “disturb the order of reason” (61). The next part of this article will address precisely what this might mean for the work of the Digital Humanities today.

For now, I want to articulate the third and final point that I adopt from Lyotard, namely the problem of legitimation. Wikipedia can stand as a synecdoche for the problems of knowledge legitimation: who can create knowledge, who monitors it, who authorizes it, who disseminates it, and whom does it influence and to what effect? Legitimation is always, of course, connected to power, whether the power of a legal system, a government, a military, a board of directors, an information management system, the tenure and promotion system, the book publishing industry, or any oversight agency. Not only are modes of discourse (utterances, statements, arguments) legitimized by the standards established by a given discipline, by its practitioners, and by its history, so are the media in which these discursive statements are formulated, articulated, and disseminated. The normative medium for conveying humanities knowledge (certainly in core disciplines such as literature and literary studies, history, and art history, but also philosophy and the humanistic social sciences, as they have been codified since the nineteenth century) is print: the printed page—linear, paginated prose supported by a bibliographic apparatus—is the naturalized medium, and the knowledge it conveys is legitimated by the processes of peer review, publication, and citation. This is not necessarily a problem—it certainly works, makes sense, and is authoritative. But we should also remember that this medium wasn't always used and won't always be: think, for example, of rhetoric and philosophy, grounded in oral and performative traditions, or of “practice-based” disciplines such as dance, design, film, and music, in which the intellectual product is not a print artifact. In much the same vein, Digital Humanities denaturalizes print, awakening us to the importance of what N. Katherine Hayles calls “media-specific analysis” in order to focus attention on the technologies of inscription, the material support, the system of writing down (“aufschreiben,” as Friedrich Kittler would say), the modes of navigation (whether turning pages or clicking icons), and the forms of authorship and creativity (not only of content but also of typography, page layout, and design). In this watershed moment of transformation, awareness of media-specificity is nearly inescapable.

Far from suggesting that new technologies are better or that they will save us (or resuscitate our “dying disciplines” or "struggling universities"), Lyotard concludes his report with a call for the public to have “free access to the memory and data banks” (67). He grounds the argument dialectically, as technologies have the potential to do many things at once: to exercise exclusionary control over information as well as to democratize information by opening up access and use. This, I would argue, is the persistent dialectic of any technology, ranging from communications technologies (print, radio, telephone, television, and the web) to technologies of mobility and exchange (railways, highways, and the Internet). These technologies of networking and connection do not necessarily bring about the ever-greater liberation of humankind, as Nicholas Negroponte asserted in his wildly optimistic book Being Digital (1995), for they always have a dialectical underbelly: mobile phones, social networking technologies, and perhaps even the hundred-dollar computer will not only be used to enhance education, spread democracy, and enable global communication but will also likely be used to perpetrate violence and even orchestrate genocide in much the same way that the radio and the railway did in the last century (despite the belief that both would somehow liberate humanity and join us all together in a happy, interconnected world that never existed before [Presner]). Indeed, this is why any discussion about technology cannot be separated from one about power, legitimacy, and authority.

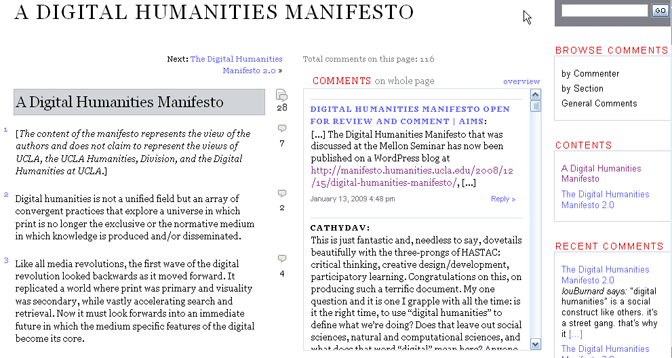

Rather than making predictions, I would like to turn to the state of knowledge in the humanities in 2009. My relatively recent arrival in this discussion, after centuries of thought on this topic, constitutes, in fact, a unique vantage point from which I can begin: today, the changes brought about by new communication technologies—including but hardly limited to web-based media forms, locative technologies, digital archives, social networking, mixed realities, and now cloud computing—are so proximate and so sweeping in scope and significance that they may appropriately be compared to the print revolution. But our contemporary changes are happening on a very rapid timescale, taking place over months and years rather than decades and centuries. Because of the rapidity of these developments, the intellectual tools, methodologies, disciplinary practices, and institutional structures have just started to emerge for responding to, engaging with, and interpreting the massive social, cultural, economic, and educational transformations happening all around us. Digital Humanities explores a universe in which print is no longer the exclusive or normative medium in which knowledge is produced and/or disseminated; instead, print finds itself absorbed into new, multimedia configurations, alongside other digital tools, techniques, and media that have profoundly altered the production and dissemination of knowledge in the Arts, Humanities, and Social Sciences (see, for example, the Digital Humanities Manifesto, Figs. 1 and 2).

I consider “Digital Humanities” to be an umbrella term for a wide array of practices for creating, applying, interpreting, interrogating, and hacking both new and old information technologies. These practices—whether conservative, subversive, or somewhere in between—are not limited to conventional humanities departments and disciplines, but affect every humanistic field at the university and transform the ways in which humanistic knowledge reaches and engages with communities outside the university. Digital Humanities projects are, by definition, collaborative, engaging humanists, technologists, librarians, social scientists, artists, architects, information scientists, and computer scientists in conceptualizing and solving problems, which often tend to be high-impact, socially-engaged, and of broad scope and duration. At the same time, Digital Humanities is an outgrowth and expansion of the traditional scope of the humanities, not a replacement for or rejection of humanistic inquiry. I firmly believe that the role of the humanist is more critical at this historic moment than ever before, as our cultural legacy as a species migrates to digital formats and our relation to knowledge, cultural material, technology, and society is radically re-conceptualized. As Jeffrey Schnapp and I articulated in various instantiations of the Digital Humanities Manifesto, it is essential that humanists assert and insert themselves into the twenty-first century cultural wars (which are largely being defined, fought, and won by corporate interests). Why, for example, were humanists, foundations, and universities conspicuously—even scandalously—silent when Google won its book search lawsuit and effectively won the right to transfer copyrights of orphaned books to itself? Why were they silent when the likes of Sony and Disney essentially engineered the Digital Millennium Copyright Act, radically restricting intellectual property, copyright, and sharing? 
The Manifesto is a call to humanists for a much deeper engagement with digital culture production, dissemination, access, and ownership. If new technologies are dominated and controlled by corporate and entertainment interests, how will our cultural legacy be rendered in new media formats? By whom and for whom? These are questions that humanists must urgently ask and answer.

Like all manifestos, especially those that came out of the European avant-garde in the early twentieth century, the Digital Humanities Manifesto is bold in its claims, fiery in its language, and utopian in its vision. It is not a unified treatise or a systematic analysis of the state of the humanities; rather, it is a call to action and a provocation that has sought to perform the kind of debate and transformation for which it advocates. As a participatory document circulated throughout the blogosphere, the three major iterations of the Digital Humanities Manifesto are available in many forums online: Versions 1.0 and 2.0 exist primarily as Commentpress blogs, and Version 3.0 is an illustrated, print-ready PDF file, which, as of this writing, has been translated into four languages and widely cited, cribbed, remixed, and republished on numerous blogs. The rationale for using Commentpress was to make some of the more incendiary ideas in the Manifesto available for immediate public scrutiny and debate, something that is facilitated by the blogging engine's paragraph-by-paragraph commenting feature, resulting in a richly interlinked authoring/commenting environment. In a Talmudic vein, the comments and critiques quickly overtook the original “text,” creating a web of commentary and a multiplication of voices and authors. By Versions 2.0 and 3.0, the authorship of the Manifesto had extended in multiple directions, with substantial portions authored by scholars in the field, students, and the general public. Moreover, since the Manifesto was widely distributed in the blogosphere and on various Digital Humanities listservs, it instantiated one of the key things that it called for: participatory humanities scholarship in the expanded public sphere.

http://manifesto.humanities.ucla.edu/2008/12/15/digital-humanities-manifesto/

PDF Version 3.0: http://digitalhumanities.ucla.edu/images/stories/mellon_seminar_readings/manifesto%2020.pdf

Reflecting on the Manifesto nine months later, I believe it is not only a call for humanists to be deeply engaged with every facet of the most recent information revolution (Robert Darnton points out that we are living through the beginnings of the fourth Information Age, not the first), but also a plea for humanists to guide the reshaping of the university—curricula, departmental and disciplinary structures, library and laboratory spaces, the relationship between the university and the greater community—in creative ways that facilitate the responsible production, curation, and dissemination of knowledge in the global cultural and social landscapes of the twenty-first century. Far from providing “right answers,” the Manifesto is an attempt to examine the explanatory power, relevance, and cogency of established organizations of knowledge that were inherited from the nineteenth and twentieth centuries and to imagine creative possibilities and futures that build on long-standing humanistic traditions. It is not a call to throw the proverbial baby out with the bathwater, but rather to interrogate disciplinary and institutional structures, the media of knowledge production, and modes of meaning making, and to take seriously the challenges and possibilities set forth by the advent of the fourth Information Age. The Manifesto argues that the work of the humanities is absolutely critical and, in fact, more necessary than ever for developing thoughtful responses, purposeful interpretations, trenchant critiques, and creative alternatives—and that this work cannot be done while locked into restrictive disciplinary and institutional structures, singular media forms, and conventional expectations about the purview, function, and work of the humanities.

The Manifesto in no way declares the humanities "dead" or placed in peril by new technologies; rather, it argues, the humanities are more necessary and relevant today than perhaps any other time in history. It categorically rejects Stanley Fish's lament of “the last professor” and the work of his students, such as Frank Donoghue, which claims that the humanities will soon die a quiet death. To be sure, we must be vigilant of the “corporate university” and distinguish our Digital Humanities programs from the “digital diploma mills” (Noble), but we must also demonstrate that the central work of the humanities—creation, interpretation, critique, comparative analysis, historical and cultural contextualization—is absolutely essential as our cultural forms migrate to digital formats and new cultural forms are produced that are “natively digital.” Fish and Donoghue make an assessment of the end of the humanities based on the fact that its research culture, curricular programs, departmental structures, tenure and promotion standards, and, most of all, publishing models are based on paradigms that are quickly eroding. Indeed, they are not wrong about their assessment, which is quite convincing when we start from the crisis of the present and look backwards: academic books in the humanities barely sell enough copies to cover the cost of their production, and the job market—as the 2009 MLA report attests—betrays the worst year on record for PhDs hoping to land tenure-track positions in English or Foreign Literature departments (Jaschik). What this evidences is certainly a crisis, but the way out is neither to surrender nor to attempt to replicate the institutional structures, research problems, disciplinary practices, and media methodologies of the past; rather, it may be to recognize the liberating—and profoundly unsettling—possibilities afforded by the imminent disappearance of one paradigm and the emergence of another. 
The humanities, rather than disappear as Fish predicts, can instead guide this paradigm shift by shaping the look of learning and knowledge in this new world.

Instead of facilely dismissing either the critical work of the humanities or the potentialities afforded by new technologies, we must be engaged with the broad horizon of possibilities for building upon excellence in the humanities while also transforming our research culture, our curriculum, our departmental and disciplinary structures, our tenure and promotion standards, and, most of all, the media and format of our scholarly publications. While new technologies may threaten to overwhelm traditional approaches to knowledge and may, in fact, displace certain disciplines, scholarly fields, and pedagogical practices, they can also revitalize humanistic traditions by allowing us to ask questions that weren't previously possible. We see this, for example, in fields such as classics and archaeology, which have widely embraced digital tools such as Geographic Information Systems (GIS) and 3D modeling to advance their research in significant and unexpected ways. We also see it in text-based fields like history and literature, which have begun to draw on new authoring, data-mining, and text-analysis tools for dissecting complex corpora on a scale and with a level of precision never before possible.

While the first wave of Digital Humanities scholarship in the late 1990s and early 2000s tended to focus on large-scale digitization projects and the establishment of technological infrastructure, the current second wave of Digital Humanities—what can be called “Digital Humanities 2.0”—is deeply generative, creating the environments and tools for producing, curating, and interacting with knowledge that is “born digital” and lives in various digital contexts. While the first wave of Digital Humanities concentrated, perhaps somewhat narrowly, on text analysis (such as classification systems, mark-up, text encoding, and scholarly editing) within established disciplines, Digital Humanities 2.0 introduces entirely new disciplinary paradigms, convergent fields, hybrid methodologies, and even new publication models that are often not derived from or limited to print culture.

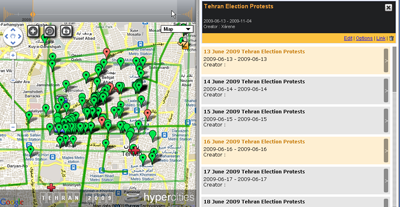

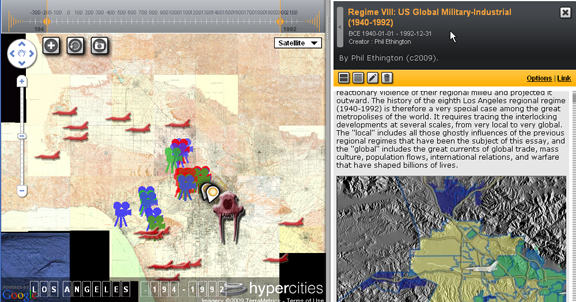

Let me provide a couple of examples based on my own work on a web-based research, educational, and publishing project called HyperCities (http://www.hypercities.com). Developed through collaboration between UCLA and USC, HyperCities is a digital media platform for exploring, learning about, and interacting with the layered histories of city spaces such as Berlin, Rome, New York, Los Angeles, and Tehran. It brings together scholars from fields such as geography, history, literary and cultural studies, architecture and urban planning, and classics to investigate the fundamental idea that all histories “take place” somewhere and sometime and that these histories become more meaningful and valuable when they interact with other histories in a cumulative, ever-expanding, and interactive platform. Developed using Google's Map and Earth APIs, HyperCities features research and teaching projects that bring together the analytic tools of GIS, the geo-markup language KML, and traditional methods of humanistic inquiry. The central theme is geo-temporal analysis and argumentation, an endeavor that cuts across a multitude of disciplines and relies on new forms of visual, cartographic, and time/space-based narrative strategies. Just as the turning of the page carries the reader forward in a traditionally conceived academic monograph, so, too, the visual elements, spatial layouts, and kinetic guideposts guide the “reader” through the argument situated within a multi-dimensional, virtual cartographic space. HyperCities currently features rich content on ten world cities, including more than two hundred geo-referenced historical maps, hundreds of user-generated maps, and thousands of curated collections and media objects created by users in the academy and general public.
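To make the idea of geo-temporal markup more concrete, the following is a minimal sketch of how a handful of media objects might be expressed as time-stamped KML placemarks. It is offered only as an illustration and should not be read as HyperCities' actual data model or code: the function name `build_kml`, the dictionary keys, and the sample titles, times, and coordinates are all hypothetical. The sketch also assumes that every timestamp is an ISO 8601 string in the same UTC format, so that sorting the strings sorts the objects chronologically.

```python
import xml.etree.ElementTree as ET

# Namespace of the KML 2.2 standard.
KML_NS = "http://www.opengis.net/kml/2.2"


def build_kml(media_objects):
    """Return a KML document (as a string) with one time-stamped
    Placemark per media object, ordered chronologically.

    Each media object is a dict with hypothetical keys:
    'title', 'when' (ISO 8601 UTC string), 'lon', and 'lat'.
    """
    # Serialize KML elements without a namespace prefix.
    ET.register_namespace("", KML_NS)

    def tag(name):
        return f"{{{KML_NS}}}{name}"

    kml = ET.Element(tag("kml"))
    document = ET.SubElement(kml, tag("Document"))

    # Assumption: identically formatted UTC timestamps sort correctly as strings.
    for obj in sorted(media_objects, key=lambda o: o["when"]):
        placemark = ET.SubElement(document, tag("Placemark"))
        ET.SubElement(placemark, tag("name")).text = obj["title"]

        # The temporal half of the record: when the event took place.
        timestamp = ET.SubElement(placemark, tag("TimeStamp"))
        ET.SubElement(timestamp, tag("when")).text = obj["when"]

        # The spatial half: KML writes coordinates as longitude,latitude.
        point = ET.SubElement(placemark, tag("Point"))
        ET.SubElement(point, tag("coordinates")).text = f"{obj['lon']},{obj['lat']}"

    return ET.tostring(kml, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical sample records, not data from any HyperCities collection.
    sample = [
        {"title": "Media object B", "when": "2009-06-16T10:00:00Z",
         "lon": 51.39, "lat": 35.70},
        {"title": "Media object A", "when": "2009-06-15T16:30:00Z",
         "lon": 51.34, "lat": 35.70},
    ]
    print(build_kml(sample))
```

Because each placemark carries both a point and a timestamp, a viewer that understands KML time elements can, in principle, present the same set of objects either spatially (what happened where) or along a timeline (what happened when), which is the kind of pairing that geo-temporal argumentation relies on.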

As a Digital Humanities 2.0 project, HyperCities is a participatory platform that features collections that pull together digital resources via network links from countless distributed databases. Far from a single container or meta-repository, HyperCities is the connective tissue for a multiplicity of digital mapping projects and archival resources that users curate, present, and publish. What they all have in common is geo-temporal argumentation. For example, the digital curation project “2009-10 Election Protests in Iran” (see Fig. 3) meticulously documents, often minute-by-minute and block-by-block, the sites where protests emerged in the streets of Tehran and other cities following the elections in mid-June. With more than one thousand media objects (primarily geo-referenced YouTube videos, Twitter feeds, and Flickr photographs), the project is possibly the largest single digital collection to trace the history of the protests and their violent suppression. It is a digital curation project that adds significant value to these individual and dispersed media objects by bringing them together in an intuitive, cumulative and open-ended geo-temporal environment that fosters analytic comparisons through diachronic and synchronic presentations of spatialized data. In addition to organizing, presenting, and analyzing the media objects, the creator of the project, Xarene Eskandar, is also working on qualitative analyses of the data (such as mappings of anxiety and shame) as well as investigating how media slogans used in the protests were aimed at many different audiences, especially Western ones.

YouTube video on this collection: http://www.youtube.com/watch?v=qkEN02dGOlU

Permalink to this collection in HyperCities: http://hypercities.ats.ucla.edu/#collections/13549

Another project, “Ghost Metropolis” by Philip Ethington (see Fig. 4), is a digital companion to his forthcoming book on the history of Los Angeles, which starts in 13,000 BCE and extends through the present. Ethington demonstrates how history, experienced with complex visual and cartographic layers, “takes” and “makes” place, transforming the urban, cultural, and social environment as various “regional regimes” leave their impression on the landscape of the global city of Los Angeles. The scholarship of this project can be fully appreciated only in a hypermedia environment that allows a user to move seamlessly between global and local history, overlaying datasets, narratives, cartographies, and other visual assets in a richly interactive space. Significantly, this project—a scholarly publication in its own right—can be viewed side-by-side with and even “on top of” other projects that address cultural and social aspects of the same layered landscape, such as the video documentaries created in 2008-09 by immigrant youth living in Los Angeles' historic Filipinotown. The beauty of this approach is that scholarly research intersects with and is enhanced by community memories and archiving projects that tend, at least traditionally, to exist in isolation from one another.

YouTube video on this collection: http://www.youtube.com/watch?v=iogP_YvKs1w

Permalink to this collection in HyperCities: http://hypercities.ats.ucla.edu/#collections/15165

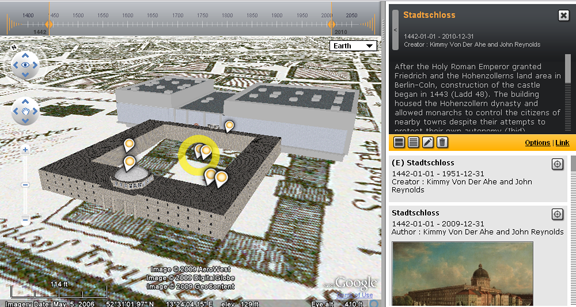

HyperCities is also used for pedagogical purposes to help students visualize and interact with the complex layers of city spaces. Student projects exist side-by-side with scholarly research and community collections and can be seen and evaluated by peers. These projects, such as those created by my students for a General Education course at UCLA, “Berlin: Modern Metropolis,” demonstrate a high degree of skill in articulating a multi-dimensional argument in a hypermedia environment and bring together a wide range of media resources ranging from 2D maps and 3D re-creations of historical buildings to photographs, videos, and text documents (see Fig. 5). What all of these projects have in common is an approach to knowledge production that underscores the distributed dimension of digital scholarship (by dint of the fact that all of the projects make use of digital resources from multiple archives joined together by network links), its interdisciplinary, hypermedia approach to argumentation, and its open-ended, participatory approach to interacting with and even extending and/or remixing media objects. Moreover, with the exception of the last, all of these HyperCities projects are works-in-progress, something that underscores the processual, iterative, and exploratory nature of Digital Humanities scholarship.