A portal (or Web portal) presents information from diverse sources in a unified way. A Web site that offers a broad array of resources and services, such as e-mail, forums, search engines and online shopping, is referred to as a portal. The first portals grew out of online services, such as AOL, and provided access to the Web, but by now most of the traditional search engines have transformed themselves into Web portals to attract and keep a larger audience. Apart from the basic search engine feature, these portals often offer services such as e-mail, news, stock prices, information and entertainment.

Portals provide a way for enterprises, research groups and other communities to give multiple applications, which would otherwise be entirely separate entities, a consistent look and feel together with common access control and procedures. In a research environment a portal integrates online scientific services into a single Web environment that can be accessed and managed from a standard Web browser. The most remarkable benefit of portals is that they simplify the interaction of users with distributed systems and with each other, because a single tool (the browser) and a standard, widely accepted network protocol (HTTP) can be used for all communication.

After the proliferation of Web browsers in the mid-1990s, many companies tried to build or acquire a portal to gain a share of the Internet market. The Web portal gained special attention because, for many users, it was the start page of their Web browser. Similarly, but somewhat later, research communities recognized the value of Web portals in integrating various services into coherent, customizable environments. Research collaborations began developing portals in the late 1990s. These environments can be broadly categorized as horizontal portals, which cover many areas, and vertical portals, which focus on one functional area. Horizontal research portals often provide services that are independent of any scientific discipline and represent generic functionality common across disciplines. Vertical portals target a specific group of researchers who are involved in the same experiment or work in the same scientific field.

Portals for distributed science

In its simplest form a scientific portal is a collection of links to external Web pages and Web services that are scattered across the Internet and aimed at scientists with similar interests. These portals often include search engines customized for the interests of the user community, e.g. for publications, job positions and news from a scientific domain. Research communities ranging from mathematics to the arts and humanities have their own portals, sometimes even multiple portals localized for different geographical regions and languages.

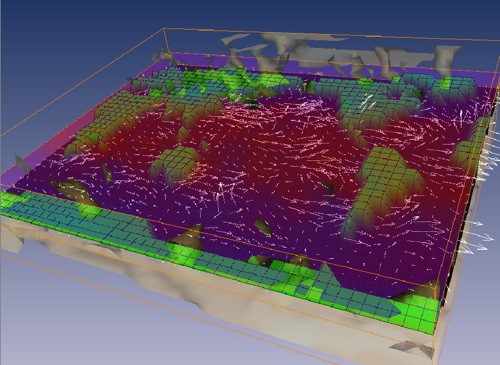

For researchers who perform massive simulations on distributed computing systems, portals are Web environments that make computing resources and services accessible via Web browsers. Such portals are typically referred to as Grid portals or science gateways. Grid portals are Web interfaces integrated with PC-, cluster- or supercomputer-based computing resources. These environments very often include high-level services that are not part of the underlying infrastructure but are implemented on the portal server instead, such as brokers, load balancers, data replica services, and data mirroring and indexing components. Together with the front-end portal, these services provide an integrated solution that enables e-scientists to deal with various aspects of data-intensive research. Usage scenarios in such portals often involve generating and storing research data, analyzing massive datasets, drawing scientific conclusions, and sharing all of these with colleagues or with the general public. Users of these portals tend to organize the frequently used steps of their simulations into reusable components called workflows. Workflows make experiments repeatable and traceable, and improve both the productivity and the quality of research.
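
A workflow of this kind is commonly modeled as a directed acyclic graph in which each step runs only after the steps it depends on. The minimal sketch below assumes that model and uses Python's standard `graphlib` module (Python 3.9 or later); the step names and the `runner` callback are hypothetical placeholders for real Grid jobs, not the interface of any particular portal.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical workflow: each step lists the steps whose output it needs.
WORKFLOW: Dict[str, Set[str]] = {
    "generate_input": set(),
    "simulate_a": {"generate_input"},
    "simulate_b": {"generate_input"},
    "analyze": {"simulate_a", "simulate_b"},
    "publish": {"analyze"},
}


def execution_order(workflow: Dict[str, Set[str]]) -> List[str]:
    """Return one order in which every step runs after all of its inputs."""
    # static_order() raises graphlib.CycleError if the workflow contains a cycle.
    return list(TopologicalSorter(workflow).static_order())


def run(workflow: Dict[str, Set[str]],
        runner: Callable[[str], str]) -> List[Tuple[str, str]]:
    """Execute the steps in dependency order and keep a trace of each result.

    The trace is what makes a run traceable; re-running the same workflow
    definition repeats the same sequence of steps."""
    return [(step, runner(step)) for step in execution_order(workflow)]


if __name__ == "__main__":
    # Stand-in runner: a real portal would submit each step as a Grid job.
    for step, result in run(WORKFLOW, lambda s: f"{s} finished"):
        print(step, "->", result)
```

Because the workflow is plain data, the same definition can be stored, shared with colleagues and re-executed, which is the reuse the paragraph above describes.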

Hardware resources, software services, applications and workflows made accessible through Grid portals are typically provided by multiple independent organizations and managed by different administrators. Portals connect to these distributed entities in a service-oriented fashion, namely through well-defined interfaces that expose the functionality of each shared component. For the sake of scalability and fault tolerance, the portal accesses these services through catalogues (registries of the available services). A typical difficulty of portal design is how to integrate the content from the dynamic services listed in the catalogue into a user-friendly view that is ergonomic and provides coherent information, yet is flexible and easily customizable for different user preferences.
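
Catalogue-based access can be sketched as a lookup from a logical service name to several replicated endpoints, with the portal failing over to the next replica when one does not answer. In the minimal sketch below the catalogue contents, the `example.org` addresses and the `call` transport function are all hypothetical; a real portal would query an actual registry service and use real network calls.

```python
from typing import Callable, Dict, List

# Hypothetical catalogue: logical service name -> replicated endpoints.
# The example.org addresses are placeholders, not real services.
CATALOGUE: Dict[str, List[str]] = {
    "data-replica": ["https://a.example.org/replica",
                     "https://b.example.org/replica"],
    "broker": ["https://broker.example.org/"],
}


class ServiceUnavailable(Exception):
    """Raised when a service is unknown or none of its endpoints responds."""


def invoke(service: str, call: Callable[[str], str],
           catalogue: Dict[str, List[str]] = CATALOGUE) -> str:
    """Resolve a service through the catalogue and fail over between replicas."""
    endpoints = catalogue.get(service)
    if not endpoints:
        raise ServiceUnavailable(f"{service!r} is not registered in the catalogue")
    failures = []
    for url in endpoints:
        try:
            return call(url)  # the first endpoint that answers is used
        except ConnectionError as exc:
            failures.append(f"{url}: {exc}")  # record the fault, try the next replica
    raise ServiceUnavailable("; ".join(failures))


if __name__ == "__main__":
    def flaky_call(url: str) -> str:
        # Simulated transport: the first replica is down.
        if "a.example.org" in url:
            raise ConnectionError("timed out")
        return f"answered by {url}"

    print(invoke("data-replica", flaky_call))
```

Keeping the collected failure messages also gives the portal something concrete to report to the user when every replica is down.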

In the early years of Grid computing, portal systems were implemented using many different programming approaches and languages. Although Grid solutions have become much simpler since then, developers of science gateways must still master a relatively large number of technologies. Portal operators must closely follow the evolution of Grid middleware services, because the portal has to be updated whenever the middleware changes. While enterprise portals also demand regular updates due to changes in back-end systems, technology evolves faster in Grid computing than in business environments. Because Grid portals shield users from middleware evolution, they are very often the only way for scientists to stay connected to Grids over a longer period of time.

There are a few other technical difficulties specific to Grid portals. One of them is how to map the security model of Grid systems to the security model of the Web. While Web portals identify and authenticate users with username-password pairs, Grids and other kinds of distributed computing environments use certificates. Certificates enable users to authenticate only once in a distributed system and then perform complex operations without being asked for account details over and over again (e.g. at every stage of the orchestration of a workflow). With certificates, users can delegate their access rights to workflow managers, brokers, catalogues and other services that perform activities on their behalf. Grid portals typically translate username-password pairs to certificates either through certificate repositories (such as MyProxy) or by importing certificates from Web browsers.
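
The log-in-once-then-delegate pattern can be illustrated with a toy model. It involves no real certificates or signatures: `ProxyCredential`, `CredentialRepository` and the subject string are hypothetical, and the repository only imitates the role a store such as MyProxy plays (exchanging a username and password for a short-lived credential), not its actual protocol or commands. The one rule the sketch enforces is that a credential delegated to a service keeps the user's identity and never outlives the credential it was derived from.

```python
import hashlib
import hmac
import os
import time
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class ProxyCredential:
    """Toy stand-in for a short-lived proxy certificate (no real cryptography)."""
    subject: str       # the user's identity, e.g. a distinguished name
    chain: str         # the services this credential has been delegated through
    expires_at: float  # absolute expiry time in seconds

    def delegate(self, service: str, lifetime: float, now: float) -> "ProxyCredential":
        """Derive a credential for a service acting on the user's behalf.

        The derived credential keeps the user's identity and never
        outlives the credential it was derived from."""
        if now >= self.expires_at:
            raise PermissionError("credential has expired")
        return ProxyCredential(self.subject, f"{self.chain} -> {service}",
                               min(self.expires_at, now + lifetime))


def _hash(password: str, salt: bytes) -> bytes:
    # Salted, deliberately slow hash so passwords are not stored in clear text.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


class CredentialRepository:
    """Hypothetical MyProxy-like store: username + password -> proxy credential."""

    def __init__(self) -> None:
        self._users: Dict[str, Tuple[bytes, bytes, str]] = {}

    def register(self, username: str, password: str, subject: str) -> None:
        salt = os.urandom(16)
        self._users[username] = (salt, _hash(password, salt), subject)

    def logon(self, username: str, password: str, lifetime: float,
              now: Optional[float] = None) -> ProxyCredential:
        """Check the password once and hand back a short-lived credential."""
        now = time.time() if now is None else now
        record = self._users.get(username)
        if record is None or not hmac.compare_digest(record[1], _hash(password, record[0])):
            raise PermissionError("invalid username or password")
        return ProxyCredential(record[2], "portal", now + lifetime)


if __name__ == "__main__":
    repo = CredentialRepository()
    repo.register("alice", "s3cret", "/O=Grid/CN=Alice")
    proxy = repo.logon("alice", "s3cret", lifetime=12 * 3600, now=0.0)
    # The broker asks for 24 h but is capped at the proxy's 12 h lifetime.
    broker = proxy.delegate("broker", lifetime=24 * 3600, now=0.0)
    print(broker.chain, broker.expires_at)  # portal -> broker 43200.0
```

The password is checked only in `logon`; every later step (broker, workflow manager, catalogue) works from delegated credentials, which is why the user is not asked for account details again.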

From the technological perspective, a portal is a dynamic Web page that consists of pluggable modules called portlets. These portlets run in a portlet container. The container performs basic functions such as managing system resources and authenticating users, while the portlets generate the actual Web interface. Portal developers realized that the reusability of portlets is key to the customizability of portals, and that the interoperability of portlets across different container platforms is an important step towards this. Around 2001 the Java Specification Request (JSR) 168 emerged as a standard that allows portal developers, administrators and consumers to integrate standards-based portals and portlets across a variety of portal containers. Most current science gateways are built from JSR-168-compliant portlets and provide easily customizable and reusable solutions for various purposes.
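
The division of labor between container and portlets can be sketched in a few lines. JSR 168 itself is a Java API (a portlet typically extends `javax.portlet.GenericPortlet`); the Python classes below are hypothetical and only mirror the idea that the container authenticates the user and aggregates the fragments that each pluggable portlet renders into one page. They are not a port of the JSR 168 interfaces.

```python
from abc import ABC, abstractmethod
from html import escape
from typing import Callable, Dict, List


class Portlet(ABC):
    """A pluggable module that renders one fragment of the portal page."""

    title = "Portlet"

    @abstractmethod
    def render(self, user: str) -> str:
        """Return an HTML fragment for an already authenticated user."""


class WelcomePortlet(Portlet):
    title = "Welcome"

    def render(self, user: str) -> str:
        return f"<p>Hello, {escape(user)}</p>"


class JobStatusPortlet(Portlet):
    title = "My jobs"

    def __init__(self, jobs: Dict[str, str]) -> None:
        self.jobs = jobs  # hypothetical job id -> status table

    def render(self, user: str) -> str:
        items = "".join(f"<li>{escape(job)}: {escape(state)}</li>"
                        for job, state in self.jobs.items())
        return f"<ul>{items}</ul>"


class PortletContainer:
    """Authenticates users and aggregates portlet fragments into one page."""

    def __init__(self, authenticate: Callable[[str, str], bool]) -> None:
        self.authenticate = authenticate  # pluggable check, e.g. against a user DB
        self.portlets: List[Portlet] = []

    def deploy(self, portlet: Portlet) -> None:
        self.portlets.append(portlet)

    def page(self, user: str, password: str) -> str:
        # Authentication is the container's job; portlets never see passwords.
        if not self.authenticate(user, password):
            raise PermissionError("authentication failed")
        sections = "".join(
            f"<section><h2>{escape(p.title)}</h2>{p.render(user)}</section>"
            for p in self.portlets)
        return f"<html><body>{sections}</body></html>"


if __name__ == "__main__":
    container = PortletContainer(lambda u, p: (u, p) == ("alice", "secret"))
    container.deploy(WelcomePortlet())
    container.deploy(JobStatusPortlet({"job-1": "RUNNING"}))
    print(container.page("alice", "secret"))
```

Customizing the portal then amounts to deploying a different set of portlets, without touching the container.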

The usefulness of Grid portal technologies for computational science is demonstrated by the number of portals being developed in Europe, the United States and Asia. In Europe the most relevant portal developer consortia have formed around the Enabling Grids for E-sciencE (EGEE) project and its national Grid counterparts. Some of these portals provide tools that are independent of scientific disciplines; others emphasize solutions that specific communities are familiar with and can use efficiently.

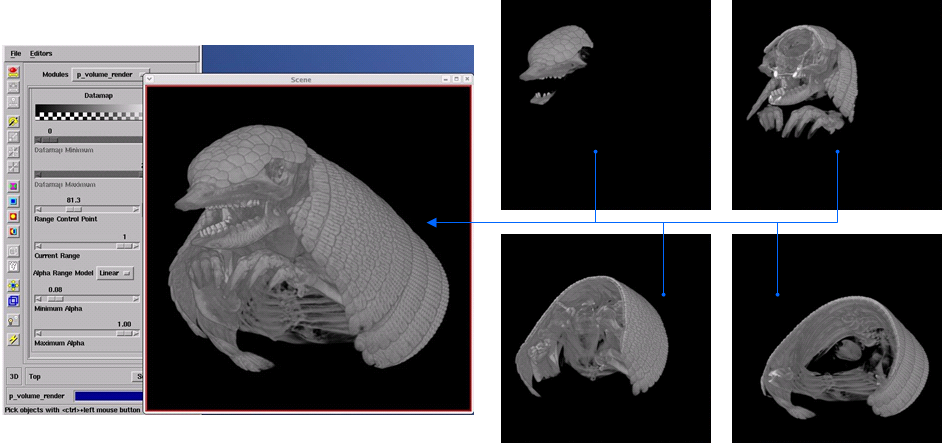

P-GRADE Portal provides facilities to create and execute computational simulations on cluster-based Grids. Various user communities of the EGEE Grid and of several European national Grids use P-GRADE Portal as a graphical front-end to manage workflow applications on their infrastructures. While P-GRADE Portal is primarily a generic environment, it can be customized to any scientific domain by deriving application-specific portals from it that grant access only to predefined, domain-specific workflows and simulations.

The NGS Applications Repository is an open-access portal used to describe and list applications, and their associated artefacts, that are available on the National Grid Service (NGS) of the UK. Applications hosted by the repository are described using middleware-agnostic documents, which can be searched by category of interest. The repository currently holds over 50 applications from fields such as bioinformatics, engineering, chemistry, astrophysics and image analysis.
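
A middleware-agnostic, category-searchable description can be sketched as a small record that says what an application does without recording how any particular middleware runs it. The field set, the application names and the case-insensitive matching rule below are assumptions made for illustration; they do not reproduce the repository's actual document schema or contents.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class ApplicationDescriptor:
    """Middleware-agnostic description of an application (hypothetical fields):
    it says what the application does, not how a given middleware runs it."""
    name: str
    summary: str
    categories: FrozenSet[str]


def search(repository: List[ApplicationDescriptor], category: str) -> List[str]:
    """Return the sorted names of applications tagged with a category."""
    wanted = category.casefold()  # case-insensitive match
    return sorted(app.name for app in repository
                  if wanted in {c.casefold() for c in app.categories})


# Hypothetical entries; the real repository's contents are not reproduced here.
REPOSITORY = [
    ApplicationDescriptor("seq-align", "Pairwise sequence alignment",
                          frozenset({"Bioinformatics"})),
    ApplicationDescriptor("mol-dyn", "Molecular dynamics simulation",
                          frozenset({"Chemistry", "Bioinformatics"})),
    ApplicationDescriptor("cfd-solver", "Fluid flow simulation",
                          frozenset({"Engineering"})),
]

if __name__ == "__main__":
    print(search(REPOSITORY, "bioinformatics"))  # ['mol-dyn', 'seq-align']
```

An application may carry several categories, so it can be found from more than one field of interest.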

The concept of content aggregation still seems to be gaining momentum, and portal solutions will likely continue to evolve significantly over the next few years. The Gartner Group recently predicted a further expansion of the business mashup concept of delivering a variety of information, tools, applications and access points through a single mechanism. Mashups are Web applications that combine data or functionality from two or more sources into a single integrated application. The term mashup implies easy, fast integration, frequently achieved by accessing open programming interfaces and data sources to produce results that were not the original reason for producing the raw source data. An example of a mashup is the use of cartographic data from Google Maps to add location information to real estate data, thereby creating a new and distinct Web service that was not originally provided by either source.
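
The core of the real estate example is a join between two independent sources. In the minimal sketch below both sources are hypothetical in-memory stand-ins and the coordinates are illustrative; a real mashup would fetch listings and locations through the providers' own interfaces, which are not modeled here.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Coordinates = Tuple[float, float]  # (latitude, longitude)


@dataclass(frozen=True)
class Listing:
    address: str
    price: int


# Source 1 (hypothetical): real estate listings.
LISTINGS = [Listing("1 Main Street", 250_000), Listing("9 Hill Road", 410_000)]

# Source 2 (hypothetical): a geocoding lookup; the coordinates are illustrative.
GEOCODES: Dict[str, Coordinates] = {"1 Main Street": (51.5007, -0.1246)}


def mashup(listings: List[Listing],
           geocode: Callable[[str], Optional[Coordinates]]) -> List[dict]:
    """Join both sources into records that neither source offers on its own."""
    return [{"address": item.address,
             "price": item.price,
             "location": geocode(item.address)}  # None if the address is unknown
            for item in listings]


if __name__ == "__main__":
    for record in mashup(LISTINGS, GEOCODES.get):
        print(record)
```

Passing the geocoder in as a function keeps the two sources decoupled, so either one can be swapped for another provider.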

Programmers of Grid and high-performance computing portals still often find it hard to bridge user-friendly Web interfaces and the low-level services of Grid middleware. Errors and faults sent back from the Grid are often difficult to interpret and to handle automatically, yet easy-to-use and autonomous portals are essential for attracting larger user communities to Grids. Grid portals can therefore be expected to improve in this respect in the near future.

M. Thomas, J. Burruss, L. Cinquini, G. Fox, D. Gannon, I. Gilbert, G. von Laszewski, K. Jackson, D. Middleton, R. Moore, M. Pierce, B. Plale, A. Rajasekar, R. Regno, E. Roberts, D. Schissel, A. Seth and W. Schroeder: Grid Portal Architectures for Scientific Applications. Journal of Physics, 16, pp. 596-600, 2005.

“Web portal” entry in Wikipedia: http://en.wikipedia.org/wiki/Web_portal, last accessed 06/06/2009

Enabling Grids for E-sciencE project (EGEE): http://www.eu-egee.org/

P. Kacsuk and G. Sipos: Multi-Grid, Multi-User Workflows in the P-GRADE Portal. Journal of Grid Computing, Vol. 3, No. 3-4, Springer Publishers, pp. 221-238, 2005.

NGS Job Submission Portal: https://portal.ngs.ac.uk/, last accessed 06/06/2009

“Mashup” entry in Wikipedia: http://en.wikipedia.org/wiki/Mashup_(web_application_hybrid), last accessed 06/06/2009