At this point, the reader may have already guessed that the answer is yes if the Markov chain is regular. We illustrate with the following example from the section called “Markov Chains”.
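A Markov chain is called regular when some power of its transition matrix has only positive entries. The sketch below checks this in plain Python with exact fractions. The helper names `mat_mul` and `is_regular` are hypothetical. The search limit $(n-1)^2+1$ for an $n \times n$ matrix is Wielandt's bound for primitive matrices. The check is applied to the Mama Bell/Papa Bell matrix from the example that follows.

```python
from fractions import Fraction

def mat_mul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def is_regular(t):
    """Return True if some power of the square matrix t has only positive entries.

    For an n-by-n matrix it suffices to check the powers t, t^2, ..., t^((n-1)^2 + 1).
    """
    n = len(t)
    power = t
    for _ in range((n - 1) ** 2 + 1):
        if all(entry > 0 for row in power for entry in row):
            return True
        power = mat_mul(power, t)
    return False

# Rows/columns ordered (Mama Bell, Papa Bell).
T = [[Fraction(6, 10), Fraction(4, 10)],
     [Fraction(3, 10), Fraction(7, 10)]]
print(is_regular(T))                  # True: T itself has only positive entries
print(is_regular([[0, 1], [1, 0]]))   # False: the powers alternate and always contain zeros
```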

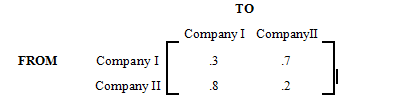

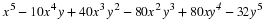

A small town is served by two telephone companies, Mama Bell and Papa Bell. Due to their aggressive sales tactics, each month 40% of Mama Bell customers switch to Papa Bell, while the other 60% stay with Mama Bell. On the other hand, 30% of Papa Bell customers switch to Mama Bell, and the other 70% stay with Papa Bell. The transition matrix is given below.

If the initial market share for Mama Bell is 20% and for Papa Bell 80%, we'd like to know the long-term market share for each company.

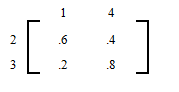

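Let $T$ denote the transition matrix for this Markov chain, and let $M$ denote the matrix that represents the initial market share. Listing Mama Bell first and Papa Bell second, $T$ and $M$ are as follows:

$$T = \begin{bmatrix} .60 & .40 \\ .30 & .70 \end{bmatrix}$$

and

$$M = \begin{bmatrix} .20 & .80 \end{bmatrix}.$$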
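One way to encode $T$ and $M$ in plain Python is shown below. It uses `fractions.Fraction` so the arithmetic stays exact. The layout (lists of rows, Mama Bell first) is an assumption of this sketch. The assertions check the properties that any transition matrix and initial distribution must have.

```python
from fractions import Fraction

# Row i lists the probabilities of moving from company i to each company,
# with companies ordered (Mama Bell, Papa Bell).
T = [[Fraction(6, 10), Fraction(4, 10)],   # Mama Bell: 60% stay, 40% switch
     [Fraction(3, 10), Fraction(7, 10)]]   # Papa Bell: 30% switch, 70% stay

# Initial market share as a row vector: 20% Mama Bell, 80% Papa Bell.
M = [Fraction(2, 10), Fraction(8, 10)]

# Entries must be nonnegative and each row of T must sum to 1;
# the initial distribution must also sum to 1.
assert all(p >= 0 for row in T for p in row)
assert all(sum(row) == 1 for row in T)
assert sum(M) == 1
print("T and M are valid")
```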

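Since each month the townspeople switch according to the transition matrix $T$, after one month the distribution for each company is as follows:

$$MT = \begin{bmatrix} .20 & .80 \end{bmatrix}\begin{bmatrix} .60 & .40 \\ .30 & .70 \end{bmatrix} = \begin{bmatrix} .20(.60) + .80(.30) & .20(.40) + .80(.70) \end{bmatrix} = \begin{bmatrix} .36 & .64 \end{bmatrix}$$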
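The one-month computation multiplies a row vector by a matrix. Each new share adds up, over both companies, the old share times the probability of moving into that company. Below is a minimal sketch in plain Python with exact fractions. The helper `step` is a hypothetical name.

```python
from fractions import Fraction

T = [[Fraction(6, 10), Fraction(4, 10)],
     [Fraction(3, 10), Fraction(7, 10)]]
M = [Fraction(2, 10), Fraction(8, 10)]

def step(dist, t):
    """Multiply the row vector dist by the transition matrix t (one month)."""
    return [sum(dist[i] * t[i][j] for i in range(len(dist))) for j in range(len(t[0]))]

after_one = step(M, T)
print([float(x) for x in after_one])  # [0.36, 0.64]
```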

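After two months, the market share for each company is

$$(MT)T = MT^2 = \begin{bmatrix} .36 & .64 \end{bmatrix}\begin{bmatrix} .60 & .40 \\ .30 & .70 \end{bmatrix} = \begin{bmatrix} .408 & .592 \end{bmatrix}$$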

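After three months the distribution is

$$MT^3 = \begin{bmatrix} .408 & .592 \end{bmatrix}\begin{bmatrix} .60 & .40 \\ .30 & .70 \end{bmatrix} = \begin{bmatrix} .4224 & .5776 \end{bmatrix}$$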

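After four months the market share is

$$MT^4 = \begin{bmatrix} .4224 & .5776 \end{bmatrix}\begin{bmatrix} .60 & .40 \\ .30 & .70 \end{bmatrix} = \begin{bmatrix} .42672 & .57328 \end{bmatrix}$$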
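Each later month applies the same step to the previous month's distribution, so the share after $n$ months is $MT^n$. The sketch below repeats a hypothetical `step` helper for months 1 through 4, using exact fractions.

```python
from fractions import Fraction

T = [[Fraction(6, 10), Fraction(4, 10)],
     [Fraction(3, 10), Fraction(7, 10)]]
M = [Fraction(2, 10), Fraction(8, 10)]

def step(dist, t):
    """One month of switching: the row vector dist times the matrix t."""
    return [sum(dist[i] * t[i][j] for i in range(len(dist))) for j in range(len(t[0]))]

# history[n] holds the distribution M T^n.
history = [M]
for month in range(1, 5):
    history.append(step(history[-1], T))
    mama, papa = history[-1]
    print(f"month {month}: Mama Bell {float(mama):.5f}, Papa Bell {float(papa):.5f}")
```

It prints Mama Bell shares of 0.36000, 0.40800, 0.42240 and 0.42672, with Papa Bell holding the rest.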

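After 30 months the market share is

$$MT^{30} \approx \begin{bmatrix} .4286 & .5714 \end{bmatrix} \approx \begin{bmatrix} 3/7 & 4/7 \end{bmatrix}.$$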
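The same loop can run for 30 months to show how close the shares come to $3/7$ and $4/7$. Because this sketch keeps exact fractions, it can report the remaining gap precisely instead of hiding it in floating-point rounding. The helper `step` is again a hypothetical name.

```python
from fractions import Fraction

T = [[Fraction(6, 10), Fraction(4, 10)],
     [Fraction(3, 10), Fraction(7, 10)]]
M = [Fraction(2, 10), Fraction(8, 10)]

def step(dist, t):
    """One month of switching: the row vector dist times the matrix t."""
    return [sum(dist[i] * t[i][j] for i in range(len(dist))) for j in range(len(t[0]))]

# M T^30, computed by applying T thirty times (matrix multiplication is associative).
dist = M
for _ in range(30):
    dist = step(dist, T)

# Largest distance from the limiting shares 3/7 and 4/7, as an exact fraction.
target = [Fraction(3, 7), Fraction(4, 7)]
gap = max(abs(d - e) for d, e in zip(dist, target))
print([round(float(x), 4) for x in dist])  # [0.4286, 0.5714]
print(float(gap))                          # roughly 4.7e-17
```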

The market share after 30 months has stabilized to

$$\begin{bmatrix} 3/7 & 4/7 \end{bmatrix}.$$
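A short derivation shows why the shares settle so quickly. It is added here as an illustration and is not part of the original example. Let $x_n$ be Mama Bell's share after $n$ months, so Papa Bell's share is $1 - x_n$ and $x_0 = .20$. One month of switching gives

$$x_{n+1} = .60\,x_n + .30\,(1 - x_n) = .30 + .30\,x_n .$$

The value $x = 3/7$ satisfies $x = .30 + .30\,x$. Subtracting it from both sides gives

$$x_{n+1} - \tfrac{3}{7} = .30\left(x_n - \tfrac{3}{7}\right), \qquad\text{hence}\qquad x_n - \tfrac{3}{7} = (.30)^n\left(.20 - \tfrac{3}{7}\right) = -\tfrac{8}{35}\,(.30)^n .$$

The gap shrinks by a factor of .30 each month. After 30 months it is about $4.7 \times 10^{-17}$, which is why $MT^{30}$ is indistinguishable from $\begin{bmatrix} 3/7 & 4/7 \end{bmatrix}$.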

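This means that

$$\begin{bmatrix} 3/7 & 4/7 \end{bmatrix}\begin{bmatrix} .60 & .40 \\ .30 & .70 \end{bmatrix} = \begin{bmatrix} 3/7 & 4/7 \end{bmatrix}.$$

Once the market share reaches an equilibrium state, it stays the same. That is, if $E$ denotes the equilibrium market share, then

$$ET = E.$$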
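The equilibrium can also be found directly, without iterating. Write $E = \begin{bmatrix} e & 1-e \end{bmatrix}$. Then $ET = E$ gives $e = .60e + .30(1-e)$, so $.70e = .30$ and $e = 3/7$. The sketch below does the same for any regular chain by solving $ET = E$ together with the condition that the entries of $E$ add to 1. It uses Gauss-Jordan elimination on exact fractions. The function names `equilibrium` and `step` are hypothetical. The sketch assumes the chain is regular, so the solution is unique.

```python
from fractions import Fraction

def step(dist, t):
    """Multiply the row vector dist by the transition matrix t."""
    return [sum(dist[i] * t[i][j] for i in range(len(dist))) for j in range(len(t[0]))]

def equilibrium(t):
    """Return the row vector E with E t = E and entries summing to 1.

    Equation j of E (T - I) = 0 reads sum_i E_i * (t[i][j] - [i == j]) = 0.
    One of these equations is redundant, so the last one is replaced by
    E_1 + ... + E_n = 1. Assumes a regular chain (unique solution).
    """
    n = len(t)
    # Augmented rows [coefficients | right-hand side].
    rows = [[Fraction(t[i][j]) - (1 if i == j else 0) for i in range(n)] + [Fraction(0)]
            for j in range(n)]
    rows[-1] = [Fraction(1)] * n + [Fraction(1)]
    # Gauss-Jordan elimination with exact arithmetic.
    for col in range(n):
        pivot = next(r for r in range(col, n) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        p = rows[col][col]
        rows[col] = [x / p for x in rows[col]]
        for r in range(n):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [x - factor * y for x, y in zip(rows[r], rows[col])]
    return [row[n] for row in rows]

T = [[Fraction(6, 10), Fraction(4, 10)],
     [Fraction(3, 10), Fraction(7, 10)]]
E = equilibrium(T)
print([str(x) for x in E])   # ['3/7', '4/7']
print(step(E, T) == E)       # True: another month leaves E unchanged
```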

This helps us answer the next question.