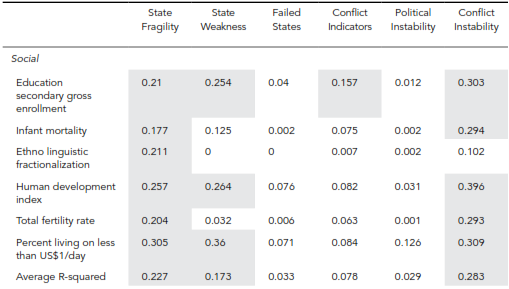

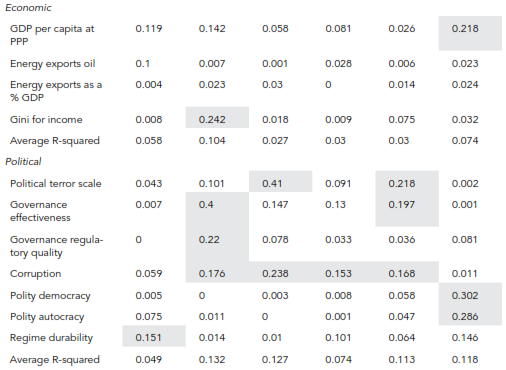

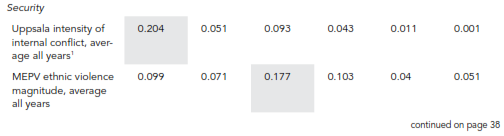

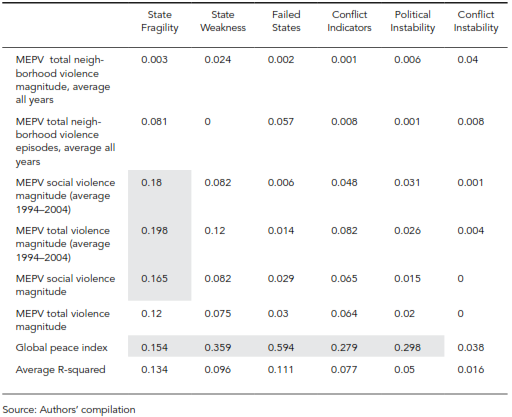

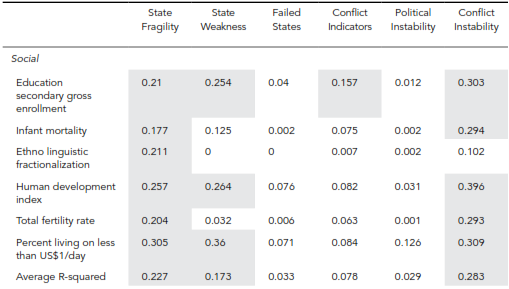

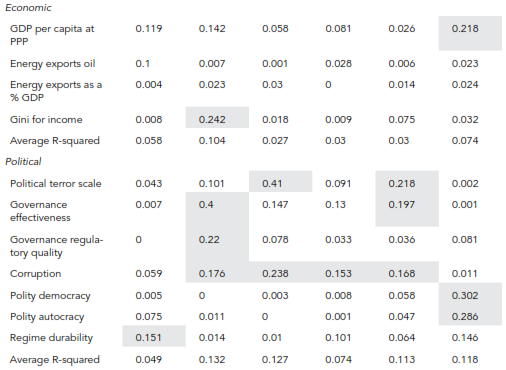

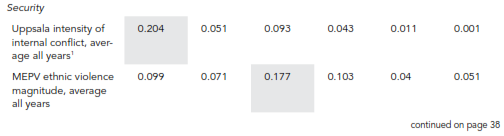

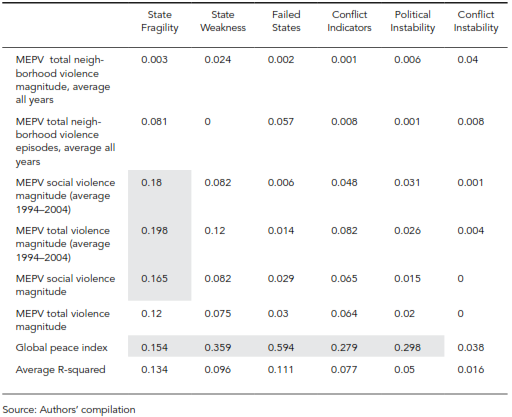

Table 7. Fragile States and Development Indicators

1 The onset of conflict is a discrete event; we averaged this variable across time to produce a longer-term conflict propensity, rather than simply relying on the most recent year. Theoretically, a country could have had conflict for many years excepting the most recent one. In other instances we looked, somewhat arbitrarily, at a block of eleven years (1994-2004).

Note: MEPF security measures are the Major Episodes of Political Violence series of the George Mason Center for Systemic Peace.

Note: Shaded entries in the table represent adjusted R-squared values of 0.15 or higher.

to which the Maryland and EIU measures stand out from the other four and each other. The Maryland CIL clearly emphasizes a smaller subset of the very most vulnerable, and the EIU Political Instability Index somewhat surprised us with the extent to which the countries it rates considerably more vulnerable than other indices rate them exhibit economic and especially financial vulnerability, which is not what we would consider vulnerability to destabilizing conflict. The Fund for Peace measure emerges in this analysis as more attentive than others to strong and potentially repressive states, or at least to those exhibiting conflict, ostensibly as reported by news feeds (this measure is the least transparent of all).

We took a bit of a digression with respect to comparison across countries and considered what the spectrum of vulnerability might show us in relationship to other variables and across time. We found a strong, curvilinear relationship with GDP per capita and an interesting connection between dependence on energy exports and membership in the fragile set of the spectrum.

The fourth and final approach to comparison moved us into a much more extensive consideration of specific variables related to vulnerability and their correlations with the six indices.

We looked at the variables once again in the four standard categories. This analysis was productive and yielded these findings:

- The George Mason State Fragility Index relates most strongly to social variables, especially poverty and human development, and to internal conflict. It gives some attention to regime durability but in general does not relate strongly to economic or political variables.

- Although the overall highest correlations are with social and mostly human development variables, and not with social divisions, the Brookings Index of State Weakness has probably the most balanced coverage across all categories, consistent with its transparent identification with standard variables in each.

- The Fund for Peace's Failed State Index, the least transparent, clearly taps political terror and the content of the Global Peace Index (domestic and international violence) very strongly. It also picks up corruption fairly strongly. But, contrary to some measures of fragility, it does not relate to social variables. Because it uses news feeds, generally sensitive to events rather than levels of variables, this should perhaps not surprise us. Overall, the measure may be influenced fairly heavily by state delegitimization.

- The Carleton University Fragile States Index is generally balanced, like Brookings, but rather surprisingly its correlations with specific measures are not very strong, the Global Peace Index being an exception.

- The Economist Intelligence Unit's Political Instability Index reflects primarily political variables and the Global Peace Index in the security category. We have not included the immediate economic condition variables, such as unemployment or the most recent year's GDP growth, that might tie it to the economic category; these are important to its structure.

- The University of Maryland's Conflict Instability Ledger is most strongly related to the social variables: its average correlation with the variables in that category is the highest average correlation of all indices across all categories. Secondarily, it relates to the political category, notably to the Polity variables of democracy and autocracy. As noted in other analysis, it does not link directly to security variables, either existing or recent conflict.
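The category-level comparison behind these findings can be illustrated with a minimal sketch. It assumes that an index's relationship to a category is summarized as the mean absolute Pearson correlation between the index and each variable in that category; taking absolute values (so that opposite signs do not cancel) is our assumption, not a documented step of the analysis. The function names, variable names, and numbers below are hypothetical toy values, not data from this study.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

def average_abs_correlation_by_category(index_scores, variables, categories):
    """Mean absolute correlation of one index with the variables in each category."""
    grouped = {}
    for name, values in variables.items():
        r = pearson(index_scores, values)
        grouped.setdefault(categories[name], []).append(abs(r))
    return {cat: sum(rs) / len(rs) for cat, rs in grouped.items()}

# Hypothetical toy data: one index scored for five countries.
index_scores = [1.0, 2.0, 3.0, 4.0, 5.0]
variables = {
    "poverty_rate": [10.0, 20.0, 30.0, 40.0, 50.0],
    "life_expectancy": [80.0, 70.0, 60.0, 50.0, 40.0],
    "polity_score": [1.0, 3.0, 2.0, 5.0, 4.0],
}
categories = {
    "poverty_rate": "social",
    "life_expectancy": "social",
    "polity_score": "political",
}

result = average_abs_correlation_by_category(index_scores, variables, categories)
for category, value in sorted(result.items()):
    print(f"{category}: {value:.2f}")
```

In this toy case the index tracks the social variables more closely than the political one, which is the kind of contrast the findings above describe for individual indices.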

One of the somewhat surprising overall conclusions of the analysis with this fourth approach is the differentiation of the George Mason and Brookings measures of fragility from the Fund for Peace measure and even the Carleton measure.

Although this analysis can considerably help us in understanding the similarities and differences of conflict vulnerability indices, it cannot and does not attempt to identify the intrinsically or methodologically 'best' measure or measures. It does, however, allow those who use these findings to better understand the ways in which the principal validity constructs of different projects affect the ways in which lists of vulnerable states are generated and how some apparent discrepancies, such as the ranking of Tanzania in the CIL project, might occur.

One might argue that measures producing country-specific assessments and overall rankings closest to the average across multiple measures have an inherent advantage. One foundation for such argument could be that such measures are likely to have more comprehensively included variables across the four categories of analysis. Yet it is also possible, even probable, that some variables and some categories are more important than others, so that universal inclusion (generally with equal weighting) is not necessarily superior to greater selectivity. Moreover, some variables could find their way into multiple measures simply because of their ready availability, such as life expectancy or current conflict, rather than demonstrated forecasting power.
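To make "closest to the average across multiple measures" concrete, the sketch below computes a consensus rank for each country as the mean of its ranks across indices, then scores each index by its mean absolute deviation from that consensus. This is one possible operationalization under our own assumptions; the index names, country labels, and ranks are hypothetical placeholders, and equal weighting of indices is itself an assumption of the kind questioned above.

```python
def mean_ranks(rankings):
    """Average rank of each country across all indices (equal weights)."""
    countries = next(iter(rankings.values())).keys()
    return {
        c: sum(ranks[c] for ranks in rankings.values()) / len(rankings)
        for c in countries
    }

def deviation_from_consensus(rankings):
    """Mean absolute gap between each index's ranks and the consensus ranks."""
    consensus = mean_ranks(rankings)
    return {
        name: sum(abs(ranks[c] - consensus[c]) for c in consensus) / len(consensus)
        for name, ranks in rankings.items()
    }

# Hypothetical ranks (1 = most vulnerable) from three made-up indices.
rankings = {
    "index_a": {"X": 1, "Y": 2, "Z": 3},
    "index_b": {"X": 1, "Y": 3, "Z": 2},
    "index_c": {"X": 3, "Y": 1, "Z": 2},
}

deviations = deviation_from_consensus(rankings)
closest = min(deviations, key=deviations.get)
print(closest, round(deviations[closest], 3))
```

A small deviation shows only that an index agrees with the others; as the argument above notes, it does not show that the index forecasts conflict better.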

Another argument for looking at the central tendency of multiple measures could be that such measures may be capturing the collective wisdom of common-thinking analysts. We argue, however, that it would better serve users to be very aware and appreciative of the underlying concepts of vulnerability that these works seek to evaluate. Whether the underlying concern is state failure, fragility, political risk, or some other variant makes a very considerable difference in how these projects determine indicators, score cases, and report results. Thus their use in practical applications, such as early warning, should be closely attentive to the underlying conceptualization.

As a result, this study, like any other that has broken new ground, also sets the stage for future analysis, including exploration of the relationship between vulnerability measures and subsequent materialization or onset of conflict. Such understanding of predictive power would obviously be of great value.