choice because a loop of small transforms is always required. Is

it better to implement a hard-coded FFT of size 64, for example,

or an unrolled loop of four size-16 FFTs, both of which operate

on the same amount of data? The former should be more efficient

because it performs more computations with the same amount of

data, thanks to the logn factor in the FFT’s nlogn complexity.)

10One practical difficulty is that some “optimizing” compilers will tend to

greatly re-order the code, destroying FFTW’s optimal schedule. With GNU

gcc, we circumvent this problem by using compiler flags that explicitly disable certain stages of the optimizer.

Available for free at Connexions

<http://cnx.org/content/col10683/1.5>

177

In addition, there are many other techniques that FFTW employs

to supplement the basic recursive strategy, mainly to address the

fact that cache implementations strongly favor accessing consec-

utive data—thanks to cache lines, limited associativity, and direct

mapping using low-order address bits (accessing data at power-of-

two intervals in memory, which is distressingly common in FFTs,

is thus especially prone to cache-line conflicts). Unfortunately, the

known FFT algorithms inherently involve some non-consecutive

access (whether mixed with the computation or in separate bit-

reversal/transposition stages). There are many optimizations in

FFTW to address this. For example, the data for several butter-

flies at a time can be copied to a small buffer before computing

and then copied back, where the copies and computations involve

more consecutive access than doing the computation directly in-

place. Or, the input data for the subtransform can be copied from

(discontiguous) input to (contiguous) output before performing the

subtransform in-place (see "Indirect plans" (Section 11.5.2.4: Indirect plans)), rather than performing the subtransform directly out-

of-place (as in algorithm 1 (p. ??)). Or, the order of loops can

be interchanged in order to push the outermost loop from the first

radix step [the 2 loop in (11.2)] down to the leaves, in order to

make the input access more consecutive (see "Discussion" (Sec-

tion 11.5.2.6: Discussion)). Or, the twiddle factors can be com-

puted using a smaller look-up table (fewer memory loads) at the

cost of more arithmetic (see "Numerical Accuracy in FFTs" (Sec-

tion 11.7: Numerical Accuracy in FFTs)). The choice of whether

to use any of these techniques, which come into play mainly for

moderate n (213 < n < 220), is made by the self-optimizing planner

as described in the next section.

Available for free at Connexions

<http://cnx.org/content/col10683/1.5>

CHAPTER 11. IMPLEMENTING FFTS IN

178

PRACTICE

11.5 Adaptive Composition of FFT Algo-

rithms

As alluded to several times already, FFTW implements a wide va-

riety of FFT algorithms (mostly rearrangements of Cooley-Tukey)

and selects the “best” algorithm for a given n automatically. In this

section, we describe how such self-optimization is implemented,

and especially how FFTW’s algorithms are structured as a com-

position of algorithmic fragments. These techniques in FFTW are

described in greater detail elsewhere [134], so here we will focus

only on the essential ideas and the motivations behind them.

An FFT algorithm in FFTW is a composition of algorithmic steps

called a plan. The algorithmic steps each solve a certain class of

problems (either solving the problem directly or recursively break-

ing it into sub-problems of the same type). The choice of plan for

a given problem is determined by a planner that selects a compo-

sition of steps, either by runtime measurements to pick the fastest

algorithm, or by heuristics, or by loading a pre-computed plan.

These three pieces: problems, algorithmic steps, and the planner,

are discussed in the following subsections.

11.5.1 The problem to be solved

In early versions of FFTW, the only choice made by the planner

was the sequence of radices [131], and so each step of the plan took

a DFT of a given size n, possibly with discontiguous input/output,

and reduced it (via a radix r) to DFTs of size n/r, which were

solved recursively. That is, each step solved the following prob-

lem: given a size n, an input pointer I, an input stride ι, an out-

put pointer O, and an output stride o, it computed the DFT of

I [ ι] for 0 ≤

< n and stored the result in O [ko] for 0 ≤ k < n.

However, we soon found that we could not easily express many in-

teresting algorithms within this framework; for example, in-place

Available for free at Connexions

<http://cnx.org/content/col10683/1.5>

179

(I = O) FFTs that do not require a separate bit-reversal stage [195],

[375], [297], [166]. It became clear that the key issue was not the

choice of algorithms, as we had first supposed, but the definition of

the problem to be solved. Because only problems that can be ex-

pressed can be solved, the representation of a problem determines

an outer bound to the space of plans that the planner can explore,

and therefore it ultimately constrains FFTW’s performance.

The difficulty with our initial (n, I, ι, O, o) problem definition was

that it forced each algorithmic step to address only a single DFT. In

fact, FFTs break down DFTs into multiple smaller DFTs, and it is

the combination of these smaller transforms that is best addressed

by many algorithmic choices, especially to rearrange the order of

memory accesses between the subtransforms. Therefore, we rede-

fined our notion of a problem in FFTW to be not a single DFT, but

rather a loop of DFTs, and in fact multiple nested loops of DFTs.

The following sections describe some of the new algorithmic steps

that such a problem definition enables, but first we will define the

problem more precisely.

DFT problems in FFTW are expressed in terms of structures called

I/O tensors,11 which in turn are described in terms of ancillary

structures called I/O dimensions. An I/O dimension d is a triple

d = (n, ι, o), where n is a non-negative integer called the length,

ι is an integer called the input stride, and o is an integer called

the output stride. An I/O tensor t = {d1, d2, ..., d } is a set of I/O

ρ

dimensions. The non-negative integer ρ = |t| is called the rank

of the I/O tensor. A DFT problem, denoted by d f t (N, V, I, O),

consists of two I/O tensors N and V, and of two pointers I and

O. Informally, this describes |V| nested loops of |N|-dimensional

DFTs with input data starting at memory location I and output data

starting at O.

11I/O tensors are unrelated to the tensor-product notation used by some other authors to describe FFT algorithms [389], [296].

Available for free at Connexions

<http://cnx.org/content/col10683/1.5>

CHAPTER 11. IMPLEMENTING FFTS IN

180

PRACTICE

For simplicity, let us consider only one-dimensional DFTs, so that

N = {(n, ι, o)} implies a DFT of length n on input data with stride

ι and output data with stride o, much like in the original FFTW as

described above. The main new feature is then the addition of zero

or more “loops” V. More formally, d f t (N, {(n, ι, o)} ∪ V, I, O)

is recursively defined as a “loop” of n problems: for all 0 ≤ k <

n, do all computations in d f t (N, V, I + k · ι, O + k · o). The case

of multi-dimensional DFTs is defined more precisely elsewhere

[134], but essentially each I/O dimension in N gives one dimen-

sion of the transform.

We call N the size of the problem. The rank of a problem is de-

fined to be the rank of its size (i.e., the dimensionality of the DFT).

Similarly, we call V the vector size of the problem, and the vector

rank of a problem is correspondingly defined to be the rank of its

vector size. Intuitively, the vector size can be interpreted as a set

of “loops” wrapped around a single DFT, and we therefore refer to

a single I/O dimension of V as a vector loop. (Alternatively, one

can view the problem as describing a DFT over a |V|-dimensional

vector space.) The problem does not specify the order of execution

of these loops, however, and therefore FFTW is free to choose the

fastest or most convenient order.

11.5.1.1 DFT problem examples

A more detailed discussion of the space of problems in FFTW can

be found in [134] , but a simple understanding can be gained by

examining a few examples demonstrating that the I/O tensor repre-

sentation is sufficiently general to cover many situations that arise

in practice, including some that are not usually considered to be

instances of the DFT.

A single one-dimensional DFT of length n, with stride-1 in-

put X and output Y, as in (11.1), is denoted by the problem

d f t ({(n, 1, 1)}, {}, X, Y) (no loops: vector-rank zero).

Available for free at Connexions

<http://cnx.org/content/col10683/1.5>

181

As a more complicated example, suppose we have an n1 × n2

matrix X stored as n1 consecutive blocks of contiguous length-

n2 rows (this is called row-major format). The in-place DFT

of all the rows of this matrix would be denoted by the prob-

lem d f t ({(n2, 1, 1)}, {(n1, n2, n2)}, X, X): a length-n1 loop of size-

n2 contiguous DFTs, where each iteration of the loop offsets

its input/output data by a stride n2.

Conversely, the in-place

DFT of all the columns of this matrix would be denoted by

d f t ({(n1, n2, n2)}, {(n2, 1, 1)}, X, X): compared to the previous

example, N and V are swapped. In the latter case, each DFT

operates on discontiguous data, and FFTW might well choose to

interchange the loops: instead of performing a loop of DFTs com-

puted individually, the subtransforms themselves could act on n2-

component vectors, as described in "The space of plans in FFTW"

(Section 11.5.2: The space of plans in FFTW).

A size-1 DFT is simply a copy Y [0] = X [0], and here this can

also be denoted by N = {} (rank zero, a “zero-dimensional”

DFT). This allows FFTW’s problems to represent many kinds

of copies and permutations of the data within the same prob-

lem framework, which is convenient because these sorts of

operations arise frequently in FFT algorithms.

For exam-

ple, to copy n consecutive numbers from I to O, one would

use the rank-zero problem d f t ({}, {(n, 1, 1)}, I, O).

More in-

terestingly, the in-place transpose of an n1 × n2 matrix X

stored in row-major format, as described above, is denoted

by d f t ({}, {(n1, n2, 1) , (n2, 1, n1)}, X, X) (rank zero, vector-rank

two).

11.5.2 The space of plans in FFTW

Here, we describe a subset of the possible plans considered by

FFTW; while not exhaustive [134], this subset is enough to illus-

trate the basic structure of FFTW and the necessity of including

the vector loop(s) in the problem definition to enable several inter-

Available for free at Connexions

<http://cnx.org/content/col10683/1.5>

CHAPTER 11. IMPLEMENTING FFTS IN

182

PRACTICE

esting algorithms. The plans that we now describe usually perform

some simple “atomic” operation, and it may not be apparent how

these operations fit together to actually compute DFTs, or why cer-

tain operations are useful at all. We shall discuss those matters in

"Discussion" (Section 11.5.2.6: Discussion).

Roughly speaking, to solve a general DFT problem, one must per-

form three tasks. First, one must reduce a problem of arbitrary vec-

tor rank to a set of loops nested around a problem of vector rank

0, i.e., a single (possibly multi-dimensional) DFT. Second, one

must reduce the multi-dimensional DFT to a sequence of of rank-1

problems, i.e., one-dimensional DFTs; for simplicity, however, we

do not consider multi-dimensional DFTs below. Third, one must

solve the rank-1, vector rank-0 problem by means of some DFT

algorithm such as Cooley-Tukey. These three steps need not be

executed in the stated order, however, and in fact, almost every

permutation and interleaving of these three steps leads to a correct

DFT plan. The choice of the set of plans explored by the planner is

critical for the usability of the FFTW system: the set must be large

enough to contain the fastest possible plans, but it must be small

enough to keep the planning time acceptable.

11.5.2.1 Rank-0 plans

The rank-0 problem d f t ({}, V, I, O) denotes a permutation of the

input array into the output array. FFTW does not solve arbitrary

rank-0 problems, only the following two special cases that arise in

practice.

• When |V| = 1 and I = O, FFTW produces a plan that copies

the input array into the output array.

Depending on the

strides, the plan consists of a loop or, possibly, of a call to

the ANSI C function ♠❡♠❝♣②, which is specialized to copy

contiguous regions of memory.

• When |V| = 2, I = O, and the strides denote a matrix-

transposition problem, FFTW creates a plan that transposes

Available for free at Connexions

<http://cnx.org/content/col10683/1.5>

183

the array in-place. FFTW implements the square transposi-

tion d f t ({}, {(n, ι, o) , (n, o, ι)}, I, O) by means of the cache-

oblivious algorithm from [137], which is fast and, in theory,

uses the cache optimally regardless of the cache size (using

principles similar to those described in the section "FFTs and

the Memory Hierarchy" (Section 11.4: FFTs and the Mem-

ory Hierarchy)). A generalization of this idea is employed

for non-square transpositions with a large common factor or

a small difference between the dimensions, adapting algo-

rithms from [100].

11.5.2.2 Rank-1 plans

Rank-1 DFT problems denote ordinary one-dimensional Fourier

transforms. FFTW deals with most rank-1 problems as follows.

11.5.2.2.1 Direct plans

When the DFT rank-1 problem is “small enough” (usually, n ≤ 64),

FFTW produces a direct plan that solves the problem directly.

These plans operate by calling a fragment of C code (a codelet)

specialized to solve problems of one particular size, whose genera-

tion is described in "Generating Small FFT Kernels" (Section 11.6:

Generating Small FFT Kernels). More precisely, the codelets com-

pute a loop (|V| ≤ 1) of small DFTs.

11.5.2.2.2 Cooley-Tukey plans

For problems of the form d f t ({(n, ι, o)}, V, I, O) where n = rm,

FFTW generates a plan that implements a radix-r Cooley-Tukey

algorithm "Review of the Cooley-Tukey FFT" (Section 11.2: Re-

view of the Cooley-Tukey FFT). Both decimation-in-time and

decimation-in-frequency plans are supported, with both small fixed

Available for free at Connexions

<http://cnx.org/content/col10683/1.5>

CHAPTER 11. IMPLEMENTING FFTS IN

184

PRACTICE

radices (usually, r ≤ 64) produced by the codelet generator "Gen-

erating Small FFT Kernels" (Section 11.6: Generating Small FFT

√

Kernels) and also arbitrary radices (e.g. radix- n).

The most common case is a decimation in time (DIT)

plan,

corresponding to a radix r = n2 (and thus m =

n1) in the notation of "Review of the Cooley-Tukey FFT"

(Section 11.2:

Review of the Cooley-Tukey FFT): it first

solves d f t ({(m, r · ι, o)}, V ∪ {(r, ι, m · o)}, I, O), then multiplies

the output array O by the twiddle factors, and finally solves

d f t ({(r, m · o, m · o)}, V ∪ {(m, o, o)}, O, O). For performance, the

last two steps are not planned independently, but are fused together

in a single “twiddle” codelet—a fragment of C code that multiplies

its input by the twiddle factors and performs a DFT of size r, oper-

ating in-place on O.

11.5.2.3 Plans for higher vector ranks

These plans extract a vector loop to reduce a DFT problem to a

problem of lower vector rank, which is then solved recursively.

Any of the vector loops of V could be extracted in this way, lead-

ing to a number of possible plans corresponding to different loop

orderings.

Formally, to solve d f t (N, V, I, O), where V = {(n, ι, o)} ∪ V1,

FFTW generates a loop that, for all k such that 0 ≤ k < n, invokes

a plan for d f t (N, V1, I + k · ι, O + k · o).

11.5.2.4 Indirect plans

Indirect plans transform a DFT problem that requires some data

shuffling (or discontiguous operation) into a problem that requires

no shuffling plus a rank-0 problem that performs the shuffling.

Formally, to solve d f t (N, V, I, O) where |N| > 0, FFTW gener-

ates a plan that first solves d f t ({}, N ∪ V, I, O), and then solves

Available for free at Connexions

<http://cnx.org/content/col10683/1.5>

185

d f t (copy − o (N) , copy − o (V) , O, O).

Here we define copy −

o (t) to be the I/O tensor {(n, o, o) | (n, ι, o) ∈ t}: that is, it replaces

the input strides with the output strides. Thus, an indirect plan first

rearranges/copies the data to the output, then solves the problem in

place.

11.5.2.5 Plans for prime sizes

As discussed in "Goals and Background of the FFTW Project"

(Section 11.3: Goals and Background of the FFTW Project), it

turns out to be surprisingly useful to be able to handle large prime

n (or large prime factors). Rader plans implement the algorithm

from [309] to compute one-dimensional DFTs of prime size in

Θ (nlogn) time. Bluestein plans implement Bluestein’s “chirp-z”

algorithm, which can also handle prime n in Θ (nlogn) time [35],

[305], [278]. Generic plans implement a naive Θ n2 algorithm

(useful for n

100).

11.5.2.6 Discussion

Although it may not be immediately apparent, the combination

of the recursive rules in "The space of plans in FFTW" (Sec-

tion 11.5.2: The space of plans in FFTW) can produce a number

of useful algorithms. To illustrate these compositions, we discuss

three particular issues: depth- vs. breadth-first, loop reordering,

and in-place transforms.

Available for free at Connexions

<http://cnx.org/content/col10683/1.5>

CHAPTER 11. IMPLEMENTING FFTS IN

186

PRACTICE

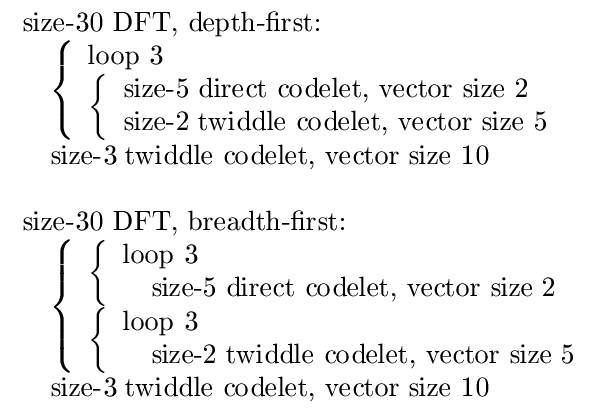

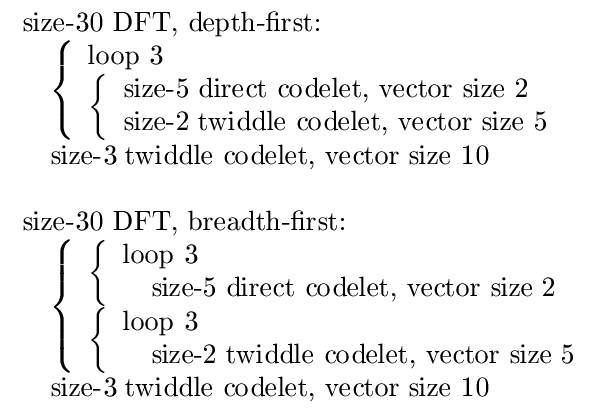

Figure 11.3: Two possible decompositions for a size-30 DFT,

both for the arbitrary choice of DIT radices 3 then 2 then

5, and prime-size codelets. Items grouped by a "{" result

from the plan for a single sub-problem. In the depth-first

case, the vector rank was reduced to zero as per "Plans for

higher vector ranks" (Section 11.5.2.3: Plans for higher vec-

tor ranks) before decomposing sub-problems, and vice-versa

in the breadth-first case.

As discussed previously in sections "Review of the Cooley-Tukey

FFT" (Section 11.2: Review of the Cooley-Tukey FFT) and "Un-

derstanding FFTs with an ideal cache" (Section 11.4.1: Under-

standing FFTs with an ideal cache), the same Cooley-Tukey de-

composition can be executed in either traditional breadth-first or-

der or in recursive depth-first order, where the latter has some

theoretical cache advantages. FFTW is explicitly recursive, and

thus it can naturally employ a depth-first order. Because its sub-

problems contain a vector loop that can be executed in a variety of

orders, however, FFTW can also employ breadth-first traversal. In

particular, a 1d algorithm resembling the traditional breadth-first

Available for free at Connexions

<http://cnx.org/content/col10683/1.5>

187

Cooley-Tukey would result from applying "Cooley-Tukey plans"

(Section 11.5.2.2.2: Cooley-Tukey plans) to completely factorize

the problem size before applying the loop rule "Plans for higher

vector ranks" (Section 11.5.2.3: Plans for higher vector ranks) to

reduce the vector ranks, whereas depth-first traversal would result

from applying the loop rule before factorizing each subtransform.

These two possibilities are illustrated by an example in Figure 11.3.

Another example of the effect of loop reordering is a style of plan

that we sometimes call vector recursion (unrelated to “vector-

radix” FFTs [114]). The basic idea is that, if one has a loop

(vector-rank 1) of transforms, where the vector stride is smaller

than the transform size, it is advantageous to push the loop towards

the leaves of the transform decomposition, while otherwise main-

taining recursive depth-first ordering, rather than looping “outside”

the transform; i.e., apply the usual FFT to “vectors” rather than

numbers. Limited forms of this idea have appeared for computing

multiple FFTs on vector processors (where the loop in question

maps directly to a hardware vector) [372]. For example, Cooley-

Tukey produces a unit input-stride vector loop at the top-level DIT

decomposition, but with a large output stride; this difference in

strides makes it non-obvious whether vector recursion is advanta-

geous for the sub-problem, but for large transforms we often ob-

serve the planner to choose this possibility.

In-place 1d transforms (with no separate bit reversal pass) can be

obtained as follows by a combination DIT and DIF plans "Cooley-

Tukey plans" (Section 11.5.2.2.2: Cooley-Tukey plans) with trans-

poses "Rank-0 plans" (Section 11.5.2.1: Rank-0 plans). First, the

transform is decomposed via a radix-p DIT plan into a vector of

p transforms of size qm, then these are decomposed in turn by a

radix-q DIF plan into a vector (rank 2) of p × q transforms of size

m. These transforms of size m have input and output at differ-

ent places/strides in the original array, and so cannot be solved

independently.

Instead, an indirect plan "Indirect plans" (Sec-

Available for free at Connexions

<http://cnx.org/content/col10683/1.5>

CHAPTER 11. IMPLEMENTING FFTS IN

188

PRACTICE

tion 11.5.2.4: Indirect plans) is used to express the sub-problem

as pq in-place transforms of size m, followed or preceded by an

m × p×q rank-0 transform. The latter sub-problem is easily seen to

be m in-place p × q transposes (ideally square, i.e. p = q). Related

strategies for in-place transforms based on small transposes were

described in [195], [375], [297], [166]; alternating DIT/DIF, with-

out concern for in-place operation, was also considered in [255],

[322].

11.5.3 The FFTW planner

Given a problem and a set of possible plans, the basic principle

behind the FFTW planner is straightforward: construct a plan for

each applicable algorithmic step, time the execution of these plans,

and select the fastest one. Each algorithmic step may break the

problem into subproblems, and the fastest plan for each subprob-

lem is constructed in the same way. These timing measurements

can either be performed at runtime, or alternatively the plans for a

given set of sizes can be precomputed and loaded at a later time.

A direct implementation of this approach, however, faces an expo-

nential explosion of the number of possible plans, and hence of the

planning time, as n increases. In order to reduce the planning time

to a manageable level, we employ several heuristics to reduce the

space of possible plans that must be compared. The most important

of these heuristics is dynamic programming [96]: it optimizes

each sub-problem locally, independently of the larger context (so

that the “best” plan for a given sub-problem is re-used whenever

that sub-problem is encountered). Dynamic programming is not