Skip a few years forward and you will find the designers of the original IBM PC making the justification that the largest mainframe in production only had 640K of RAM, so how could a toy computer need more? Not only did this thinking give us the magic 640K boundary you hear about in the DOS world, it also gave us that treacherous 384K region above it where adapter cards could be addressed, and memory managers attempted to load things high. In other words, we relived the memory-hardware-memory segmentation issues again.

Every computer operating system that allows for paging or swapping to disk must in some way deal with memory segmentation because you need to measure the amount of memory being moved around. What is inexcusable are the memory holes created by hardware designers. The combination of memory segmentation with memory holes made arrays far more complex than they needed to be in C/C++ and other languages.

We touched lightly on the PC memory model system in section 1.3. Each “segment” under DOS was 64K in size. DOS did not directly support paging and/or swapping to disk. A full “address” under DOS fit into a longword and was of the form segment:offset. The Compact, Large, and Huge memory models used this type of address for data pointers. Under the Tiny memory model everything had to fit into one segment, so all pointers were only a word in size and they contained only the offset.
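If the segment:offset form is new to you, a small sketch may help. On the 8086/8088 the hardware shifted the 16-bit segment left by four bits (multiplied it by 16) and added the 16-bit offset to get a 20-bit physical address. The code below only simulates that arithmetic on a modern compiler. The names `far_addr` and `linear_address` are mine, made up for illustration, and are not taken from any DOS compiler.

```c
#include <stdint.h>

/* Hypothetical model of a real-mode address: two 16-bit words,
   which together fill one 32-bit longword. */
typedef struct {
    uint16_t segment;
    uint16_t offset;
} far_addr;

/* Physical address = segment * 16 + offset.
   The mask models the 20 address lines of the original 8086/8088,
   where anything past 1 MB wrapped around to zero. */
uint32_t linear_address(far_addr a)
{
    return (((uint32_t)a.segment << 4) + a.offset) & 0xFFFFFu;
}
```

Feed this 1234:0010 and 1235:0000 and you get the same physical address, 0x12350, both times. Many different segment:offset pairs name the same byte, which is part of what made pointer comparisons and pointer math so slippery.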

I am sorry that I have to expose you to this level of discussion, but, without understanding the vicious flaw created by the hardware geeks, you cannot begin to understand just how difficult arrays were to originally implement. Of course, you have to take a look at some pretty common code to understand why this was.

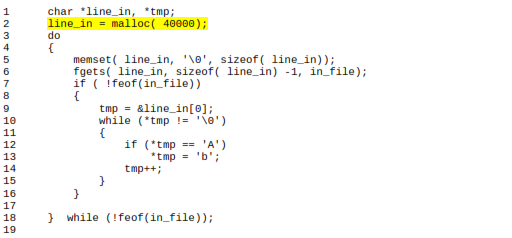

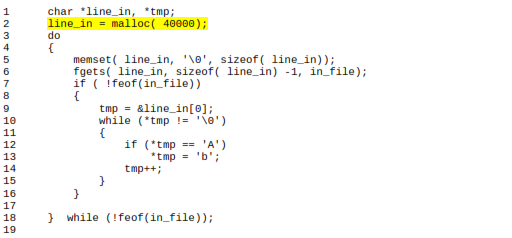

Other than a perplexing question as to why anybody would want to replace A with b in this manner, there is really nothing wrong with this code. I haven't compiled and tested it, but we aren't looking for a syntax error. (Okay, I didn't check for the malloc failure and didn't free, but that's not where we are headed with this discussion.) There was nothing in most compiler and operating system environments to stop malloc() from allocating memory across “segment boundaries.” It was not uncommon to see the exact same executable file run perfectly on many different DOS-based computers, then fail miserably on the next installation because that user had different drivers or something loaded which changed the starting address of the free heap. There were many compiler bugs involving failure to increment the segment when the offset rolled over.
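To make the rollover problem concrete, here is a rough simulation of the two behaviors. It is not the code any particular compiler generated. The type `far_ptr` and the functions `to_linear`, `advance_offset_only`, and `advance_with_carry` are hypothetical names I invented for this sketch, and it runs on a modern machine rather than under DOS.

```c
#include <stdint.h>

/* Hypothetical stand-in for a DOS far pointer. */
typedef struct {
    uint16_t segment;
    uint16_t offset;
} far_ptr;

/* Physical address the pointer refers to (segment * 16 + offset). */
uint32_t to_linear(far_ptr p)
{
    return (((uint32_t)p.segment << 4) + p.offset) & 0xFFFFFu;
}

/* The buggy behavior: only the 16-bit offset moves, so stepping
   past 0xFFFF silently wraps back to the start of the same segment. */
far_ptr advance_offset_only(far_ptr p, uint16_t n)
{
    p.offset = (uint16_t)(p.offset + n);
    return p;
}

/* The behavior you wanted: when the offset overflows, carry into the
   segment. 64K bytes is 0x1000 sixteen-byte paragraphs. */
far_ptr advance_with_carry(far_ptr p, uint16_t n)
{
    uint32_t sum = (uint32_t)p.offset + n;
    p.offset = (uint16_t)sum;
    if (sum > 0xFFFFu) {
        p.segment = (uint16_t)(p.segment + 0x1000u);
    }
    return p;
}
```

Start at 2000:FFFE and step forward four bytes. The offset-only version lands on 2000:0002, physical address 0x20002, almost 64K behind where it started. The carry version lands on 3000:0002, physical address 0x30002, which is where the next byte of your buffer actually lives. Whether your buffer straddled such a boundary depended on where the heap happened to start, which is why the same executable could work on one machine and fail on the next.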

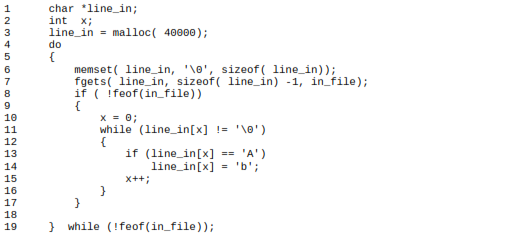

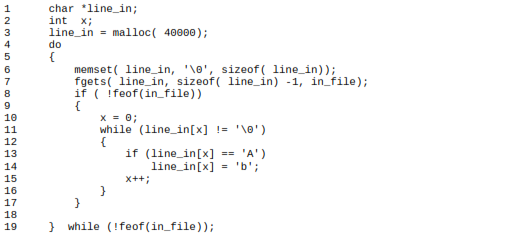

Had the above code been written to not use pointer math, it would have worked correctly in all environments.

The push to use pointers instead of array subscript syntax came from many directions. First off, many of us were using desktop computers with a whopping 4.77 MHz clock speed. The CPU in your current cell phone runs rings around those old chips. We had to save clock cycles wherever we could and nearly every geek of the day could quote off the top of their head just how many clock cycles *tmp saved over line_in[x].
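For anyone who has not seen the two styles side by side, here is a minimal sketch. The names line_in, x, and tmp come from the discussion above. The two functions, `count_spaces_indexed` and `count_spaces_pointer`, are hypothetical examples of my own and are not the code from the earlier listings.

```c
#include <stddef.h>

/* Subscript form: conceptually, every line_in[x] means
   "take the base address, add x, then fetch". */
size_t count_spaces_indexed(const char *line_in)
{
    size_t count = 0;
    for (size_t x = 0; line_in[x] != '\0'; x++) {
        if (line_in[x] == ' ') {
            count++;
        }
    }
    return count;
}

/* Pointer form: tmp already holds the address, so each pass
   only fetches through it and then bumps it by one. */
size_t count_spaces_pointer(const char *line_in)
{
    size_t count = 0;
    for (const char *tmp = line_in; *tmp != '\0'; tmp++) {
        if (*tmp == ' ') {
            count++;
        }
    }
    return count;
}
```

Both functions return the same answer for the same string. On the compilers of the day the second form skipped the add on every access, which is where the saved clock cycles came from. A modern optimizing compiler will usually generate much the same machine code for either one.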

C++ had to maintain backward compatibility with C, so all “native or natural” data types had arrays created as they were in C, but all arrays of objects were created usin