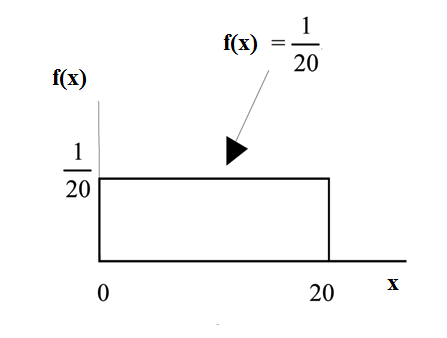

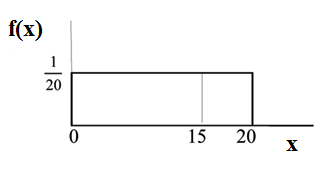

Consider the function $f(x) = \frac{1}{20}$ for $0 \le x \le 20$, where $x$ is a real number.


The graph of $f(x) = \frac{1}{20}$ is a horizontal line. However, since $0 \le x \le 20$, $f(x)$ is restricted to the portion between $x = 0$ and $x = 20$, inclusive.


Graph of $f(x) = \frac{1}{20}$ for $0 \le x \le 20$.

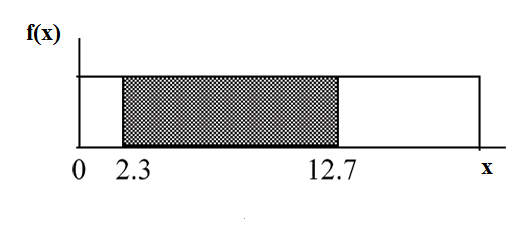

The graph of $f(x) = \frac{1}{20}$ is a horizontal line segment when $0 \le x \le 20$.


The area between $f(x) = \frac{1}{20}$ where $0 \le x \le 20$ and the x-axis is the area of a rectangle with base $= 20$ and height $= \frac{1}{20}$:

$\text{AREA} = 20\left(\frac{1}{20}\right) = 1$


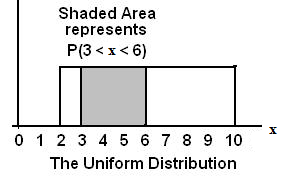

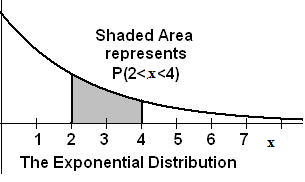

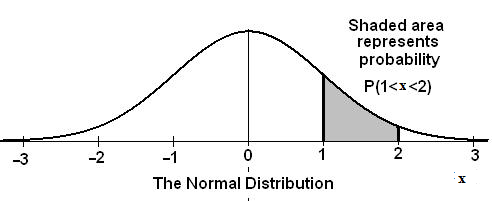

This particular function, where we have restricted $x$ so that the area between the function and the x-axis is 1, is an example of a continuous probability density function. It is used as a tool to calculate probabilities.
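Below is a minimal Python sketch of this density. The helper names `uniform_pdf` and `total_area` are hypothetical and do not come from the text. The function returns $\frac{1}{20}$ inside $0 \le x \le 20$ and 0 outside. A midpoint Riemann sum then checks that the total area is 1. For a rectangle, (base)(height) already gives the exact answer. The numerical sum is included only as a generic check that would also work for a density that is not constant.

```python
# Sketch of the density f(x) = 1/20 on 0 <= x <= 20 (helper names are hypothetical).

def uniform_pdf(x: float, a: float = 0.0, b: float = 20.0) -> float:
    """Height of the density at x: 1/(b - a) inside [a, b], 0 outside."""
    if a <= x <= b:
        return 1.0 / (b - a)
    return 0.0


def total_area(a: float = 0.0, b: float = 20.0, n: int = 1000) -> float:
    """Approximate the area under uniform_pdf on [a, b] with a midpoint Riemann sum."""
    width = (b - a) / n
    # Evaluate the density at the midpoint of each of the n thin strips.
    return sum(uniform_pdf(a + (i + 0.5) * width, a, b) * width for i in range(n))


print(uniform_pdf(7.5))        # 0.05, inside the interval
print(uniform_pdf(25.0))       # 0.0, outside the interval
print(f"{total_area():.6f}")   # 1.000000
```

Returning 0 outside $[0, 20]$ mirrors the restriction on $x$: the density is defined only on that segment.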

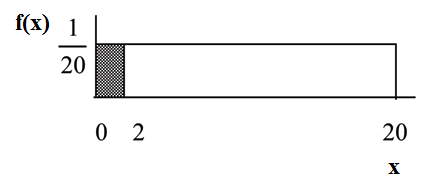

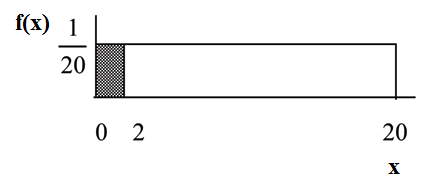

Suppose we want to find the area between $f(x) = \frac{1}{20}$ and the x-axis where $0 < x < 2$.

$\text{AREA} = (2 - 0)\left(\frac{1}{20}\right) = 0.1$

$(2 - 0) = 2$ = the base of a rectangle.

$\frac{1}{20}$ = the height.

The area corresponds to a probability. The probability that $x$ is between 0 and 2 is 0.1. Because $x$ is never less than 0 here, this can be written mathematically as $P(0 < x < 2) = P(x < 2) = 0.1$.
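The rectangle calculation can be expressed as a small Python function. This is a sketch under the assumption that $X$ has the density $f(x) = \frac{1}{20}$ on $[0, 20]$. The name `uniform_prob` is hypothetical. The requested interval is clipped to $[0, 20]$ because the density is 0 outside it. That clipping is also why $P(x < 2)$ equals $P(0 < x < 2)$.

```python
def uniform_prob(lo: float, hi: float, a: float = 0.0, b: float = 20.0) -> float:
    """P(lo < X < hi) for the density 1/(b - a) on [a, b], as (base)(height)."""
    # Clip the interval to [a, b]; outside it the density is 0 and adds no area.
    left, right = max(lo, a), min(hi, b)
    base = max(right - left, 0.0)
    height = 1.0 / (b - a)
    return base * height


print(f"{uniform_prob(0, 2):.2f}")               # 0.10
# P(x < 2): the lower limit -infinity is clipped to 0, giving the same area.
print(f"{uniform_prob(float('-inf'), 2):.2f}")   # 0.10
```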

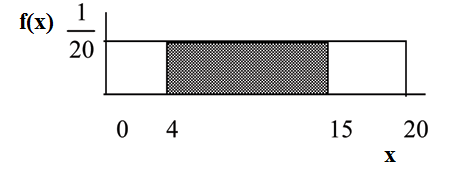

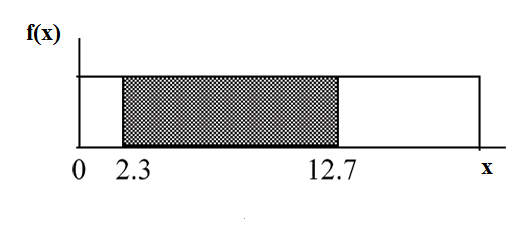

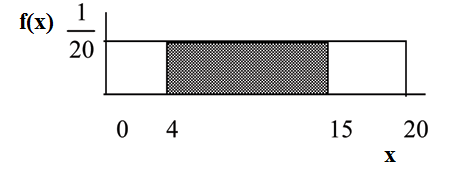

Suppose we want to find the area between $f(x) = \frac{1}{20}$ and the x-axis where $4 < x < 15$.

$\text{AREA} = (15 - 4)\left(\frac{1}{20}\right) = 0.55$

$(15 - 4) = 11$ = the base of a rectangle.

$\frac{1}{20}$ = the height.

The area corresponds to the probability $P(4 < x < 15) = 0.55$.
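To see that 0.55 is exact and not a rounded value, this sketch repeats the calculation with Python's standard `fractions.Fraction`, which keeps rational arithmetic exact. The variable names are illustrative only.

```python
from fractions import Fraction

# Exact rectangle area for P(4 < x < 15) under f(x) = 1/20 on [0, 20].
height = Fraction(1, 20)   # f(x) = 1/20
base = Fraction(15 - 4)    # (15 - 4) = 11
area = base * height

print(area)          # 11/20
print(float(area))   # 0.55
```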

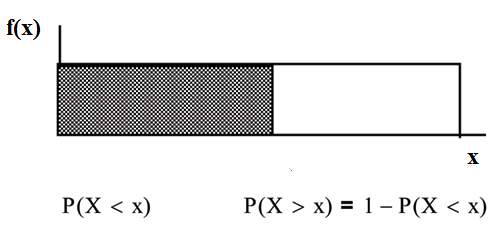

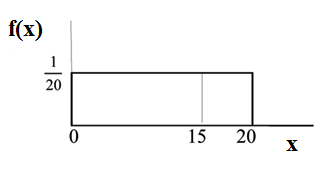

Suppose we want to find $P(x = 15)$. On an x-y graph, $x = 15$ is a vertical line. A vertical line has no width (or 0 width). Therefore, $P(x = 15) = (\text{base})(\text{height}) = (0)\left(\frac{1}{20}\right) = 0$.
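This short sketch applies the same (base)(height) rule to an interval of zero width. It then shrinks an interval around 15 to show the probability heading toward 0. The helper `rectangle_prob` is hypothetical and assumes the interval already lies inside $0 \le x \le 20$.

```python
def rectangle_prob(lo: float, hi: float, height: float = 1 / 20) -> float:
    """(base)(height) for an interval [lo, hi] assumed to lie inside 0 <= x <= 20."""
    return (hi - lo) * height


print(rectangle_prob(15, 15))  # 0.0, a single point has zero width

# Narrower intervals around x = 15 give smaller and smaller areas.
for eps in (1.0, 0.1, 0.01):
    print(eps, f"{rectangle_prob(15 - eps, 15 + eps):.4f}")
```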

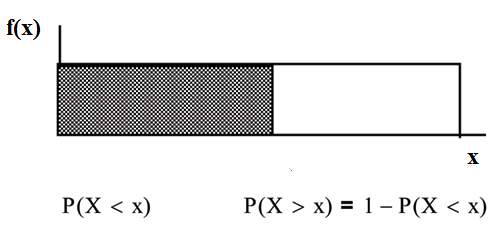

$P(X \le x)$ (which can be written as $P(X < x)$ for continuous distributions) is called the cumulative distribution function, or CDF. Notice the "less than or equal to" symbol. We can also use the CDF to calculate $P(X > x)$. The CDF gives the "area to the left" and $P(X > x)$ gives the "area to the right." We calculate $P(X > x)$ for continuous distributions as follows:

$P(X > x) = 1 - P(X < x)$.
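For the density $f(x) = \frac{1}{20}$ on $[0, 20]$, the area to the left of $x$ is a rectangle of width $x$ and height $\frac{1}{20}$. This gives the CDF $F(x) = \frac{x}{20}$ on $[0, 20]$, with $F(x) = 0$ below 0 and $F(x) = 1$ above 20. That closed form is not stated in the text above; it is the standard CDF of a uniform distribution. The sketch below uses it together with the complement rule. The names `uniform_cdf` and `upper_tail` are hypothetical.

```python
def uniform_cdf(x: float, a: float = 0.0, b: float = 20.0) -> float:
    """P(X <= x): area to the left of x under f(x) = 1/(b - a) on [a, b]."""
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    return (x - a) / (b - a)


def upper_tail(x: float, a: float = 0.0, b: float = 20.0) -> float:
    """P(X > x) = 1 - P(X < x): area to the right of x."""
    return 1.0 - uniform_cdf(x, a, b)


print(f"{uniform_cdf(2):.2f}")                     # 0.10
print(f"{upper_tail(15):.2f}")                     # 0.25
print(f"{uniform_cdf(15) - uniform_cdf(4):.2f}")   # 0.55
```

The last line recovers $P(4 < x < 15) = 0.55$ as the difference of two "areas to the left."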

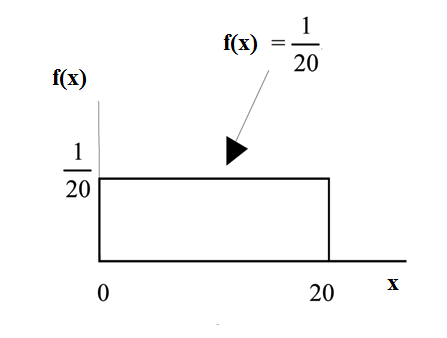

Label the graph with $f(x)$ and $x$. Scale the x and y axes with the maximum x and y values: $f(x) = \frac{1}{20}$, $0 \le x \le 20$.
