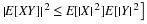

Examination of the independence concept reveals two important mathematical facts:

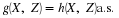

Independence of a class of non-mutually-exclusive events depends upon the probability measure, and not on the relationship between the events. Independence cannot be displayed on a Venn diagram unless probabilities are indicated. For one probability measure a pair may be independent, while for another probability measure the pair may not be independent.

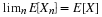

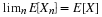

Conditional probability is a probability measure, since it has the three defining properties and all those properties derived therefrom.
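The first fact can be checked numerically. The following minimal Python sketch uses a hypothetical four-point sample space with events $A$ and $B$ that share one outcome; the outcome labels and both probability measures are assumed for illustration. The events stay the same, and only the measure changes.

```python
from fractions import Fraction

# Hypothetical four-point sample space; A and B share the outcome "w1",
# so they are not mutually exclusive.
OUTCOMES = ("w1", "w2", "w3", "w4")
A = {"w1", "w2"}
B = {"w1", "w3"}


def prob(measure, event):
    """Probability of an event (a set of outcomes) under a measure (dict outcome -> weight)."""
    return sum(measure[w] for w in event)


def independent(measure, a, b):
    """Product rule P(AB) == P(A)P(B), evaluated exactly with Fractions."""
    return prob(measure, a & b) == prob(measure, a) * prob(measure, b)


# Two assumed probability measures on the same outcomes.
uniform = {w: Fraction(1, 4) for w in OUTCOMES}
skewed = {"w1": Fraction(2, 5), "w2": Fraction(1, 10),
          "w3": Fraction(1, 10), "w4": Fraction(2, 5)}

for name, measure in (("uniform", uniform), ("skewed", skewed)):
    assert sum(measure.values()) == 1  # each is a probability measure
    print(name, "independent:", independent(measure, A, B))
```

With these weights the sketch reports the pair independent under `uniform` ($P(AB) = 1/4 = P(A)P(B)$) and not independent under `skewed` ($P(AB) = 2/5$).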

This raises the question: is there a useful conditional independence, i.e., independence with respect to a conditional probability measure? In this chapter we explore that question in a fruitful way.

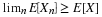

Among the simple examples of “operational independence” in the unit on independence of events, which lead naturally to an assumption of “probabilistic independence,” are the following:

If customers come into a well-stocked shop at different times, each unaware of the choice made by the other, the item purchased by one should not be affected by the choice made by the other.

If two students are taking exams in different courses, the grade one makes should not affect the grade made by the other.

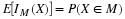

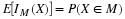

Example 5.1. Buying umbrellas and the weather

A department store has a nice stock of umbrellas. Two customers come into the store “independently.” Let $A$ be the event the first buys an umbrella and $B$ the event the second buys an umbrella. Normally, we should think the events $\{A, B\}$ form an independent pair. But consider the effect of weather on the purchases. Let $C$ be the event the weather is rainy (i.e., is raining or threatening to rain). Now we should think $P(A|C) > P(A|C^c)$ and $P(B|C) > P(B|C^c)$. The weather has a decided effect on the likelihood of buying an umbrella. But given the fact the weather is rainy (event $C$ has occurred), it would seem reasonable that purchase of an umbrella by one should not affect the likelihood of such a purchase by the other. Thus, it may be reasonable to suppose

$$P(A|C) = P(A|BC)$$

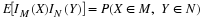

An examination of the sixteen equivalent conditions for independence, with probability measure $P$ replaced by probability measure $P_C$, shows that we have independence of the pair $\{A, B\}$ with respect to the conditional probability measure $P_C(\cdot) = P(\cdot|C)$. Thus, $P(AB|C) = P(A|C)P(B|C)$. For this example, we should also expect that $P(A|C^c) = P(A|BC^c)$, so that there is independence with respect to the conditional probability measure $P(\cdot|C^c)$. Does this make the pair $\{A, B\}$ independent (with respect to the prior probability measure $P$)? Some numerical examples make it plain that only in the most unusual cases would the pair be independent. Without calculations, we can see why this should be so. If the first customer buys an umbrella, this indicates a higher-than-normal likelihood that the weather is rainy, in which case the second customer is likely to buy. This leads to $P(B|A) > P(B)$. Consider the following numerical case. Suppose

$$P(AB|C) = P(A|C)P(B|C) \quad \text{and} \quad P(AB|C^c) = P(A|C^c)P(B|C^c)$$

for given values of $P(C)$ and of the conditional probabilities of $A$ and $B$, given $C$ and given $C^c$. Then, by the law of total probability,

$$P(A) = P(A|C)P(C) + P(A|C^c)P(C^c), \qquad P(B) = P(B|C)P(C) + P(B|C^c)P(C^c)$$

$$P(AB) = P(AB|C)P(C) + P(AB|C^c)P(C^c) = P(A|C)P(B|C)P(C) + P(A|C^c)P(B|C^c)P(C^c)$$

As a result,

$$P(A)P(B) = 0.0816 \neq 0.1110 = P(AB) \tag{5.5}$$

The product rule fails, so that the pair is not independent. An examination of the pattern of computation shows that independence would require very special probabilities which are not likely to be encountered.
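The computation behind (5.5) can be sketched in Python. The numerical inputs are not restated in the text above, so the values below ($P(C) = 0.30$, $P(A|C) = 0.60$, $P(A|C^c) = 0.20$, $P(B|C) = 0.50$, $P(B|C^c) = 0.15$) are assumptions; with them the results agree with the figures in (5.5). The code applies the law of total probability over the partition $\{C, C^c\}$ and uses conditional independence given each part of the partition.

```python
# Assumed inputs for the numerical case of Example 5.1 (not restated in the text).
P_C = 0.30
P_A_GIVEN = {"C": 0.60, "Cc": 0.20}  # P(A|C), P(A|C^c)
P_B_GIVEN = {"C": 0.50, "Cc": 0.15}  # P(B|C), P(B|C^c)
WEIGHTS = {"C": P_C, "Cc": 1 - P_C}  # P(C), P(C^c)


def total_probability(conditional):
    """Law of total probability over the partition {C, C^c}."""
    return sum(conditional[k] * WEIGHTS[k] for k in WEIGHTS)


p_A = total_probability(P_A_GIVEN)
p_B = total_probability(P_B_GIVEN)
# Conditional independence given C and given C^c lets P(AB|.) factor in each term.
p_AB = total_probability({k: P_A_GIVEN[k] * P_B_GIVEN[k] for k in WEIGHTS})

print(f"P(A) = {p_A:.4f}, P(B) = {p_B:.4f}")
print(f"P(A)P(B) = {p_A * p_B:.4f}, P(AB) = {p_AB:.4f}")
print(f"P(B|A) = {p_AB / p_A:.4f} versus P(B) = {p_B:.4f}")
```

The last line also shows the effect described in the text: learning that the first customer bought an umbrella raises the probability that the second does.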

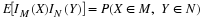

Example 5.2. Students and exams

Two students take exams in different courses. Under normal circumstances, one would suppose their performances form an independent pair. Let $A$ be the event the first student makes grade 80 or better and $B$ the event the second has a grade of 80 or better. The exam is given on Monday morning. It is the fall semester. There is a probability 0.30 that there was a football game on Saturday, and both students are enthusiastic fans. Let $C$ be the event of a game on the previous Saturday. Now it is reasonable to suppose

$$P(A|C) = P(A|BC) \quad \text{and} \quad P(A|C^c) = P(A|BC^c)$$

If we know that there was a Saturday game, additional knowledge that $B$ has occurred does not affect the likelihood that $A$ occurs. Again, use of equivalent conditions shows that the situation may be expressed

$$P(AB|C) = P(A|C)P(B|C) \quad \text{and} \quad P(AB|C^c) = P(A|C^c)P(B|C^c)$$

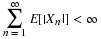

Under these conditions, we should suppose that $P(A|C) < P(A|C^c)$ and $P(B|C) < P(B|C^c)$. If we knew that one did poorly on the exam, this would increase the likelihood there was a Saturday game and hence increase the likelihood that the other did poorly. The failure to be independent arises from a common chance factor that affects both. Although their performances are “operationally” independent, they are not independent in the probability sense. As a numerical example, suppose values are assigned to $P(A|C)$, $P(A|C^c)$, $P(B|C)$ and $P(B|C^c)$, with $P(C) = 0.30$ as above. Straightforward calculations, as in Example 5.1, give $P(A)$, $P(B)$ and $P(AB)$. Note that $P(A|B) = 0.8514 > P(A)$, as would be expected.
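A similar sketch applies to this example. Only $P(C) = 0.30$ comes from the text; the four conditional probabilities below are assumed, chosen to reflect poorer performance after a game and to reproduce the quoted $P(A|B) = 0.8514$. The helper `mixture_probs` is a hypothetical name used only here.

```python
def mixture_probs(p_C, a_C, a_Cc, b_C, b_Cc):
    """Return P(A), P(B), P(AB), assuming {A, B} is conditionally independent
    given C and given C^c (hypothetical helper)."""
    p_Cc = 1 - p_C
    p_A = a_C * p_C + a_Cc * p_Cc
    p_B = b_C * p_C + b_Cc * p_Cc
    p_AB = a_C * b_C * p_C + a_Cc * b_Cc * p_Cc
    return p_A, p_B, p_AB


# P(C) = 0.30 is from the text; the conditional probabilities are assumed,
# with lower chances of a good grade after a Saturday game.
p_A, p_B, p_AB = mixture_probs(p_C=0.30, a_C=0.7, a_Cc=0.9, b_C=0.6, b_Cc=0.8)
p_A_given_B = p_AB / p_B

print(f"P(A) = {p_A:.4f}, P(B) = {p_B:.4f}, P(AB) = {p_AB:.4f}")
print(f"P(A)P(B) = {p_A * p_B:.4f}")
print(f"P(A|B) = {p_A_given_B:.4f} > P(A) = {p_A:.4f}")
```

As in Example 5.1, the product rule fails for $P$ even though it holds for each conditional measure.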

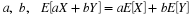

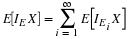

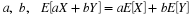

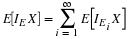

Sixteen equivalent conditions

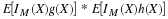

Using the facts on repeated conditioning and the equivalent conditions for independence, we may produce a similar table of equivalent conditions for conditional independence. In the hybrid notation we use for repeated conditioning, we write

$$P_C(A|B) = P_C(A) \quad \text{or} \quad P_C(AB) = P_C(A)P_C(B)$$

This translates into

$$P(A|BC) = P(A|C) \quad \text{or} \quad P(AB|C) = P(A|C)P(B|C)$$

If it is known that $C$ has occurred, then additional knowledge of the occurrence of $B$ does not change the likelihood of $A$.
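The translation from the hybrid notation follows from applying the definition of conditional probability twice. A short derivation, assuming $P(BC) > 0$:

$$P_C(A|B) = \frac{P_C(AB)}{P_C(B)} = \frac{P(ABC)/P(C)}{P(BC)/P(C)} = \frac{P(ABC)}{P(BC)} = P(A|BC)$$

Since $P_C(A) = P(A|C)$ and $P_C(AB) = P(AB|C)$, each condition written with $P_C$ becomes the corresponding condition written with $P$.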

If we write the sixteen equivalent conditions for independence in terms of the conditional probability measure $P_C(\cdot)$, then translate as above, we have the following equivalent conditions.

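Table 5.1. Sixteen equivalent conditions

| Conditioned on $B$ or $B^c$ | Conditioned on $A$ or $A^c$ | Product rule |
|---|---|---|
| $P(A \mid BC) = P(A \mid C)$ | $P(B \mid AC) = P(B \mid C)$ | $P(AB \mid C) = P(A \mid C)P(B \mid C)$ |
| $P(A \mid B^cC) = P(A \mid C)$ | $P(B^c \mid AC) = P(B^c \mid C)$ | $P(AB^c \mid C) = P(A \mid C)P(B^c \mid C)$ |
| $P(A^c \mid BC) = P(A^c \mid C)$ | $P(B \mid A^cC) = P(B \mid C)$ | $P(A^cB \mid C) = P(A^c \mid C)P(B \mid C)$ |
| $P(A^c \mid B^cC) = P(A^c \mid C)$ | $P(B^c \mid A^cC) = P(B^c \mid C)$ | $P(A^cB^c \mid C) = P(A^c \mid C)P(B^c \mid C)$ |
| $P(A \mid BC) = P(A \mid B^cC)$ | $P(B \mid AC) = P(B \mid A^cC)$ | |
| $P(A^c \mid BC) = P(A^c \mid B^cC)$ | $P(B^c \mid AC) = P(B^c \mid A^cC)$ | |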
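The equivalence claimed for Table 5.1 can be spot-checked with exact arithmetic. The Python sketch below represents the conditional measure $P_C$ on the four atoms $AB$, $AB^c$, $A^cB$, $A^cB^c$ and evaluates all sixteen conditions. The function name `sixteen_conditions` and both measures are hypothetical: one is built from the product rule with assumed values $P(A|C) = 3/5$ and $P(B|C) = 1/2$, the other is an assumed measure for which the product rule fails. Checking two cases illustrates the table; it does not prove the equivalence.

```python
from fractions import Fraction
from itertools import product

# Events as predicates on an atom (a, b): a is True when A occurs, b when B occurs.
A = lambda a, b: a
Ac = lambda a, b: not a
B = lambda a, b: b
Bc = lambda a, b: not b


def sixteen_conditions(pc):
    """Evaluate the sixteen conditions of Table 5.1 for pc, a dict mapping each
    atom (a, b) to its conditional probability P(atom | C)."""
    def p(event):                  # P(event | C)
        return sum(w for atom, w in pc.items() if event(*atom))

    def both(x, y):                # intersection of two events
        return lambda a, b: x(a, b) and y(a, b)

    def cond(x, y):                # P(x | yC) = P(xy | C) / P(y | C)
        return p(both(x, y)) / p(y)

    results = []
    for X, Y in ((A, B), (A, Bc), (Ac, B), (Ac, Bc)):   # upper part, row by row
        results += [cond(X, Y) == p(X),
                    cond(Y, X) == p(Y),
                    p(both(X, Y)) == p(X) * p(Y)]
    results += [cond(A, B) == cond(A, Bc), cond(B, A) == cond(B, Ac),   # lower part
                cond(Ac, B) == cond(Ac, Bc), cond(Bc, A) == cond(Bc, Ac)]
    return results


# Assumed measure built from the product rule with P(A|C) = 3/5, P(B|C) = 1/2.
pa, pb = Fraction(3, 5), Fraction(1, 2)
ci_measure = {(a, b): (pa if a else 1 - pa) * (pb if b else 1 - pb)
              for a, b in product((True, False), repeat=2)}
# Assumed measure for which the product rule fails.
skewed_measure = {(True, True): Fraction(2, 5), (True, False): Fraction(1, 10),
                  (False, True): Fraction(1, 10), (False, False): Fraction(2, 5)}

for name, pc in (("product", ci_measure), ("skewed", skewed_measure)):
    checks = sixteen_conditions(pc)
    print(f"{name}: {sum(checks)} of {len(checks)} conditions hold")
```

For the product-form measure every condition holds; for the skewed measure none does, consistent with the "one holds, then all hold" pattern of the table.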

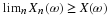

The patterns of conditioning in the examples above belong to this set. In a given problem, one or the other of these conditions may seem a reasonable assumption. As soon as one of these patterns is recognized, then all are equally valid assumptions. Because of its simplicity and symmetry, we take as the defining condition the product rule $P(AB|C) = P(A|C)P(B|C)$.

Definition. A pair of events $\{A, B\}$ is said to be conditionally independent, given $C$, designated $\{A, B\}$ ci $|C$, iff the following product rule holds: $P(AB|C) = P(A|C)P(B|C)$.

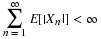

The equivalence of the four entries in the right-hand column of the upper part of the table establishes the following rule.

The replacement rule

If any of the pairs $\{A, B\}$, $\{A, B^c\}$, $\{A^c, B\}$, or $\{A^c, B^c\}$ is conditionally independent, given $C$, then so are the others.

□

This may be expressed by saying that if a pair is conditionally independent, we may replace either or both by their complements and still have a conditionally independent pair.
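The replacement rule can be verified directly from the product rule. A sketch for one case, assuming $P(AB|C) = P(A|C)P(B|C)$ and using $P(A|C) = P(AB|C) + P(AB^c|C)$, since $B$ and $B^c$ partition the sample space:

$$P(AB^c|C) = P(A|C) - P(AB|C) = P(A|C) - P(A|C)P(B|C) = P(A|C)\,[1 - P(B|C)] = P(A|C)P(B^c|C)$$

The same step with the roles of $A$ and $B$ interchanged gives the pair $\{A^c, B\}$, and applying it twice gives $\{A^c, B^c\}$.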

To illustrate further the usefulness of this concept, we note some other common examples in which similar conditions hold: there is operational independence, but also some chance factor that affects both.

In the examples considered so far, it has been reasonable to assume conditional independence, given an event $C$, and conditional independence, given the complementary event. But there are cases in which the effect of the conditioning event is asymmetric. We consider several examples.

Two students are working on a term paper. They work quite separately. They both need to borrow a certain book from the library. Let $C$ be the event the library has two copies available. If $A$ is the event the first completes on time and $B$ the event the second is successful, then it seems reasonable to assume $P(AB|C) = P(A|C)P(B|C)$. However, if only one book is available, then the two events would not be conditionally independent. In general $P(B|AC^c) < P(B|C^c)$, since if the first student completes on time, then he or she must have been successful in getting the book, to the detriment of the second.
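The asymmetry in the library example can be illustrated with hypothetical numbers. In the Python sketch below, all probabilities are assumed, and $C^c$ is read as “only one copy available.” Given two copies, the joint distribution of $A$ and $B$ is built from the product rule; given one copy, joint completion is made unlikely, so the product rule fails and $P(B|AC^c) < P(B|C^c)$.

```python
from fractions import Fraction

# Hypothetical conditional joint distributions of (A occurs, B occurs).
# Given C (two copies): built from the product rule with P(A|C) = P(B|C) = 4/5.
given_two_copies = {(True, True): Fraction(16, 25), (True, False): Fraction(4, 25),
                    (False, True): Fraction(4, 25), (False, False): Fraction(1, 25)}
# Given C^c (read here as one copy): both finishing on time is unlikely.
given_one_copy = {(True, True): Fraction(1, 10), (True, False): Fraction(2, 5),
                  (False, True): Fraction(2, 5), (False, False): Fraction(1, 10)}


def marginals(joint):
    """Return (P(A|.), P(B|.)) from a conditional joint distribution."""
    p_a = sum(w for (a, _), w in joint.items() if a)
    p_b = sum(w for (_, b), w in joint.items() if b)
    return p_a, p_b


def satisfies_product_rule(joint):
    """Conditional independence check: P(AB|.) == P(A|.)P(B|.)."""
    p_a, p_b = marginals(joint)
    return joint[(True, True)] == p_a * p_b


for name, joint in (("two copies", given_two_copies), ("one copy", given_one_copy)):
    assert sum(joint.values()) == 1
    p_a, p_b = marginals(joint)
    print(f"{name}: product rule holds = {satisfies_product_rule(joint)}, "
          f"P(B|A,.) = {joint[(True, True)] / p_a}, P(B|.) = {p_b}")
```

Here conditional independence holds given $C$ but not given $C^c$, which is exactly the asymmetric pattern described above.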

If the two contractors of the example above both need material which may be in scarce supply, then successful completion would be conditionally independent, given an adequate supply, whereas they would not be conditionally independent, given a short supply.

Two students in the same course take an exam. If they prepared separately, the events of their getting good grades should be conditionally independent. If they study together, then the likelihoods of good grades would not be independent. With neither cheating nor collaborating on the test itself, if one