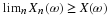

Suppose the pair $\{X, Y\}$ has the joint distribution given in Table 14.1.

Table 14.1.

| | $X = 0$ | $X = 1$ | $X = 4$ | $X = 9$ |
|---|---|---|---|---|
| $Y = 2$ | 0.05 | 0.04 | 0.21 | 0.15 |
| $Y = 0$ | 0.05 | 0.01 | 0.09 | 0.10 |
| $Y = -1$ | 0.10 | 0.05 | 0.10 | 0.05 |
| $P(X = t_i)$ | 0.20 | 0.10 | 0.40 | 0.30 |
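As a quick consistency check on the table, the sketch below stores the joint probabilities "as on the plane" (largest $Y$ value in the top row) and recomputes the marginals. It assumes the bottom row of Table 14.1 is the marginal $P(X = t_i)$, which the column sums confirm. The names `X`, `Y_rows`, `P`, `PX`, and `PY` are illustrative only.

```python
# Joint distribution from Table 14.1, stored "as on the plane":
# rows are Y values from top (largest) to bottom, columns are X values.
X = [0, 1, 4, 9]
Y_rows = [2, 0, -1]            # Y value for each row, top to bottom
P = [
    [0.05, 0.04, 0.21, 0.15],  # Y = 2
    [0.05, 0.01, 0.09, 0.10],  # Y = 0
    [0.10, 0.05, 0.10, 0.05],  # Y = -1
]

# Marginal for X: column sums.  Marginal for Y: row sums.
PX = [sum(row[i] for row in P) for i in range(len(X))]
PY = [sum(row) for row in P]

print("PX:", [round(p, 2) for p in PX])   # PX: [0.2, 0.1, 0.4, 0.3]
print("PY:", dict(zip(Y_rows, (round(p, 2) for p in PY))))
print("total mass:", round(sum(PX), 10))
```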

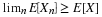

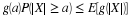

Calculate $E[Y|X=t_i]$ for each possible value $t_i$ taken on by $X$.

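$$
\begin{aligned}
E[Y|X=0] &= (-1 \cdot 0.10 + 0 \cdot 0.05 + 2 \cdot 0.05)/0.20 = 0\\
E[Y|X=1] &= (-1 \cdot 0.05 + 0 \cdot 0.01 + 2 \cdot 0.04)/0.10 = 0.30\\
E[Y|X=4] &= (-1 \cdot 0.10 + 0 \cdot 0.09 + 2 \cdot 0.21)/0.40 = 0.80\\
E[Y|X=9] &= (-1 \cdot 0.05 + 0 \cdot 0.10 + 2 \cdot 0.15)/0.30 \approx 0.83
\end{aligned}
$$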
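The four hand computations can be checked with exact rational arithmetic. This minimal sketch (Python's `fractions` module, with masses entered in hundredths) is only a verification aid; `columns` and `cond_mean_Y` are hypothetical names, not part of the text. Note in particular that the divisor for $X = 9$ is $P(X = 9) = 0.30$, giving exactly $5/6$.

```python
from fractions import Fraction as F

# Columns of Table 14.1: for each value t of X, the masses P(X = t, Y = u)
# for u = -1, 0, 2, written in hundredths so the arithmetic is exact.
u_values = [-1, 0, 2]
columns = {
    0: [10, 5, 5],
    1: [5, 1, 4],
    4: [10, 9, 21],
    9: [5, 10, 15],
}

def cond_mean_Y(t):
    """E[Y | X = t] = sum_j u_j P(X = t, Y = u_j) / P(X = t)."""
    masses = [F(m, 100) for m in columns[t]]
    px = sum(masses)                        # P(X = t), the column sum
    return sum(u * m for u, m in zip(u_values, masses)) / px

for t in columns:
    e = cond_mean_Y(t)
    print(t, e, round(float(e), 4))
```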

The pattern of operation in each case can be described as follows:

$$E[Y|X=t_i] = \frac{1}{P(X=t_i)} \sum_j u_j \, P(X=t_i, Y=u_j)$$

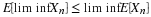

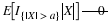

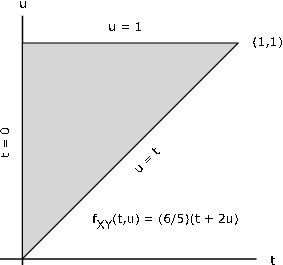

The following interpretation helps visualize the conditional expectation and points to an important result in the general case. For each $t_i$ we use the mass distributed "above" it. This mass is distributed along a vertical line at the values $u_j$ taken on by $Y$. The computation determines the center of mass for the conditional distribution above $t = t_i$. As in the case of ordinary expectations, this should be the best estimate, in the mean-square sense, of $Y$ when $X = t_i$. We examine that possibility in the treatment of the regression problem in the section called "The regression problem".
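A small numerical illustration (not a proof) of the mean-square claim, using the column above $t = 9$: for any constant $c$, $E[(Y-c)^2 | X=t_i] = \operatorname{Var}[Y|X=t_i] + (c - E[Y|X=t_i])^2$, so the conditional mean-square error is smallest at the center of mass. The sketch evaluates that error on a grid of candidate constants with exact fractions; the grid range and step are arbitrary choices for illustration.

```python
from fractions import Fraction as F

# Conditional distribution of Y given X = 9 from Table 14.1:
# P(Y = u | X = 9) = P(X = 9, Y = u) / P(X = 9), with P(X = 9) = 0.30.
u_values = [-1, 0, 2]
joint = [F(5, 100), F(10, 100), F(15, 100)]
px = sum(joint)
cond = [m / px for m in joint]              # 1/6, 1/3, 1/2

def cond_mse(c):
    """E[(Y - c)^2 | X = 9] for a constant estimate c."""
    return sum(p * (u - c) ** 2 for u, p in zip(u_values, cond))

# Center of mass of the conditional distribution, i.e. E[Y | X = 9].
center = sum(u * p for u, p in zip(u_values, cond))

# Candidate constants from -2 to 3 in steps of 1/12 (the grid contains 5/6).
grid = [F(k, 12) for k in range(-24, 37)]
best = min(grid, key=cond_mse)
print(center, best, cond_mse(center))
```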

Although the calculations are not difficult for a problem of this size, the basic pattern can be implemented simply with MATLAB, making the handling of much larger problems quite easy. This is particularly useful in dealing with the simple approximation to an absolutely continuous pair.

```matlab
X = [0 1 4 9];                               % Data for the joint distribution
Y = [-1 0 2];
P = 0.01*[ 5 4 21 15; 5 1 9 10; 10 5 10 5];
jcalc                                        % Setup for calculations
Enter JOINT PROBABILITIES (as on the plane)  P
Enter row matrix of VALUES of X  X
Enter row matrix of VALUES of Y  Y
 Use array operations on matrices X, Y, PX, PY, t, u, and P
EYX = sum(u.*P)./sum(P);    % sum(P) = PX (operation sum yields column sums)
                            % u.*P = u_j P(X = t_i, Y = u_j) for all i, j
disp([X;EYX]')
         0         0
    1.0000    0.3000
    4.0000    0.8000
    9.0000    0.8333
```
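For readers without the `jcalc` setup, here is a plain-Python sketch of the same array pattern, `EYX = sum(u.*P)./sum(P)`. It assumes, as the use of `u.*P` in the session implies, that `u` is a matrix the same shape as `P` whose row $r$ holds the $Y$ value of that row, with rows ordered "as on the plane". The helper `col_sums` is a hypothetical stand-in for MATLAB's `sum` applied to a matrix (one sum per column).

```python
# Mirror of the MATLAB pattern EYX = sum(u.*P)./sum(P), in plain Python.
X = [0, 1, 4, 9]
Y = [-1, 0, 2]
P = [[0.05, 0.04, 0.21, 0.15],
     [0.05, 0.01, 0.09, 0.10],
     [0.10, 0.05, 0.10, 0.05]]

Y_plane = sorted(Y, reverse=True)            # [2, 0, -1]: top row first
u = [[y] * len(X) for y in Y_plane]          # u[r][c] = Y value of row r

def col_sums(M):
    """Equivalent of MATLAB sum(M) on a matrix: one sum per column."""
    return [sum(row[c] for row in M) for c in range(len(M[0]))]

# Element-wise product u.*P: entry (r, c) is u_j * P(X = t_c, Y = u_j).
uP = [[u[r][c] * P[r][c] for c in range(len(X))] for r in range(len(Y_plane))]
PX = col_sums(P)                             # marginal P(X = t_i)
EYX = [a / b for a, b in zip(col_sums(uP), PX)]

for x, e in zip(X, EYX):
    print(f"{x:10.4f} {e:10.4f}")
```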

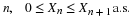

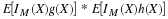

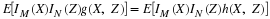

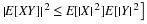

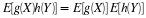

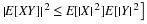

The calculations extend to  . Instead of values of uj we use

values of

. Instead of values of uj we use

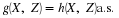

values of  in the calculations. Suppose Z=g(X,Y)=Y2–2XY.

in the calculations. Suppose Z=g(X,Y)=Y2–2XY.

```matlab
G = u.^2 - 2*t.*u;          % Z = g(X,Y) = Y^2 - 2XY
EZX = sum(G.*P)./sum(P);    % E[Z|X=x]
disp([X;EZX]')
         0    1.5000
    1.0000    1.5000
    4.0000   -4.0500
    9.0000  -12.8333
```
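The same idea works for any function $g$: replace each $u_j$ by $g(t_i, u_j)$ before weighting by the joint mass. The sketch below is a hypothetical plain-Python counterpart of `G = u.^2 - 2*t.*u; EZX = sum(G.*P)./sum(P)`, walking column by column instead of building the `t` and `u` matrices; `cond_expect` is an illustrative name, not a toolbox function.

```python
# E[g(X, Y) | X = t_i] for a joint table laid out "as on the plane".
X = [0, 1, 4, 9]
Y_plane = [2, 0, -1]                 # Y values of the rows of P, top to bottom
P = [[0.05, 0.04, 0.21, 0.15],
     [0.05, 0.01, 0.09, 0.10],
     [0.10, 0.05, 0.10, 0.05]]

def g(t, u):
    """Z = g(X, Y) = Y^2 - 2XY, evaluated at X = t, Y = u."""
    return u**2 - 2*t*u

def cond_expect(g, X, Y_plane, P):
    """Return [E[g(X, Y) | X = t] for t in X]."""
    result = []
    for c, t in enumerate(X):
        px = sum(P[r][c] for r in range(len(Y_plane)))          # P(X = t)
        num = sum(g(t, y) * P[r][c] for r, y in enumerate(Y_plane))
        result.append(num / px)
    return result

EZX = cond_expect(g, X, Y_plane, P)
for t, e in zip(X, EZX):
    print(f"{t:10.4f} {e:10.4f}")
```

Passing `lambda t, u: u` as `g` recovers $E[Y|X=t_i]$, which is the special case computed first.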