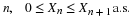

The number of customers in a major appliance store is equally likely to be 1, 2, or 3.

Each customer buys 0, 1, or 2 items with respective probabilities 0.5, 0.4, 0.1.

Customers buy independently of one another, regardless of the number of customers.

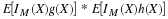

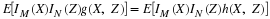

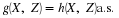

First we determine the matrices representing gN and gY. The coefficients are the probabilities that each integer value is observed. Note that the zero coefficients for any missing powers must be included.
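Written out as polynomials, the two generating functions for this example would be as follows. This is a restatement of the problem data, assuming the usual convention $g_X(s) = \sum_k P(X = k)\, s^k$; the symbol $s$ is not used in the original session.

```latex
g_N(s) = \tfrac{1}{3}\left(s + s^2 + s^3\right), \qquad
g_Y(s) = 0.5 + 0.4\,s + 0.1\,s^2
```

Since $P(N = 0) = 0$, the polynomial $g_N$ has no constant term, which is why its coefficient matrix must begin with an explicit zero.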

gN = (1/3)*[0 1 1 1]; % Note zero coefficient for missing zero power

gY = 0.1*[5 4 1]; % All powers 0 thru 2 have positive coefficients

gend

Do not forget zero coefficients for missing powers

Enter the gen fn COEFFICIENTS for gN gN % Coefficient matrix named gN

Enter the gen fn COEFFICIENTS for gY gY % Coefficient matrix named gY

Results are in N, PN, Y, PY, D, PD, P

May use jcalc or jcalcf on N, D, P

To view distribution for D, call for gD

disp(gD) % Optional display of complete distribution

0 0.2917

1.0000 0.3667

2.0000 0.2250

3.0000 0.0880

4.0000 0.0243

5.0000 0.0040

6.0000 0.0003
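The distribution above can be cross-checked without `gend`. For a compound demand D = Y_1 + ... + Y_N with N independent of the Y_i, the generating function is gD(s) = gN(gY(s)), so the coefficients of gD are a weighted sum of the coefficients of the powers of gY. The following is a minimal sketch that assumes only the built-in `conv`; the names `PDcheck`, `gYk`, and `k` are illustrative and do not come from the original m-files.

```matlab
gN = (1/3)*[0 1 1 1];          % P(N = 0), ..., P(N = 3)
gY = 0.1*[5 4 1];              % P(Y = 0), ..., P(Y = 2)
n  = length(gN) - 1;           % largest possible value of N
m  = length(gY) - 1;           % largest possible value of Y
PDcheck = zeros(1, n*m + 1);   % P(D = 0), ..., P(D = n*m)
PDcheck(1) = gN(1);            % N = 0 forces D = 0
gYk = 1;                       % coefficients of gY(s)^0
for k = 1:n
    gYk = conv(gYk, gY);       % coefficients of gY(s)^k
    PDcheck(1:k*m+1) = PDcheck(1:k*m+1) + gN(k+1)*gYk;
end
disp([0:n*m; PDcheck]')        % compare with the gD display
```

Each pass through the loop adds the term P(N = k) times the distribution of the sum of k purchases, which is the coefficient-level form of the composition gN(gY(s)).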

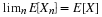

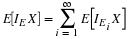

EN = N*PN'

EN = 2

EY = Y*PY'

EY = 0.6000

ED = D*PD'

ED = 1.2000 % Agrees with theoretical EN*EY

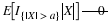

P3 = (D>=3)*PD'

P3 = 0.1167

[N,D,t,u,PN,PD,PL] = jcalcf(N,D,P);

EDn = sum(u.*P)./sum(P); % Column sums: E[D|N=n] = sum of d*P(N=n,D=d) over d, divided by P(N=n)

disp([N;EDn]')

1.0000 0.6000 % Agrees with theoretical E[D|N=n] = n*EY

2.0000 1.2000

3.0000 1.8000
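The conditional expectations can also be reproduced without `gend` or `jcalcf`, by building the joint distribution of (N, D) one row at a time. This is a minimal sketch assuming only the built-in `conv`; the names `J`, `d`, `rows`, and `EDnCheck` are illustrative, and here the rows of `J` correspond to values of N, which differs from the orientation of the matrix P used above.

```matlab
gN = (1/3)*[0 1 1 1];            % P(N = 0), ..., P(N = 3)
gY = 0.1*[5 4 1];                % P(Y = 0), ..., P(Y = 2)
n  = length(gN) - 1;             % largest possible value of N
m  = length(gY) - 1;             % largest possible value of Y
d  = 0:n*m;                      % possible values of D
J  = zeros(n+1, n*m+1);          % J(k+1, j+1) = P(N = k, D = j)
J(1,1) = gN(1);                  % N = 0 forces D = 0
gYk = 1;
for k = 1:n
    gYk = conv(gYk, gY);         % distribution of Y_1 + ... + Y_k
    J(k+1, 1:k*m+1) = gN(k+1)*gYk;
end
rows = find(gN > 0);             % values of N with positive probability
EDnCheck = (J(rows,:)*d')' ./ gN(rows);   % E[D | N = k] for those values
disp([rows - 1; EDnCheck]')
```

Dividing each row's weighted sum by P(N = k) gives E[D|N = k], which should equal k*EY for k = 1, 2, 3.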

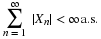

VD = (D.^2)*PD' - ED^2

VD = 1.1200 % Agrees with theoretical EN*VY + VN*EY^2
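The theoretical value quoted in the comment can be verified by hand from the problem data. The arithmetic below is a worked check, writing Var[.] for the quantities the session calls VN, VY, and VD.

```latex
\begin{aligned}
E[Y^2] &= 0.4(1) + 0.1(4) = 0.8, \qquad \operatorname{Var}[Y] = 0.8 - 0.6^2 = 0.44, \\
E[N^2] &= \tfrac{1}{3}(1 + 4 + 9) = \tfrac{14}{3}, \qquad \operatorname{Var}[N] = \tfrac{14}{3} - 2^2 = \tfrac{2}{3}, \\
\operatorname{Var}[D] &= E[N]\operatorname{Var}[Y] + \operatorname{Var}[N]\,E[Y]^2
  = 2(0.44) + \tfrac{2}{3}(0.36) = 0.88 + 0.24 = 1.12 .
\end{aligned}
```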