Color is a perceptual phenomenon related to the human response to different wavelengths of light, mainly in the region of 400 to 700 nanometers (nm).

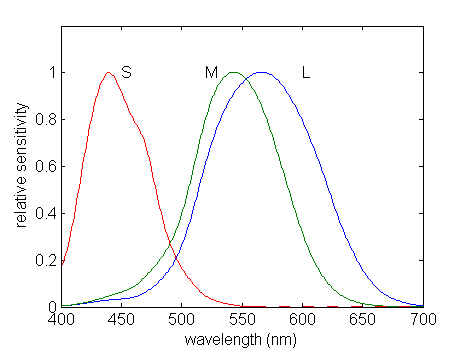

The perception of color arises from the sensitivities of three types of neurochemical sensors in the retina, known as the long (L), medium (M), and short (S) cones. The response of these sensors to photons is shown in Figure 17.1. Note that each sensor responds to a range of wavelengths.

Due to this property of the human visual system, all colors can be modeled as combinations of the three primary color components: red (R), green (G), and blue (B).

For the purpose of standardization, the CIE (Commission Internationale de l'Éclairage, the International Commission on Illumination) designated the following wavelength values for the three primary colors: blue = 435.8 nm, green = 546.1 nm, and red = 700 nm.

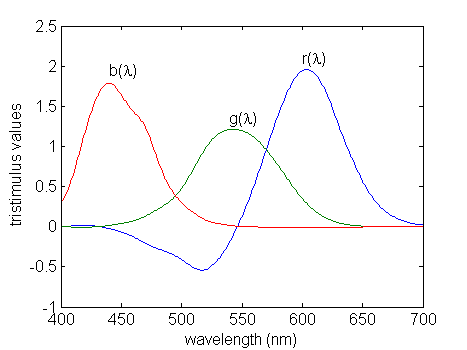

The relative amounts of the three primary colors of light required to produce a color of a given wavelength are called tristimulus values. Figure 17.2 shows the plot of tristimulus values using the CIE primary colors. Notice that some of the tristimulus values are negative, which indicates that colors at those wavelengths cannot be reproduced by any additive mixture of the CIE primary colors, since a light source cannot contribute a negative amount.

Download the files girl.tif and ycbcr.mat. For help on the image command, type help image at the Matlab prompt.

You will be displaying both color and monochrome images in the following exercises. Matlab's image command can be used for both image types, but care must be taken for the command to work properly. Please see the help on the image command for details.

Download the RGB color image file girl.tif, and load it into Matlab using the imread command. Check the size of the Matlab array for this image by typing whos. Notice that this is a three-dimensional array of type uint8. It contains three grayscale image planes corresponding to the red, green, and blue components of each pixel. Since each color pixel is represented by three bytes, this is commonly known as a 24-bit image.

Display the color image using

    image(A);
    axis('image');

where A is the 3-D RGB array.

You can extract each of the color components using the following commands.

    RGB = imread('girl.tif');  % color image is loaded into matrix RGB
    R = RGB(:,:,1);            % extract red component from RGB
    G = RGB(:,:,2);            % extract green component from RGB
    B = RGB(:,:,3);            % extract blue component from RGB

Use the subplot and image commands to plot the original image, along with each of the three color components. Note that while the original is a color image, each color component separately is a monochrome image. Use the syntax subplot(2,2,n), where n=1,2,3,4, to place the four images in the same figure. Place a title on each of the images, and print the figure (use a color printer).
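The plotting step above might be sketched as follows. This is only one possible arrangement, not the required solution: the variable names planes and names are illustrative, the 256-level gray colormap is one common way to make image show a uint8 plane as a monochrome picture, and the synthetic fallback image is an assumption added so the sketch runs even when girl.tif is not in the current directory.

```matlab
% Load the test image, or build a synthetic stand-in (assumption) if it is missing.
if exist('girl.tif', 'file')
    RGB = imread('girl.tif');
else
    [c, r] = meshgrid(0:255, 0:255);
    RGB = uint8(cat(3, c, r, 255 - c));   % 256x256x3 color ramp
end

% Original image followed by its red, green, and blue planes.
planes = {RGB, RGB(:,:,1), RGB(:,:,2), RGB(:,:,3)};
names  = {'Original (RGB)', 'Red component', ...
          'Green component', 'Blue component'};

figure;
colormap(gray(256));        % used only by the 2-D planes; ignored for the 3-D RGB array
for n = 1:4
    subplot(2, 2, n);
    image(planes{n});       % uint8 values 0..255 index the 256 gray levels directly
    axis('image');          % preserve the pixel aspect ratio
    title(names{n});
end
```

A true-color (3-D) array is drawn without reference to the colormap, so setting a gray colormap for the figure affects only the three component plots.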

We will now examine the YCbCr color space representation. Download the file ycbcr.mat, and load it into Matlab using load ycbcr. This file contains a Matlab array for a color image in YCbCr format. The array contains three grayscale image planes that correspond to the luminance (Y) and the two chrominance (Cb and Cr) components.

Use subplot(3,1,n) and image to display each of the components in the same figure. Place a title on each of the three monochrome images, and print the figure.

In order to properly display this color image, we need to convert it to RGB format. Write a Matlab function that will perform the transformation of Equation 17.2. It should accept a 3-D YCbCr image array as input, and return a 3-D RGB image array.

Now, convert the ycbcr array to an RGB representation and display the color image. Remember to convert the result to type uint8 before using the image command.
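As a rough guide to the structure such a function could take, here is a minimal sketch. The function name ycbcr_to_rgb_sketch is hypothetical, and the matrix T and the chrominance offset of 128 are assumptions taken from the common full-range JFIF convention; they should be replaced by the coefficients of Equation 17.2 if those differ. Unlike the wording above, this sketch performs the uint8 conversion inside the function.

```matlab
function rgb = ycbcr_to_rgb_sketch(ycbcr)
% YCBCR_TO_RGB_SKETCH  Convert an M-by-N-by-3 YCbCr array to a uint8 RGB array.
% ASSUMPTION: full-range JFIF-style coefficients with a chrominance offset
% of 128. Substitute the values from Equation 17.2 where they differ.

ycbcr = double(ycbcr);                 % work in floating point
[rows, cols, ~] = size(ycbcr);

T = [1.0   0.0        1.402;           % R = Y                 + 1.402*(Cr-128)
     1.0  -0.344136  -0.714136;        % G = Y - 0.344*(Cb-128) - 0.714*(Cr-128)
     1.0   1.772      0.0];            % B = Y + 1.772*(Cb-128)
offset = [0 128 128];                  % subtracted from [Y Cb Cr]

pix = reshape(ycbcr, rows*cols, 3);            % one pixel per row
pix = pix - repmat(offset, rows*cols, 1);      % remove the chrominance offset
rgb = reshape(pix * T.', rows, cols, 3);       % apply the 3x3 transform per pixel

rgb = uint8(rgb);                      % uint8() rounds and saturates to [0, 255]
end
```

Saved as ycbcr_to_rgb_sketch.m, it could be called as image(ycbcr_to_rgb_sketch(ycbcr)); axis('image');. Reshaping to one pixel per row lets a single matrix product transform the whole image without an explicit loop.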

An interesting property of the human visual system, with respect to the YCbCr color space, is that we are much more sensitive to distortion in the luminance component than in the chrominance components. To illustrate this, we will smooth each of these components with a Gaussian filter and view the results.

You may have noticed when you loaded ycbcr.mat into Matlab that you also loaded a 5×5 matrix, h. This is a 5×5 Gaussian filter with variance σ² = 2.0. (See the first week of the experiment for more details on this type of filter.)
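For reference, a kernel of this kind could be generated as sketched below. The name h_sketch and the unit-sum normalization are assumptions; the matrix h supplied in ycbcr.mat may be scaled differently, so use the supplied h for the exercise itself.

```matlab
% Sketch: build a 5x5 Gaussian kernel with variance sigma^2 = 2.0.
sigma2 = 2.0;
[x, y] = meshgrid(-2:2, -2:2);                  % sample offsets from the kernel center
h_sketch = exp(-(x.^2 + y.^2) / (2 * sigma2));  % unnormalized 2-D Gaussian samples
h_sketch = h_sketch / sum(h_sketch(:));         % ASSUMPTION: normalize so the taps sum to 1
```

With the taps summing to one, smoothing leaves the average brightness of a plane unchanged.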

Alter the ycbcr array by filtering only the luminance component, ycbcr(:,:,1), using the Gaussian filter (use the filter2 function). Convert the result to RGB, and display it using image.

Now alter ycbcr by filtering both chrominance components, ycbcr(:,:,2) and ycbcr(:,:,3), using the Gaussian filter. Convert this result to RGB, and display it using image.

Use subplot(3,1,n) to place the original and two filtered versions of the ycbcr image in the same figure. Place a title on each of the images, and print the figure (in color).
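The two filtering steps could be organized along the following lines. This is a sketch under stated assumptions: the random array and the kernel built here are stand-ins so the fragment runs on its own (in the lab, ycbcr and h come from load ycbcr), and the names ycbcr_lum and ycbcr_chr are illustrative. Filtering copies rather than overwriting ycbcr keeps the original available for the side-by-side comparison.

```matlab
% Stand-ins (assumptions) for the variables normally provided by "load ycbcr".
[x, y] = meshgrid(-2:2, -2:2);
h = exp(-(x.^2 + y.^2) / 4);
h = h / sum(h(:));
ycbcr = 255 * rand(64, 64, 3);

ycbcr = double(ycbcr);                 % filter in floating point

% Version 1: smooth only the luminance plane.
ycbcr_lum = ycbcr;
ycbcr_lum(:,:,1) = filter2(h, ycbcr(:,:,1));   % default output is the same size as the input

% Version 2: smooth only the two chrominance planes.
ycbcr_chr = ycbcr;
ycbcr_chr(:,:,2) = filter2(h, ycbcr(:,:,2));
ycbcr_chr(:,:,3) = filter2(h, ycbcr(:,:,3));

% Each of ycbcr, ycbcr_lum, and ycbcr_chr is then converted to RGB with
% your own YCbCr-to-RGB function and shown with image in subplot(3,1,n).
```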

Do you see a significant difference between the filtered versions and the original image? This is the reason that YCbCr is often used for digital video. Since we are not very sensitive to corruption of the chrominance components, we can afford to lose some chrominance information in the encoding process.

INLAB REPORT

Submit the figure containing the components of girl.tif.

Submit the figure containing the components of ycbcr.

Submit your code for the transformation from YCbCr to RGB.

Submit the figure containing the original and filtered versions of ycbcr. Comment on the result of filtering the luminance and chrominance components of this image. Based on this, what conclusion can you draw about the human visual system?