4. STRUCTURE

Structure analytics

Agents : System analysts, scientists, engineers;

Moves: Design and configure

- Organization structure

- Technology and medical science forums

- National level : Government, NGOs, research organizations;

- International level : strategic alliance among global organizations (nations, health, child);

- System architecture : Innovate a set of emerging technologies as per the goals of healthcare security;

- Level 1: Biomedical, biotechnology, pharmacy, pharmaceuticals, information technology, electrical, electronics, chemical, mechanical and civil engineering;

- Level 2: Identify fundamental building blocks of information technology - Computing schema, Data schema : database, big data analytics; Networking schema : web connectivity; security schema, application schema.

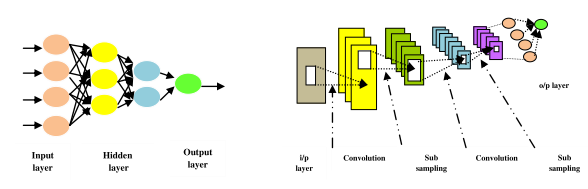

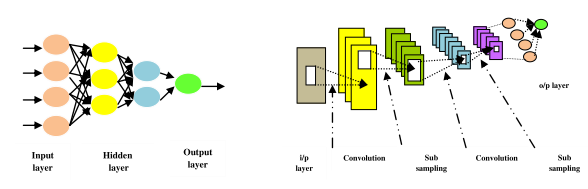

Figure 5.2: Deep learning architecture

Dr. Jim Morrison, Prof. Michel Bolton and Prof. David Nissim are analyzing the structure of the systems for health security. The first issue is the DNN system architecture. There are several classes of deep learning architectures used for biological data analysis. A convolutional neural network (CNN) has a deep architecture with several convolutional and sub-sampling layers. A stacked autoencoder consists of multiple sparse autoencoders. During layer-wise training, a deep belief network (DBN) freezes the weights of the previous layers and feeds their output to the next layer. A restricted Boltzmann machine includes a visible layer and a layer of hidden units. Mammography is a method to screen for breast cancer. Breast masses are often misdiagnosed due to variability in mass appearance and a low signal-to-noise ratio. Convolutional neural networks can be an interesting option to classify breast masses in mammograms as benign or malignant using transfer learning and efficient data processing strategies.

Deep learning represents a class of machine learning techniques that exploit many layers of non-linear information processing for supervised or unsupervised feature extraction and transformation, and for pattern recognition (e.g. classification). It is used for learning multiple levels of representation to model complex relationships among data. Higher level features and concepts are defined in terms of lower level ones, and such a hierarchy of features is known as a deep architecture. Deep learning is based on learning representations. An observation such as an image can be represented in many ways, for example as a vector of pixels, but some representations make it easier to learn from examples. Deep learning is a set of machine learning algorithms that learn at multiple levels of abstraction. It typically uses artificial neural networks such as the multi-layer feedforward neural network and the convolutional neural network.
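The idea of a hierarchy of representations can be illustrated with a minimal sketch of a multi-layer feedforward pass in plain Python. The layer sizes, the weight values and the function names `dense` and `forward` are illustrative assumptions; they are not taken from the architecture in Figure 5.2.

```python
import math

def sigmoid(x):
    """Non-linear activation applied by every unit in this sketch."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    """One fully connected layer: activation of weighted sums of the inputs."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def forward(inputs, layers):
    """Feed the input through each layer in turn.

    Returns every intermediate representation, lowest level first.
    """
    activations = [inputs]
    for weights, biases in layers:
        activations.append(dense(activations[-1], weights, biases))
    return activations

# Hypothetical toy network: 3 inputs -> 2 hidden units -> 1 output unit.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.0]),
]
representations = forward([1.0, 0.0, 1.0], layers)
```

Each entry of `representations` is computed only from the entry before it, which is the sense in which higher level features are defined in terms of lower level ones.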

There are three classes of deep learning architectures and techniques: (a) deep networks for unsupervised or generative learning, (b) deep networks for supervised learning and (c) hybrid deep networks. Unsupervised learning is used to capture high-order correlation of the visible data when no information about target class labels is available. In supervised learning, target label data are always available in direct or indirect forms. Hybrid deep networks use both unsupervised and supervised learning techniques. Many machine learning techniques use shallow structured architectures consisting of at most one or two layers. Shallow architectures are effective in solving simple problems but are not effective for complicated applications due to their limited modeling and representational power. Human information processing mechanisms need deep architectures for extracting complex structure and building internal representations from rich sensory inputs. The basic concept of deep learning comes from the domains of ANN, AI, graphical modeling, optimization, pattern recognition and signal processing. Deep learning has several advantages over shallow architectures. Increased chip processing abilities, significantly larger training data sets and recent advances in machine learning research have enabled deep learning methods to exploit complex and nonlinear functions, to learn distributed and hierarchical feature representations and to make effective use of both labeled and unlabeled data.

Deep learning is basically credit assignment in adaptive systems with long chains of causal links between actions and consequences. The goal is to assign credit accurately across many stages. A standard neural network consists of many simple connected processors or units, each producing a sequence of real-valued activations. Input units get activated through sensors perceiving the environment; other units get activated through weighted connections from previously active units. Learning or credit assignment is to find weights that make the neural network exhibit the desired behavior. A complex problem may require long causal chains of computational stages. Convolutional neural network (CNN) architectures are widely used for computer vision. The receptive field of a unit with a given weight vector is shifted step by step across the input values. The resulting array of subsequent activation events of a unit can provide inputs to higher level units.
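The shifting of a receptive field across the input can be sketched in one dimension as follows. This is a minimal illustration in plain Python; the signal, the kernel values and the function names `convolve1d` and `subsample` are assumptions chosen for the example.

```python
def convolve1d(inputs, kernel, stride=1):
    """Slide one shared weight vector across the input (valid positions only).

    As in most CNN implementations, the kernel is not flipped, so this is
    strictly a cross-correlation.
    """
    k = len(kernel)
    outputs = []
    for start in range(0, len(inputs) - k + 1, stride):
        window = inputs[start:start + k]  # current receptive field
        outputs.append(sum(w * x for w, x in zip(kernel, window)))
    return outputs

def subsample(values, size=2):
    """Max sub-sampling over non-overlapping windows."""
    return [max(values[i:i + size])
            for i in range(0, len(values) - size + 1, size)]

signal = [0, 0, 1, 1, 1, 0, 0, 0]
edge_kernel = [-1, 1]  # responds to a rising step in the signal
feature_map = convolve1d(signal, edge_kernel)  # [0, 1, 0, 0, -1, 0, 0]
pooled = subsample(feature_map)                # [1, 0, 0]
```

The same weight vector is reused at every position, and the resulting `feature_map` (optionally sub-sampled) is what a higher level unit would take as its input.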

The next critical issue is the structure of biomedical devices. The topology of the technology should be analyzed in terms of circuit intelligence: nodes, connectivity, type of connections; layers, interfaces between layers and organization of layers. Today, it is hard to form a concrete picture of the aforesaid artificial biomedical devices at the conceptual stage. The system architecture of these devices is not yet transparent; it is not a simple design. The architecture depends on the mechanism of the biomedical device and also on the computing, data, networking, application and security schema.

Tissue engineering is expected to be a good route to the innovation of the aforesaid artificial organs (e.g. artificial kidney, liver and pancreas) through cell and gene therapies. It is an emerging trend of biomedical engineering; the basic building blocks are cellular, organ and molecular biology, biotechnology, chemical and mechanical engineering and material science. It may be possible to reconstitute, maintain, simulate and improve tissue or organ functions in building artificial organs. It is an interesting research agenda whether it will be possible to replace the physiological functions of diseased tissues and living organs with synthetic materials, biological compounds and cells. Finally, the expert panel is focusing on the structure of the artificial immune system.

- Artificial immune system

- Intelligent broadcast communication system

o System : Radio, TV, Mobile phones, Social networking system, Knowledge management system;

o The sending agents broadcast data on epidemic assessment and mitigation plan, symptoms and causes of diseases.

o The receiving agents verify the security intelligence of broadcasted data such as false data injection, shilling and Sybil attacks.

- Real-time online data tracking system

- Real-time data tracking system of available healthcare services (e.g. free beds, paid beds) in hospitals and clinics at a particular zone (e.g. city, rural area, district);

- Real-time data tracking system of stock (or inventory) of medicines in retail outlets of drugs and medicines;

- Real-time data tracking system on epidemic and pandemic outbreak (e.g. [zone, country, state, district], infection, testing, recovery, death, suspected agents, prediction);

- E-governance system : Online grievance or complaint registration and tracking system for municipal corporation and gram panchayat

o Access online portal.

o Input : Enter personal data (name, address, phone number, email address, ward no.), complaint details (infection, death, gaps in municipal functions e.g. cleaning of drains, removal of garbage).

o Output : Get complaint registration number.

o Test, trace and track the status of action by municipal corporation / panchayat against complaint number.

- Telemedicine for treatment of epidemic and pandemic outbreak
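The complaint registration and tracking flow of the e-governance system listed above (enter data, receive a registration number, track the status against that number) might be sketched as follows. The class names, the `CMP-` number format and the status strings are hypothetical; a real portal would add persistence, authentication and input validation.

```python
from dataclasses import dataclass, field
from itertools import count

@dataclass
class Complaint:
    number: str
    name: str
    ward_no: int
    details: str
    # Status history; every complaint starts as "registered".
    history: list = field(default_factory=lambda: ["registered"])

    @property
    def status(self):
        return self.history[-1]

class ComplaintRegistry:
    """In-memory registry keyed by complaint registration number."""

    def __init__(self, prefix="CMP"):
        self._prefix = prefix
        self._sequence = count(1)
        self._complaints = {}

    def register(self, name, ward_no, details):
        """Input: personal data and complaint details. Output: registration number."""
        number = f"{self._prefix}-{next(self._sequence):06d}"
        self._complaints[number] = Complaint(number, name, ward_no, details)
        return number

    def update(self, number, status):
        """Record an action taken by the municipal body."""
        self._complaints[number].history.append(status)

    def track(self, number):
        """Return the latest status recorded against a complaint number."""
        return self._complaints[number].status

registry = ComplaintRegistry()
ticket = registry.register("A. Resident", 12, "Drain not cleaned")
registry.update(ticket, "assigned to sanitation team")
```

Calling `registry.track(ticket)` then returns the latest recorded action, which corresponds to the trace-and-track step of the list.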

Theorem : AIM verifies innate and adaptive system immunity in terms of collective, machine, security, collaborative and business intelligence through a multi-dimensional view of intelligent reasoning.

The proposed mechanism (AIM) is defined by a set of elements : system, a group of agents, a finite set of inputs of each agent, a finite set of outcomes as defined by output function, a set of objective functions and constraints, payment function, an optimal set of moves, revelation principle and model checking or system verification protocol. The proposed mechanism evaluates the innate and adaptive immunity of a system which is defined by a set of states (e.g. initial, goal, local and global) and state transition relations.

The mechanism follows a set of AI moves. The basic building block of the mechanism is an analytics with a multidimensional view of intelligent reasoning. Reasoning has multiple dimensions such as common sense, automated theorem proving, planning, understanding, hypothetical reasoning, simulation dynamics and envisioning, i.e. imagination or anticipation of alternatives. The inference engine selects appropriate reasoning techniques from a list of options such as logical, analytical, case based, forward and backward chaining, sequential, parallel, uncertainty, probabilistic, approximation, predictive, imaginative and perception based reasoning, depending on the demand of an application. Another important move is the collection of evidence through private search, which may require an optimal balance between breadth and depth. It is computationally hard to simulate the intelligence of a detective with high IQ in a mechanism; can you recall the reasoning style of Ace Ventura or Sherlock Holmes in collecting the right evidence to solve critical cases?
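Of the reasoning techniques listed above, forward chaining is the simplest to illustrate. The following is a minimal sketch; the rule base and fact names are hypothetical examples and do not come from the proposed mechanism.

```python
def forward_chain(facts, rules):
    """Apply rules of the form (premises, conclusion) until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire a rule when all its premises are known and it adds a new fact.
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                changed = True
    return known

# Hypothetical rule base for an epidemic screening scenario.
rules = [
    (("fever", "cough"), "suspected_infection"),
    (("suspected_infection", "contact_with_case"), "recommend_test"),
]
derived = forward_chain({"fever", "cough", "contact_with_case"}, rules)
```

Here `derived` contains `"recommend_test"` because the first rule produces the premise that the second rule needs. Backward chaining would instead start from the goal `"recommend_test"` and search for rules that can establish it.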

The critical challenge is how to detect the danger signal from a system. The mechanism evaluates system immunity (i) combinatorially in terms of collective intelligence, machine intelligence, security intelligence, collaborative intelligence and business intelligence. The collective intelligence (a) is defined in terms of scope, input, output, process, agents and system dynamics. For a complex application, it verifies coordination and integration among system, strategy, structure, staff, style, skill and shared vision. What is being done by the various components of a system? Who is doing it? Why? How? Where? When? The machine intelligence (b) checks the system in terms of safety, liveness, concurrency, reachability, deadlock freeness, scalability and accuracy. For example, it should check preconditions, postconditions, triggering events, main flow, sub flow, alternate flow, exception flow, computational intelligence, communication cost, traffic congestion, time and space complexity, resources, capacity utilization, load, initial and goal states, local and global states and state transition plans of an information system.

The security intelligence (c) verifies the system in terms of authentication, authorization, correct identification, non-repudiation, integrity, audit and privacy; rationality, fairness, correctness, resiliency, adaptation, transparency, accountability, trust, commitment, reliability and consistency. The collaborative intelligence (d) evaluates the feasibility and effectiveness of human-computer interaction to achieve single or multiple sets of goals, the information sharing principle and the negotiation protocol. The business intelligence (e) looks after business rules such as payment function, cost sharing, bonus, contractual clauses, quality, performance, productivity, incentive policy and competitive intelligence.

The basic protocol of AIM is based on bio-inspired artificial intelligence and immunological theories such as negative selection, danger signal detection, clonal selection, suppression and hypermutation. Negative selection is an immune-inspired classification scheme. For a given set S of self sensor nodes, it generates a set D of detectors that do not match any element of S. These detectors are then used to partition a monitor set M into self and non-self elements. The problem faced by the human immune system is similar to that of the information and communication technology schema. It is difficult to defend a system against a previously unknown danger. The only reliable knowledge is the normal behavior of the system, which is equivalent to the self nodes of the distributed system. Negative selection mimics the human immune system: it generates a set of detectors that do not match the self nodes, and these detectors are used to monitor the abnormal behavior of the distributed system caused by the attack of non-self nodes. Danger threatens living organisms; the danger theory suggests that the human immune system detects danger to trigger appropriate immune responses. The optimal trade-off between the concentration of danger and safe signals within human tissues produces proper immune responses. Danger also threatens distributed computing systems. Danger theory can be applied to intrusion detection. It is an interesting option to build a computational model which can define and detect danger signals. The danger signals should be detected fast and automatically to minimize the impact of malicious attacks by intruders on a distributed system.
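The negative selection scheme described above (self set S, detector set D, monitor set M) can be sketched as follows. This is a minimal illustration that assumes nodes are encoded as fixed-length bit tuples and that "matching" means a Hamming distance within a chosen radius; the source fixes neither the encoding nor the matching rule, so both are assumptions, as are all function names.

```python
import random

def hamming(a, b):
    """Number of positions at which two equal-length bit tuples differ."""
    return sum(x != y for x, y in zip(a, b))

def matches(detector, sample, radius):
    """Assumed matching rule: within `radius` bit flips of the detector."""
    return hamming(detector, sample) <= radius

def generate_detectors(self_set, n_detectors, length, radius, rng, max_tries=10000):
    """Build D: random candidates are kept only if they match no element of S."""
    detectors = []
    tries = 0
    while len(detectors) < n_detectors and tries < max_tries:
        tries += 1
        candidate = tuple(rng.randint(0, 1) for _ in range(length))
        if not any(matches(candidate, s, radius) for s in self_set):
            detectors.append(candidate)
    return detectors

def partition(monitor_set, detectors, radius):
    """Split M into self-like elements and elements flagged as non-self."""
    self_like, non_self = [], []
    for sample in monitor_set:
        if any(matches(d, sample, radius) for d in detectors):
            non_self.append(sample)
        else:
            self_like.append(sample)
    return self_like, non_self

rng = random.Random(42)  # fixed seed so the sketch is repeatable
self_set = [(0, 0, 0, 0, 0, 0, 0, 0), (0, 0, 0, 0, 0, 0, 0, 1)]
detectors = generate_detectors(self_set, n_detectors=40, length=8, radius=2, rng=rng)
monitor_set = self_set + [(1, 1, 1, 1, 1, 1, 1, 1)]
self_like, non_self = partition(monitor_set, detectors, radius=2)
```

By construction no detector matches a self element, so self nodes are never flagged. Whether a particular non-self element is flagged depends on how well the randomly generated detectors cover the non-self space, which is why the number of detectors and the matching radius are the main tuning parameters.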

The mechanism verifies system immunity through a set of verification algorithms. It is possible to follow various strategies such as model checking, simulation, testing and deductive reasoning for automated verification. Simulation is done on the model while testing is performed on the actual product. Testing checks the correctness of the output for a given input. Deductive reasoning tries to check the correctness of a system using axioms and proof rules. There is a risk of state space explosion in the case of a complex system with many components interacting with one another; it may be hard to evaluate the efficiency of coordination and integration appropriately. Some applications also demand semi-automated and natural verification protocols. The mechanism calls threat analytics and assesses the risks of single or multiple attacks on the system under consideration. It analyzes performance, sensitivity, trends, exceptions and alerts. It checks what is corrupted or compromised: agents, protocol, communication, data, application or computing schema. It performs time series analysis (what occurred? what is occurring? what will occur?) and assesses the probability of occurrence and impact. It explores insights through cause-effect analysis: how and why did it occur? It recommends the next best action and predicts the best or worst that can happen.

Theorem: The computational intelligence is associated with the cost of verification algorithms of system immunity and the complexity of threat analytics.

The verification system requires both automated and semi-automated verification options. The verification system calls threat analytics and a set of model checking algorithms for various phases : exploratory phase for locating errors, fault finding phase through cause-effect analysis, diagnostics tool for program model checking and real-time system verification. Model checking is basically the process of automated verification of the properties of the system under consideration. Given a formal model of a system and a property specification in some form of computational logic, the task is to validate whether or not the specification is satisfied in the model. If not, the model checker returns a counterexample for the system’s flawed behavior to support the debugging of the system. Another important aspect is to check whether a knowledge based system is consistent or contains anomalies through a set of diagnostics tools.

There are two different phases : an exploratory phase to locate errors and a fault finding phase to look for short error trails. Model checking is an efficient verification technique for communication protocol validation, embedded systems, software programs, workflow analysis and schedule checks. The basic objective of the model checking algorithm is to locate errors in a system efficiently. If an error is found, the model checker produces a counterexample showing how the error occurs, to support debugging of the system. A counterexample may be an execution of the system, i.e. a path or tree. A model checker is expected to find error states efficiently and produce a simple counterexample. There are two primary approaches to model checking: symbolic and explicit state. Symbolic model checking applies to a symbolic representation of the state set for property validation. The explicit state approach searches the global states of a system through a transition function. The efficiency of model checking algorithms is measured in terms of automation and error reporting capabilities. The computational intelligence is also associated with the complexity of threat analytics equipped with the features of data visualization and performance measurement.
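The explicit state approach and the notion of a short counterexample can be illustrated with a breadth-first search over reachable states. This is a minimal sketch and not a production model checker; the two-process example system and the names `check_safety`, `transitions` and `guarded` are assumptions made for the illustration.

```python
from collections import deque

def check_safety(initial, transitions, is_error):
    """Explicit-state search of the reachable state space.

    Returns None if no error state is reachable; otherwise returns a
    shortest path (counterexample) from the initial state to an error state.
    """
    parent = {initial: None}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        if is_error(state):
            path = []
            while state is not None:  # walk back to the initial state
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in transitions(state):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None

# Hypothetical system: two processes cycling idle -> trying -> critical -> idle.
NEXT = {"idle": "trying", "trying": "critical", "critical": "idle"}

def transitions(state):
    """Unprotected version: either process may advance at any time."""
    a, b = state
    return [(NEXT[a], b), (a, NEXT[b])]

def guarded(state):
    """Protected version: a move into the joint critical section is disabled."""
    return [s for s in transitions(state) if s != ("critical", "critical")]

def both_critical(state):
    return state == ("critical", "critical")

counterexample = check_safety(("idle", "idle"), transitions, both_critical)
safe_result = check_safety(("idle", "idle"), guarded, both_critical)
```

For the unprotected system, `counterexample` is a five-state path ending in `("critical", "critical")`; for the guarded system, `safe_result` is `None`. Breadth-first order is what guarantees that the reported error trail is as short as possible. The `parent` dictionary holds every visited state, which is where the state space explosion problem shows up for larger systems.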

The computational intelligence may be associated with four decisions depending on the type of application: encoding, similarity measure, selection and mutation. Antigens and antibodies are encoded in the same way. An antigen is the target or solution. The antibodies are the remainder of the data. Once an efficient encoding and a suitable similarity measure are fixed, the algorithm performs selection and mutation, both based on the similarity measure, until the stopping criteria are met.
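The four decisions can be made concrete with a small clonal-selection style loop. This is a sketch under stated assumptions: bit-tuple encoding, similarity measured as the number of agreeing bits, selection of the top few antibodies and a mutation rate that falls as similarity rises. None of these specific choices, nor the parameter values and function names, come from the source.

```python
import random

def affinity(antibody, antigen):
    """Similarity measure: number of positions where the bits agree."""
    return sum(a == g for a, g in zip(antibody, antigen))

def mutate(antibody, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return tuple(1 - bit if rng.random() < rate else bit for bit in antibody)

def clonal_selection(antigen, pop_size=20, n_select=5, n_clones=4,
                     max_generations=200, seed=0):
    rng = random.Random(seed)
    length = len(antigen)
    # Encoding: antibodies use the same bit-tuple encoding as the antigen.
    population = [tuple(rng.randint(0, 1) for _ in range(length))
                  for _ in range(pop_size)]
    for _ in range(max_generations):
        population.sort(key=lambda ab: affinity(ab, antigen), reverse=True)
        if affinity(population[0], antigen) == length:  # stopping criterion
            break
        selected = population[:n_select]  # selection by similarity
        clones = []
        for ab in selected:
            # Lower similarity gives a higher mutation rate.
            rate = 1.0 - affinity(ab, antigen) / length
            clones.extend(mutate(ab, rate, rng) for _ in range(n_clones))
        # Selected antibodies are kept, so the best similarity never decreases.
        population = selected + clones
    return max(population, key=lambda ab: affinity(ab, antigen))

antigen = (1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 0, 1)
best = clonal_selection(antigen)
```

The loop stops either when an antibody matches the antigen exactly or when the generation limit is reached, which are the two stopping criteria assumed here.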

The threat analytics analyzes system performance, sensitivity, trends, exceptions and alerts along two dimensions: time and insights. The analysis on the time dimension may be as follows: what is corrupted or compromised in the system (agents, communication schema, data schema, application schema, computing schema or protocol)? What occurred? What is occurring? What will occur? It assesses the probability of occurrence and impact. The analysis on insights may be as follows: how and why did the threat occur? What is the output of cause-effect analysis? The analytics also recommends the next best action and predicts the best or worst that can happen.
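The step of assessing probability of occurrence and impact can be sketched as a simple risk ranking. The scoring rule used here (risk as probability multiplied by impact), the alert threshold and all sample values are assumptions for illustration; the source does not specify how the two quantities are combined.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Threat:
    component: str      # e.g. "data schema", "protocol"
    probability: float  # estimated likelihood of occurrence, 0..1
    impact: float       # estimated severity on an assumed 0..10 scale

    @property
    def risk(self):
        # Assumed scoring rule: expected impact.
        return self.probability * self.impact

def rank_threats(threats, alert_threshold=3.0):
    """Order threats by risk and list those that should raise an alert."""
    ranked = sorted(threats, key=lambda t: t.risk, reverse=True)
    alerts = [t for t in ranked if t.risk >= alert_threshold]
    return ranked, alerts

# Hypothetical assessment of three system components.
threats = [
    Threat("data schema", 0.6, 8.0),
    Threat("communication schema", 0.2, 5.0),
    Threat("protocol", 0.5, 9.0),
]
ranked, alerts = rank_threats(threats)
```

With these sample values the data schema ranks first, followed by the protocol, and both exceed the alert threshold, so the recommended next best action would target those two components first.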