Finding the average value of a set of random signals or random
variables is probably the most fundamental concept we use in
evaluating random processes through any sort of statistical
method. The mean of a random process is the average of all
realizations of that process. In order to find this average, we
must look at a random signal over a range of time (possible values)
and determine our average from this set of values. The mean, or
average, of a random process, $x(t)$, is given by the following
equation:

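$$\mu_x(t) = \overline{X} = E[X] = \int_{-\infty}^{\infty} x \, f(x) \, dx$$

where $f(x)$ is the probability density function of the random variable.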

This equation may seem quite cluttered at first glance, but we
want to introduce you to the various notations used to represent
the mean of a random signal or process. Throughout texts and other
readings, remember that these all mean the same thing. The symbol
$\mu_x(t)$ and the X with a bar over it are often used as shorthand
for an average, so you might see them in certain textbooks. The
other important notation is $E[X]$, which represents the "expected
value of X", or the mathematical expectation. This notation is very
common and will appear again.

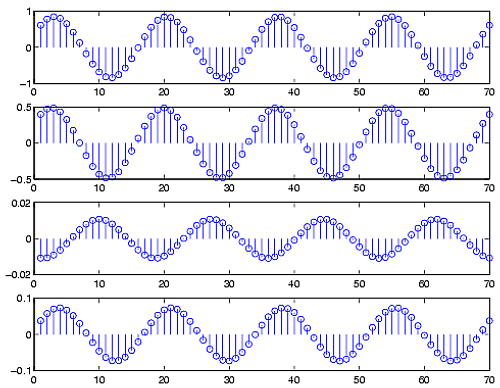
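As a rough illustration of the two views of the mean described above, the sketch below computes the expectation integral of x times a density numerically and compares it with the average over many simulated realizations. The Gaussian density, its parameters (`mu = 1.0`, `sigma = 2.0`), the integration limits, and all function names are assumptions chosen for the example, not part of the original text.

```python
import math
import random

def gaussian_pdf(x, mu=1.0, sigma=2.0):
    """Density f(x) of a Gaussian; mu and sigma are illustrative assumptions."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def mean_from_density(pdf, lo, hi, steps=20000):
    """Approximate E[X] = integral of x * f(x) dx using the midpoint rule."""
    dx = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        x = lo + (k + 0.5) * dx  # midpoint of the k-th slice
        total += x * pdf(x) * dx
    return total

def mean_from_realizations(n, mu=1.0, sigma=2.0, seed=0):
    """Average n independent draws, standing in for an ensemble of realizations."""
    rng = random.Random(seed)
    return sum(rng.gauss(mu, sigma) for _ in range(n)) / n

# Integrate over mu +/- 10 sigma, where the tails are negligible.
density_mean = mean_from_density(gaussian_pdf, -19.0, 21.0)
ensemble_mean = mean_from_realizations(100_000)
print(density_mean, ensemble_mean)
```

Both numbers should land close to the assumed mean of 1.0. The density-based value is limited only by the integration step, while the ensemble average fluctuates with the number of realizations.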

If the random variables that make up our random process are
discrete or quantized values, such as in a binary process, then the
integrals become summations over all the possible values of the
random variable. In this case, our expected value becomes

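$$E[X] = \sum_{\alpha} \alpha \, P(X = \alpha)$$

where the sum runs over every possible value $\alpha$ of the random variable.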

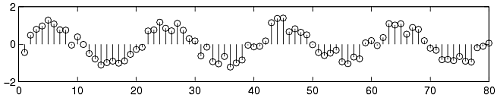
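For a discrete random variable, the summation can be written in a few lines. This is a minimal sketch: the function name `expected_value`, the dictionary representation of the probabilities, and the example probabilities (0.3 and 0.7 for a binary process) are assumptions made for illustration.

```python
def expected_value(pmf):
    """Return the sum of value * probability over all possible values.

    pmf maps each possible value to its probability (hypothetical layout).
    """
    total_prob = sum(pmf.values())
    if abs(total_prob - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(value * prob for value, prob in pmf.items())

# Binary process that takes the value 1 with probability 0.7 (assumed numbers).
binary_pmf = {0: 0.3, 1: 0.7}
binary_mean = expected_value(binary_pmf)

# A fair six-sided die as a second quantized example.
die_mean = expected_value({face: 1 / 6 for face in range(1, 7)})
print(binary_mean, die_mean)
```

For the binary case the mean is simply the probability of a 1, which is a quick sanity check on the formula.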

If we have two random signals or variables, their averages can
reveal how the two signals interact. If the product of the two
individual averages equals the average of the product of the two
signals, that is, if $E[X]E[Y] = E[XY]$, then the two signals are
said to be linearly independent, also referred to as uncorrelated.

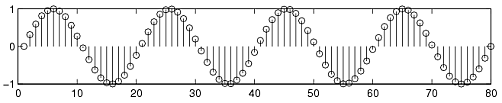
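The comparison between the average of the product and the product of the averages can be estimated from samples. The sketch below is only an illustration under assumed conditions: the signals are simulated Gaussian samples, `y_related` is a made-up signal built from `x` plus noise, and the helper names are hypothetical.

```python
import random

def mean(values):
    """Arithmetic average of a list of numbers."""
    return sum(values) / len(values)

def product_gap(xs, ys):
    """Estimate E[XY] - E[X]E[Y] from paired samples; near 0 when uncorrelated."""
    avg_of_product = mean([x * y for x, y in zip(xs, ys)])
    product_of_avgs = mean(xs) * mean(ys)
    return avg_of_product - product_of_avgs

rng = random.Random(1)  # fixed seed so the run is repeatable
n = 50_000
x = [rng.gauss(0.0, 1.0) for _ in range(n)]
noise = [rng.gauss(0.0, 1.0) for _ in range(n)]

# A signal that depends on x (assumed form: 2*x plus independent noise).
y_related = [2.0 * xi + ni for xi, ni in zip(x, noise)]

gap_independent = product_gap(x, noise)   # expected to be close to 0
gap_related = product_gap(x, y_related)   # expected to be close to 2
print(gap_independent, gap_related)
```

With a finite number of samples the gap is never exactly zero, so in practice it is compared against a small tolerance rather than tested for equality.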

When we have a random process in which only one sample can be
viewed at a time, we will often not have all the information
needed to calculate the mean using the density function as shown
above. In this case we must estimate the mean through the time-av